Affective haptics

Affective haptics is an emerging area of research that focuses on the study and design of devices and systems that can elicit, enhance, or influence a person's emotional state by means of the sense of touch. The research field originated with papers by Dzmitry Tsetserukou and Alena Neviarouskaya[1][2] on affective haptics and on a real-time communication system with rich emotional and haptic channels. Motivated by the goal of enhancing social interactivity and the emotionally immersive experience of users of real-time messaging, virtual reality, and augmented reality, researchers proposed the idea of reinforcing (intensifying) one's own feelings and reproducing (simulating) the emotions felt by a partner. Four basic haptic (tactile) channels governing our emotions can be distinguished: (1) physiological changes (e.g., heart rate, body temperature), (2) physical stimulation (e.g., tickling), (3) social touch (e.g., hug, handshake), and (4) emotional haptic design (e.g., shape, material, and texture of the device).

Emotion theories

According to the James–Lange theory,[3] the conscious experience of emotion occurs after the cortex receives signals about changes in physiological state. Its proponents argued that feelings are preceded by certain physiological changes: when we see a venomous snake, we feel fear because our cortex has received signals about our racing heart, knocking knees, and so on. Damasio[4] distinguishes primary and secondary emotions; both involve changes in bodily states, but secondary emotions are evoked by thoughts. More recent empirical studies support non-cognitive theories of the nature of emotions. For example, emotions can be evoked by something as simple as changing one's facial expression (e.g., smiling can bring on a feeling of happiness).[5]

Sense of touch in affective haptics

Human emotions can easily be evoked by different cues, and the sense of touch is one of the most emotionally charged channels. Affective haptic devices can stimulate different senses of touch, including the kinesthetic and cutaneous channels. Kinesthetic stimuli, which are produced by forces exerted on the body, are sensed by mechanoreceptors in the tendons and muscles. Mechanoreceptors in the skin layers, on the other hand, are responsible for the perception of cutaneous stimuli. Different types of tactile corpuscles allow us to sense the thermal properties of an object, pressure, vibration frequency, and the location of a stimulus.[6]

Technologies of affective haptics

Social touch

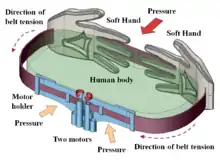

Online interactions rely heavily on vision and hearing, so there is a substantial need for mediated social touch.[7] Of the forms of physical contact, hugging is particularly emotionally charged: it conveys warmth, love, and affiliation. Researchers have made several attempts to create hugging devices that provide some sense of physical co-presence over a distance.[8][9] The key feature of HaptiHug[10] is that it physically reproduces the human hug pattern, generating pressure simultaneously on each user's chest and back. To reproduce hugging realistically, the system combines the active-haptic device HaptiHug with pseudo-haptic touch simulated by a hugging animation, with the aim of achieving high immersion in the partners' physical contact.
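The simultaneous chest-and-back pressure described above can be illustrated with a minimal Python sketch. It assumes a trapezoidal pressure envelope (ramp up, hold, release) with hypothetical durations on a normalized 0 to 1 pressure scale; the functions `hug_envelope` and `hug_commands` and the `chest`/`back` channel names are illustrative only and do not describe HaptiHug's actual control software.

```python
def hug_envelope(peak: float = 1.0, ramp_s: float = 0.5, hold_s: float = 1.0,
                 release_s: float = 0.5, dt: float = 0.1) -> list[float]:
    """Sample a hypothetical trapezoidal pressure envelope, normalized to 0..peak."""
    total_s = ramp_s + hold_s + release_s
    n_steps = round(total_s / dt)
    samples = []
    for i in range(n_steps + 1):
        t = i * dt
        if t < ramp_s:                    # pressure builds up
            p = peak * t / ramp_s
        elif t < ramp_s + hold_s:         # the embrace is held
            p = peak
        else:                             # pressure is released
            p = peak * max(0.0, (total_s - t) / release_s)
        samples.append(round(p, 3))
    return samples


def hug_commands(**envelope_args) -> list[dict[str, float]]:
    """Send the same pressure to the (hypothetical) chest and back actuators at
    every time step, so both sides of the body are squeezed simultaneously."""
    return [{"chest": p, "back": p} for p in hug_envelope(**envelope_args)]
```

For example, `hug_envelope(dt=0.25)` yields nine samples that rise to full pressure, hold, and fall back to zero. Driving both channels from one envelope is the simplest way to guarantee simultaneity; a real device would also need per-user calibration and safety limits.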

Intimate touch

Affection goes beyond hugging alone. People in long-distance relationships face a lack of physical intimacy on a day-to-day basis. Haptic technology allows for the design of kinesthetic and tactile interfaces, and the field of digital co-presence also encompasses teledildonics. The Kiiroo SVir is an example of an adult cybertoy that combines tactile input, through a touch-capacitive surface, with a kinesthetic interior. Its twelve rings contract, pulse, and vibrate according to the partner's movements in real time. The SVir mimics the actual motion of its OPue counterpart and is also compatible with other SVir models. Through the OPue interactive vibrator, the SVir enables women to have intercourse with their partner regardless of the distance that separates the couple.

Implicit emotion elicitation

Different types of devices can be used to produce physiological changes. Of the bodily organs, the heart plays a particularly important role in emotional experience. The heart imitator HaptiHeart[2] produces specific heartbeat patterns according to the emotion to be conveyed or elicited: sadness is associated with a slightly intense heartbeat, anger with a quick and violent heartbeat, and fear with an intense heart rate. False heartbeat feedback can be interpreted as the user's real heartbeat and can therefore change emotional perception. HaptiButterfly[2] reproduces “butterflies in your stomach” (the fluttery or tickling feeling experienced by people in love) through arrays of vibration motors attached to the user's abdomen. HaptiShiver[2] sends “shivers up and down your spine” through a row of vibration motors. HaptiTemper[2] sends “chills up and down your spine” through both cold airflow from a fan and the cold side of a Peltier element. HaptiTemper is also intended to simulate warmth on the skin to evoke either a pleasant feeling or aggression.
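The emotion-to-heartbeat mapping described above can be sketched as a lookup table that produces a beat schedule for an actuator. The source describes the patterns only qualitatively, so the BPM and amplitude values below are hypothetical placeholders, as are the names `HeartbeatPattern`, `PATTERNS`, and `beat_schedule`; a real device would need values chosen and validated experimentally.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class HeartbeatPattern:
    bpm: float        # beats per minute (hypothetical value)
    amplitude: float  # normalized actuator intensity, 0.0 to 1.0 (hypothetical)


# Hypothetical parameters chosen only to mirror the qualitative descriptions.
PATTERNS = {
    "neutral": HeartbeatPattern(bpm=70, amplitude=0.4),
    "sadness": HeartbeatPattern(bpm=65, amplitude=0.55),  # slightly intense
    "anger": HeartbeatPattern(bpm=120, amplitude=0.9),    # quick and violent
    "fear": HeartbeatPattern(bpm=100, amplitude=0.8),     # intense
}


def beat_schedule(emotion: str, duration_s: float) -> list[tuple[float, float]]:
    """Return (onset_time_s, amplitude) for every beat that starts within duration_s.

    Unknown emotion labels fall back to the neutral pattern.
    """
    pattern = PATTERNS.get(emotion, PATTERNS["neutral"])
    period_s = 60.0 / pattern.bpm
    n_beats = math.ceil(duration_s / period_s)
    return [(round(i * period_s, 3), pattern.amplitude) for i in range(n_beats)]
```

With these placeholder values, six seconds of "anger" produce twelve strong beats, while the same interval of "sadness" produces seven weaker ones. Computing onsets as multiples of the period, rather than by repeated addition, avoids drift from accumulated floating-point error.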

Explicit emotion elicitation

HaptiTickler[2] directly evokes joy by tickling the user's ribs. It includes four vibration motors that reproduce stimuli similar to human finger movements.
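Firing the four motors in sequence is one way to approximate a moving fingertip. The minimal sketch below assumes that each cycle sweeps the motors in order with overlapping pulses; the timing values and the function name `tickle_events` are hypothetical and are not HaptiTickler's published parameters.

```python
def tickle_events(num_motors: int = 4, on_ms: int = 120, step_ms: int = 80,
                  cycles: int = 3) -> list[tuple[int, int, int]]:
    """Return (start_ms, motor_index, duration_ms) events.

    Within each cycle the motors fire one after another. Because step_ms is
    shorter than on_ms, neighbouring pulses overlap, which is intended to
    suggest a stroke moving along the ribs rather than separate taps.
    """
    events = []
    cycle_ms = num_motors * step_ms  # the next sweep starts after the last motor's onset
    for c in range(cycles):
        for m in range(num_motors):
            events.append((c * cycle_ms + m * step_ms, m, on_ms))
    return events
```

Each event tuple can be handed to whatever motor driver is available; the overlap between consecutive pulses is the main design parameter to tune.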

Affective (emotional) haptic design

Recent findings show that attractive things make people feel good, which in turn makes them think more creatively.[11] The concept of emotional haptic design was proposed.[2] The core idea is to make the user feel an affinity for the device through:

- appealing shapes evoking the desire to touch and haptically explore them,

- materials that are pleasurable to touch, and

- the anticipation of the pleasure of wearing the device.

Affective computing

Affective computing can be used to measure and recognize emotional information in systems and devices employing affective haptics. Emotional information is extracted using techniques such as speech recognition, natural language processing, facial expression detection, and measurement of physiological data.
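When several of these techniques run at once, their estimates have to be combined. One simple option is weighted late fusion, sketched below under the assumption that each modality outputs a score per emotion label; the modality names, the weights, and the function `fuse_emotions` are hypothetical and are not taken from any particular affective-haptics system.

```python
def fuse_emotions(scores_by_modality: dict[str, dict[str, float]],
                  weights: dict[str, float]) -> str:
    """Weighted late fusion: sum weight * score per emotion and return the top label."""
    totals: dict[str, float] = {}
    for modality, scores in scores_by_modality.items():
        weight = weights.get(modality, 0.0)  # modalities without a weight are ignored
        for emotion, score in scores.items():
            totals[emotion] = totals.get(emotion, 0.0) + weight * score
    if not totals:
        raise ValueError("no emotion scores supplied")
    return max(totals, key=totals.get)
```

For example, if text analysis gives joy 0.7 and sadness 0.3 while facial expression detection gives joy 0.2 and sadness 0.8, weights of 0.4 for text and 0.6 for the face yield totals of 0.40 for joy and 0.60 for sadness, so "sadness" is returned. Late fusion keeps each recognizer independent; fusing raw features instead can capture cross-modal cues but requires joint training.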

Potential applications

Possible applications are as follows:

- treating depression and anxiety (problematic emotional states),

- controlling and modulating moods on the basis of physiological signals,

- affective and collaborative games,

- psychological testing,

- assistive technology and augmentative communication systems for children with autism.

Affective haptics is at the forefront of emotional telepresence,[12] a technology that lets users feel emotionally as if they were present and communicating at a remote physical location. The remote environment can be real, virtual, or augmented.

Application examples

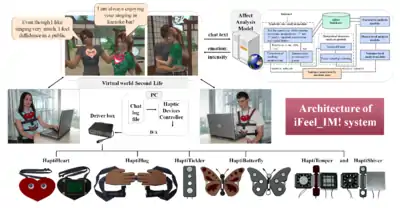

The philosophy behind iFeel_IM! (intelligent system for Feeling enhancement powered by affect-sensitive Instant Messenger) is “I feel [therefore] I am!”. The iFeel_IM! system places great importance on the automatic sensing of emotions conveyed through textual messages in the 3D virtual world Second Life (using artificial intelligence), the visualization of the detected emotions by avatars in the virtual environment, the enhancement of the user's affective state, and the reproduction of the feeling of social touch (e.g., a hug) by means of haptic stimulation in the real world. The conversation is controlled through a Second Life object called EmoHeart[13] attached to the avatar's chest. In addition to communicating with the system for textual affect sensing (Affect Analysis Model),[14] EmoHeart is responsible for sensing symbolic cues or keywords that convey the ‘hug’ communicative function in text and for visualizing ‘hugging’ in Second Life. The iFeel_IM! system is intended to considerably enhance the emotionally immersive experience of real-time messaging.
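The keyword-based part of hug detection can be illustrated with a short sketch. The cue lists, the action names `play_hug_animation` and `actuate_hug_device`, and the functions `detect_hug` and `hug_actions` are hypothetical; this is a plain keyword match, not the Affect Analysis Model or EmoHeart's actual implementation.

```python
import re

# Hypothetical cue lists for the 'hug' communicative function.
HUG_WORDS = {"hug", "hugs", "hugging", "hugged"}
HUG_SYMBOLS = ("{{}}", "((()))")


def detect_hug(message: str) -> bool:
    """Return True if the message contains a hug keyword (whole word) or symbol."""
    text = message.lower()
    words = set(re.findall(r"[a-z]+", text))
    return bool(words & HUG_WORDS) or any(sym in text for sym in HUG_SYMBOLS)


def hug_actions(message: str) -> list[str]:
    """Map a detected hug cue to an avatar animation and a haptic device command."""
    if detect_hug(message):
        return ["play_hug_animation", "actuate_hug_device"]
    return []
```

Matching whole words rather than substrings avoids false positives such as "huge news", at the cost of missing creative spellings that a full affect-sensing model could handle.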

To build a social interface, Réhman et al.[15][16] developed two tactile systems (one based on a mobile phone and one based on a chair) that could render human facial expressions for visually impaired persons. These systems conveyed human emotions to visually impaired persons using vibrotactile stimuli.

Philips researchers developed a wearable tactile jacket that applies movie-specific tactile stimuli to the viewer's body in order to influence the viewer's emotions.[17] The motivation was to increase emotional immersion while watching a movie. The jacket contains 64 vibration motors that produce specially designed tactile patterns on the human torso.
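The source does not describe how the jacket's 64 motors are arranged. Purely for illustration, the sketch below assumes an 8 by 8 grid on the torso and generates a simple pattern that activates one row of motors at a time, moving from the bottom of the torso to the top; the layout, the intensity scale, and the function name `upward_sweep` are all hypothetical.

```python
ROWS, COLS = 8, 8  # hypothetical 8 x 8 layout of the 64 motors; row 0 is the top


def upward_sweep(intensity: float = 1.0) -> list[list[list[float]]]:
    """Return one frame per row; each frame is a ROWS x COLS intensity grid in
    which only one row is active, moving from the bottom row to the top row."""
    frames = []
    for active_row in range(ROWS - 1, -1, -1):
        frame = [[intensity if row == active_row else 0.0 for _ in range(COLS)]
                 for row in range(ROWS)]
        frames.append(frame)
    return frames
```

Playing the frames at a fixed rate produces a stimulus that travels up the torso; richer patterns could be built the same way by composing or blending frames.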


References

- Tsetserukou, Dzmitry; Alena Neviarouskaya; Helmut Prendinger; Naoki Kawakami; Mitsuru Ishizuka; Susumu Tachi (2009). "Enhancing Mediated Interpersonal Communication through Affective Haptics". Intelligent Technologies for Interactive Entertainment. INTETAIN: International Conference on Intelligent Technologies for Interactive Entertainment. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering. 9. Amsterdam: Springer. pp. 246–251. CiteSeerX 10.1.1.674.243. doi:10.1007/978-3-642-02315-6_27. ISBN 978-3-642-02314-9.

- Tsetserukou, Dzmitry; Alena Neviarouskaya; Helmut Prendinger; Naoki Kawakami; Susumu Tachi (2009). "Affective Haptics in Emotional Communication" (PDF). 2009 3rd International Conference on Affective Computing and Intelligent Interaction and Workshops. Amsterdam, the Netherlands: IEEE Press. pp. 181–186. doi:10.1109/ACII.2009.5349516. ISBN 978-1-4244-4800-5.

- James, William (1884). "What is an Emotion?" (PDF). Mind. 9 (34): 188–205. doi:10.1093/mind/os-IX.34.188.

- Damasio, Antonio (2000). The Feeling of What Happens: Body, Emotion and the Making of Consciousness. Vintage. ISBN 978-0-09-928876-3.

- Zajonc, Robert B.; Sheila T. Murphy; Marita Inglehart (1989). "Feeling and Facial Efference: Implications of the Vascular Theory of Emotion" (PDF). Psychological Review. 96 (3): 395–416. doi:10.1037/0033-295X.96.3.395. PMID 2756066.

- Kandel, Eric R.; James H. Schwartz; Thomas M. Jessell (2000). Principles of Neural Science. McGraw-Hill. ISBN 978-0-8385-7701-1.

- Haans, Antal; Wijnand A. IJsselsteijn (2006). "Mediated Social Touch: a Review of Current Research and Future Directions". Virtual Reality. 9 (2–3): 149–159. doi:10.1007/s10055-005-0014-2. PMID 24807416.

- DiSalvo, Carl; F. Gemperle; J. Forlizzi; E. Montgomery (2003). The Hug: an Exploration of Robotic Form for Intimate Communication. Millbrae: IEEE Press. pp. 403–408. doi:10.1109/ROMAN.2003.1251879. ISBN 0-7803-8136-X.

- Mueller, Florian; F. Vetere; M.R. Gibbs; J. Kjeldskov; S. Pedell; S. Howard (2005). "Hug over a Distance" (PDF). Proc. of the ACM Conf. on Human Factors in Computing Systems (CHI 05). Portland, USA: ACM Press. pp. 1673–1676. Archived from the original (PDF) on 2010-09-23.

- Tsetserukou, Dzmitry (2010). "HaptiHug: a Novel Haptic Display for Communication of Hug over a Distance". Haptics: Generating and Perceiving Tangible Sensations. EuroHaptics: International Conference on Human Haptic Sensing and Touch Enabled Computer Applications. Lecture Notes in Computer Science. 6191. Amsterdam: Springer. pp. 340–347. doi:10.1007/978-3-642-14064-8_49. ISBN 978-3-642-14063-1.

- Norman, Donald A. (2004). Emotional Design: Why We Love (or Hate) Everyday Things. Basic Books. ISBN 978-0-465-05135-9.

- Tsetserukou, Dzmitry; Alena Neviarouskaya (September–October 2010). "iFeel_IM!: augmenting emotions during online communication" (PDF). IEEE Computer Graphics and Applications. 30 (5): 72–80.

- Neviarouskaya, Alena; Helmut Prendinger; Mitsuru Ishizuka (2010). "EmoHeart: Conveying Emotions in Second Life Based on Affect Sensing from Text". Advances in Human-Computer Interaction. 2010: 1–13. doi:10.1155/2010/209801.

- Neviarouskaya, Alena; Helmut Prendinger; Mitsuru Ishizuka (2010). "Recognition of Fine-Grained Emotions from Text: an Approach Based on the Compositionality Principle". In Nishida, T.; Jain, L.; Faucher, C. (eds.). Modeling Machine Emotions for Realizing Intelligence: Foundations and Applications. Smart Innovation, Systems and Technologies. 1. Springer. pp. 179–207. doi:10.1007/978-3-642-12604-8_9. ISBN 978-3-642-12603-1.

- Réhman, Shafiq; Li Liu; Haibo Li (October 2007). "Manifold of Facial Expressions for Tactile Perception" (PDF). 2007 IEEE 9th Workshop on Multimedia Signal Processing (MMSP 2007). pp. 239–242. doi:10.1109/MMSP.2007.4412862. ISBN 978-1-4244-1273-0.

- Réhman, Shafiq; Li Liu (July 2008). "Vibrotactile Rendering of Human Emotions on the Manifold of Facial Expressions" (PDF). Journal of Multimedia. 3 (3): 18–25. CiteSeerX 10.1.1.408.3683. doi:10.4304/jmm.3.3.18-25.

- Lemmens, Paul; F. Crompvoets; D. Brokken; J.V.D. Eerenbeemd; G.-J.D. Vries (2009). "A Body-Conforming Tactile Jacket to Enrich Movie Viewing". World Haptics 2009: Third Joint EuroHaptics Conference and Symposium on Haptic Interfaces for Virtual Environment and Teleoperator Systems. Salt Lake City, USA, March 18–20, 2009. pp. 7–12. doi:10.1109/WHC.2009.4810832. ISBN 978-1-4244-3858-7.

External links


- IEEE Transactions on Haptics (ToH)

- Affective Haptics research group