Device fingerprint

A device fingerprint or machine fingerprint is information collected about the software and hardware of a remote computing device for the purpose of identification. The information is usually assimilated into a brief identifier using a fingerprinting algorithm. A browser fingerprint is information collected specifically by interaction with the web browser of the device.[1]:878[2]:1
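
As an illustration of how collected attributes might be reduced to a brief identifier, the following minimal sketch hashes a canonical serialization of the attributes. The attribute names and values, the choice of SHA-256, and the 16-character truncation are assumptions made for this example; real fingerprinting algorithms differ.

```python
import hashlib
import json


def fingerprint(attributes):
    """Reduce a dictionary of collected attributes to a short hex identifier."""
    # Serialize with sorted keys so that the same attributes always
    # produce the same byte string, regardless of collection order.
    canonical = json.dumps(attributes, sort_keys=True, separators=(",", ":"))
    # Truncating the digest is an arbitrary choice made for brevity.
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


# Hypothetical attribute values, for illustration only.
sample = {
    "user_agent": "ExampleBrowser/1.0",
    "screen": "1920x1080x24",
    "timezone": "UTC+1",
    "fonts": ["Arial", "Courier New"],
}

print(fingerprint(sample))
```

Changing any single attribute yields a different identifier, which is why the choice of attributes matters for both diversity and stability.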

Device fingerprints can be used to fully or partially identify individual devices even when persistent cookies (and zombie cookies) cannot be read or stored in the browser, the client IP address is hidden, or one switches to another browser on the same device.[3] This may allow a service provider to detect and prevent identity theft and credit card fraud,[4]:299[5][6][7] but also to compile long-term records of individuals' browsing histories (and deliver targeted advertising[8]:821[9]:9 or targeted exploits[10]:8[11]:547) even when they are attempting to avoid tracking – raising a major concern for internet privacy advocates.[12]

History

Basic web browser configuration information has long been collected by web analytics services in an effort to measure real human web traffic and discount various forms of click fraud. Since its introduction in the late 1990s, client-side scripting has gradually enabled the collection of an increasing amount of diverse information, with some computer security experts starting to complain about the ease of bulk parameter extraction offered by web browsers as early as 2003.[13]

In 2005, researchers at the University of California, San Diego showed how TCP timestamps could be used to estimate the clock skew of a device, and consequently to remotely obtain a hardware fingerprint of the device.[14]
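
The idea of estimating clock skew from timestamps can be sketched as follows. This is a simplified illustration, not the method of the cited work: it fits an ordinary least-squares line to the observed clock offsets, and the sample data, the 100 Hz timestamp frequency, and the function name are assumptions made for the example.

```python
def estimate_skew_ppm(samples, hz):
    """Estimate remote clock skew in parts per million.

    samples: list of (local_seconds, remote_ticks) pairs, where remote_ticks
             is the timestamp value carried by a packet from the device.
    hz:      assumed frequency of the remote timestamp clock.
    """
    if len(samples) < 2:
        raise ValueError("at least two samples are required")
    t0, ticks0 = samples[0]
    # Elapsed local time since the first sample.
    xs = [t - t0 for t, _ in samples]
    # Offset between elapsed remote time and elapsed local time.
    ys = [(ticks - ticks0) / hz - x for (_, ticks), x in zip(samples, xs)]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("samples must span a non-zero time interval")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    # The slope of the offset over time is the skew.
    return sxy / sxx * 1e6


# Synthetic data: a hypothetical device whose clock runs 50 ppm fast,
# observed every 10 seconds for one hour.
HZ = 100
TRUE_SKEW = 50e-6
synthetic = [(float(t), round(t * (1 + TRUE_SKEW) * HZ)) for t in range(0, 3601, 10)]

print(round(estimate_skew_ppm(synthetic, HZ), 1))
```

Because the skew is a physical property of the device's clock, the estimate can serve as a fingerprint component that does not depend on software configuration.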

In 2010, the Electronic Frontier Foundation launched a website where visitors can test their browser fingerprint.[15] After collecting a sample of 470,161 fingerprints, they measured at least 18.1 bits of entropy available from browser fingerprinting,[16] but that was before the advent of canvas fingerprinting, which is claimed to add another 5.7 bits.

In 2012, Keaton Mowery and Hovav Shacham, researchers at the University of California, San Diego, showed how the HTML5 canvas element could be used to create digital fingerprints of web browsers.[17][18]

In 2013, at least 0.4% of Alexa top 10,000 sites were found to use fingerprinting scripts provided by a few known third parties.[11]:546

In 2014, 5.5% of Alexa top 10,000 sites were found to use canvas fingerprinting scripts served by a total of 20 domains. The overwhelming majority (95%) of the scripts were served by AddThis, which started using canvas fingerprinting in January that year, without the knowledge of some of its clients.[19]:678[20][17][21][5]

In 2015, a feature to protect against browser fingerprinting was introduced in Firefox version 41,[22] but it has since remained experimental and is not enabled by default.[23]

The same year, a feature named Enhanced Tracking Protection was introduced in Firefox version 42 to protect against tracking during private browsing[24] by blocking scripts from third-party domains found in the lists published by the company Disconnect.

At WWDC 2018 Apple announced that Safari on macOS Mojave "presents simplified system information when users browse the web, preventing them from being tracked based on their system configuration."[25]

A 2018 study revealed that only one-third of browser fingerprints in a French database were unique, indicating that browser fingerprinting may become less effective as the number of users increases and web technologies convergently evolve to implement fewer distinguishing features.[26]

In 2019, starting with Firefox version 69, Enhanced Tracking Protection was turned on by default for all users, including during non-private browsing.[27] The feature was first introduced to protect private browsing in 2015 and was then extended to standard browsing as an opt-in feature in 2018.

Diversity and stability

Motivation for the device fingerprint concept stems from the forensic value of human fingerprints.

To distinguish devices uniquely over time through their fingerprints, the fingerprints must be both sufficiently diverse and sufficiently stable. In practice, neither diversity nor stability is fully attainable, and improving one tends to adversely affect the other. For example, incorporating an additional browser setting into the browser fingerprint would usually increase diversity, but it would also reduce stability, because the fingerprint changes whenever a user changes that setting.[2]:11

Entropy is one of several ways to measure diversity.
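
As a worked illustration of entropy as a diversity measure, the sketch below computes the Shannon entropy of a set of observed fingerprints and the surprisal of a single fingerprint. The function names and the toy observations are assumptions made for this example.

```python
import math
from collections import Counter


def shannon_entropy_bits(observations):
    """Shannon entropy, in bits, of the empirical distribution of observations."""
    counts = Counter(observations)
    total = len(observations)
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


def surprisal_bits(probability):
    """Information, in bits, revealed by a fingerprint seen with this probability."""
    return -math.log2(probability)


# Toy data: four visitors, two of whom share the same fingerprint.
observed = ["a", "a", "b", "c"]
print(shannon_entropy_bits(observed))  # 1.5

# A fingerprint shared by one visitor in eight carries 3 bits.
print(surprisal_bits(1 / 8))  # 3.0
```

By the same arithmetic, a fingerprint carrying 18.1 bits is shared on average by about one browser in 2^18.1, or roughly 280,000.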

Sources of identifying information

Applications that are locally installed on a device are allowed to gather a great amount of information about the software and the hardware of the device, often including unique identifiers such as the MAC address and serial numbers assigned to the machine hardware. Indeed, programs that employ digital rights management use this information for the very purpose of uniquely identifying the device.

Even if they are not designed to gather and share identifying information, local applications might unwittingly expose identifying information to the remote parties with which they interact. The most prominent example is that of web browsers, which have been shown to expose diverse and stable information in sufficient quantity to allow remote identification (see § Browser fingerprint).

Diverse and stable information can also be gathered below the application layer, by leveraging the protocols that are used to transmit data. Sorted by OSI model layer, some examples of such protocols are:

- OSI Layer 7: SMB, FTP, HTTP, Telnet, TLS/SSL, DHCP[28]

- OSI Layer 5: SNMP, NetBIOS

- OSI Layer 4: TCP (see TCP/IP stack fingerprinting)

- OSI Layer 3: IPv4, IPv6, ICMP, IEEE 802.11[29]

- OSI Layer 2: CDP[30]

Passive fingerprinting techniques merely require the fingerprinter to observe traffic originating from the target device, while active fingerprinting techniques require the fingerprinter to initiate connections to the target device. Techniques that require interaction with the target device over a connection initiated by the latter are sometimes referred to as semi-passive.[14]

Browser fingerprint

The collection of a large amount of diverse and stable information from web browsers is possible thanks for the most part to client-side scripting languages, which were introduced in the late 1990s.

Browser version

Browsers provide their name and version, together with some compatibility information, in the User-Agent request header.[31][32] Being a statement freely given by the client, it should not be trusted when assessing the client's identity. Instead, the type and version of the browser can be inferred from the observation of quirks in its behavior: for example, the order and number of HTTP header fields are unique to each browser family[33]:257[34]:357 and, most importantly, each browser family and version differs in its implementation of HTML5,[10]:1[33]:257 CSS[35]:58[33]:256 and JavaScript.[11]:547,549-50[36]:2[37][38] Such differences can be remotely tested by using JavaScript. A Hamming distance comparison of parser behaviors has been shown to effectively fingerprint and differentiate a majority of browser versions.[10]:6

| Browser family | Property deletion (of navigator object) | Reassignment (of navigator/screen object) |
|---|---|---|
| Google Chrome | allowed | allowed |
| Mozilla Firefox | ignored | ignored |
| Opera | allowed | allowed |
| Internet Explorer | ignored | ignored |
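
A Hamming distance comparison of behavioral quirks can be sketched as follows. Each browser version is represented by a vector of test outcomes (1 if a quirk is present, 0 otherwise), and an observed vector is matched to the closest known signature. The signature names and values here are hypothetical and chosen only for illustration; they are not measurements of real browsers.

```python
def hamming_distance(a, b):
    """Number of positions at which two equal-length vectors differ."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x != y for x, y in zip(a, b))


def closest_signature(observed, signatures):
    """Return the name of the known signature nearest to the observed vector."""
    return min(signatures, key=lambda name: hamming_distance(observed, signatures[name]))


# Hypothetical quirk signatures: one bit per behavioral test.
SIGNATURES = {
    "family-A 1.0": (1, 1, 0, 0, 1, 0),
    "family-A 2.0": (1, 1, 0, 1, 1, 0),
    "family-B 1.0": (0, 0, 1, 1, 0, 1),
}

# Outcomes observed for an unknown client on the same six tests.
observed = (1, 1, 0, 1, 0, 0)

print(closest_signature(observed, SIGNATURES))  # family-A 2.0
```

Matching by nearest distance rather than exact equality tolerates a few unexpected test outcomes, for instance those caused by an extension or a minor update.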

Browser extensions

A browser's unique combination of extensions or plugins can be added to a fingerprint directly.[11]:545 Extensions may also modify how other browser attributes behave, adding further complexity to the user's fingerprint.[39]:954[40]:688[9]:1131[41]:108 Adobe Flash and Java plugins were widely used to access user information before their deprecation.[34]:3[11]:553[38]

Hardware properties

User agents may provide system hardware information, such as phone model, in the HTTP header.[41]:107[42]:111 Properties of the user's operating system, screen size, screen orientation, and display aspect ratio can also be retrieved by using JavaScript to observe the result of CSS media queries.[35]:59-60

Browsing history

The fingerprinter can determine which sites from a list it provides the browser has previously visited, by querying the list using JavaScript with the CSS selector :visited.[43]:5 Typically, a list of 50 popular websites is sufficient to generate a unique user history profile, as well as provide information about the user's interests.[43]:7,14 However, browsers have since mitigated this risk.[44]
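
To illustrate why a short probe list can yield a distinctive profile, the sketch below encodes the outcome of each probe as one bit. The site names are placeholders and the probing step itself (which runs in the browser) is not shown; only the encoding of its results is sketched.

```python
def history_profile(probe_list, visited):
    """Encode which probed sites were visited as an integer bitmask."""
    bits = 0
    for i, site in enumerate(probe_list):
        if site in visited:
            bits |= 1 << i  # bit i is set when the i-th probed site was visited
    return bits


# Placeholder probe list and probe results.
probes = ["a.example", "b.example", "c.example"]
visited = {"a.example", "c.example"}

print(bin(history_profile(probes, visited)))  # 0b101

# With 50 probed sites there are 2**50 possible profiles.
print(2 ** 50)
```

The number of possible profiles grows exponentially with the length of the probe list, which is consistent with a list of 50 sites being enough to separate most users.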

Font metrics

The letter bounding boxes differ between browsers based on anti-aliasing and font hinting configuration and can be measured by JavaScript.[45]:108

Canvas and WebGL

Canvas fingerprinting uses the HTML5 canvas element, which is used by WebGL to render 2D and 3D graphics in a browser, to gain identifying information about the installed graphics driver, graphics card, or graphics processing unit (GPU). Canvas-based techniques may also be used to identify installed fonts.[42]:110 Furthermore, if the user does not have a GPU, CPU information can be provided to the fingerprinter instead.

A canvas fingerprinting script first draws text with a specified font, size, and background color. The image of the text as rendered by the user's browser is then recovered by the toDataURL Canvas API method. The hashed text-encoded data becomes the user's fingerprint.[19][18]:2-3,6 Canvas fingerprinting methods have been shown to produce 5.7 bits of entropy. Because the technique obtains information about the user's GPU, the information entropy gained is "orthogonal" to the entropy of previous browser fingerprint techniques such as screen resolution and JavaScript capabilities.[18]
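
The final hashing step can be sketched as follows. The drawing and the toDataURL call happen in the browser and are not shown; the sketch assumes the script has already obtained a data URL string, and the two example strings are made-up stand-ins for the output of two different devices. SHA-256 is an arbitrary choice of hash for this illustration.

```python
import hashlib


def canvas_fingerprint(data_url):
    """Hash the text-encoded image data returned for a rendered canvas."""
    header, sep, payload = data_url.partition(",")
    if not sep:
        raise ValueError("not a data URL: missing comma separator")
    # Only the payload after the comma depends on how the image was rendered.
    return hashlib.sha256(payload.encode("ascii")).hexdigest()


# Made-up data URLs standing in for two devices that rendered the same
# text slightly differently.
device_1 = "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAYAAAAfFcSJAAAADUlEQVR4"
device_2 = "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAAAEAAAABCAYAAAAfFcSJAAAADUlEQVR5"

print(canvas_fingerprint(device_1))
print(canvas_fingerprint(device_2))
```

Even a one-character difference in the encoded image produces an unrelated hash, so small rendering differences between devices translate into distinct fingerprints.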

Hardware benchmarking

Benchmark tests can be used to determine whether a user's CPU utilizes AES-NI or Intel Turbo Boost by comparing the CPU time used to execute various simple or cryptographic algorithms.[46]:588

Specialized APIs can also be used, such as the Battery API, which constructs a short-term fingerprint based on the actual battery state of the device,[47]:256 or OscillatorNode, which can be invoked to produce a waveform based on user entropy.[48]:1399

A device's hardware ID, which is a cryptographic hash function specified by the device's vendor, can also be queried to construct a fingerprint.[42]:109,114

Mitigation methods for browser fingerprinting

Offering a simplified fingerprint

Users may attempt to reduce their fingerprintability by selecting a web browser which minimizes availability of identifying information such as browser fonts, device ID, canvas element rendering, WebGL information, and local IP address.[42]:117

As of 2017 Microsoft Edge is considered to be the most fingerprintable browser, followed by Firefox and Google Chrome, Internet Explorer, and Safari.[42]:114 Among mobile browsers, Google Chrome and Opera Mini are most fingerprintable, followed by mobile Firefox, mobile Edge, and mobile Safari.[42]:115

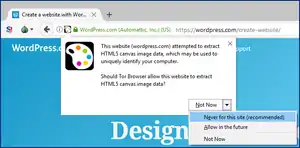

Tor Browser disables fingerprintable features such as the canvas and WebGL APIs and notifies users of fingerprinting attempts.[19]

Offering a spoofed fingerprint

Spoofing some of the information exposed to the fingerprinter (e.g. the user agent) may reduce diversity.[49]:13 The opposite could result if the mismatch between the spoofed information and the real browser information differentiates the user from all the others who do not use such a strategy.[11]:552

Spoofing the information differently at each site visit reduces stability.[8]:820,823

Different browsers on the same machine would usually have different fingerprints, but if both browsers aren't protected against fingerprinting, then the two fingerprints could be identified as originating from the same machine.[3][50]

Blocking scripts

Blindly blocking client-side scripts served from third-party domains, and possibly also first-party domains (e.g. by disabling JavaScript or using NoScript) can sometimes render websites unusable. The preferred approach is to block only third-party domains that seem to track people, either because they're found on a blacklist of tracking domains (the approach followed by most ad blockers) or because the intention of tracking is inferred by past observations (the approach followed by Privacy Badger).[51][20][52][53]
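
The blacklist approach can be sketched as a hostname check. This is a simplified illustration: the blocklist entries are placeholders, and "third party" is approximated here by comparing full hostnames, whereas real tools typically compare registrable domains.

```python
from urllib.parse import urlsplit

# Placeholder blocklist of tracking domains.
BLOCKLIST = {"tracker.example", "ads.example"}


def host_of(url):
    """Lower-cased hostname of a URL, or an empty string if there is none."""
    return (urlsplit(url).hostname or "").lower()


def matches_blocklist(host, blocklist):
    """True if the host is a blocklisted domain or one of its subdomains."""
    return any(host == d or host.endswith("." + d) for d in blocklist)


def should_block(script_url, page_url, blocklist=BLOCKLIST):
    """Block only scripts that are third-party and served from a listed domain."""
    script_host = host_of(script_url)
    # Simplifying assumption: any differing hostname counts as third-party.
    is_third_party = script_host != host_of(page_url)
    return is_third_party and matches_blocklist(script_host, blocklist)


print(should_block("https://cdn.tracker.example/fp.js", "https://news.example/"))  # True
print(should_block("https://news.example/app.js", "https://news.example/"))        # False
```

First-party scripts and third-party scripts from unlisted domains are left alone, which is what keeps most websites usable under this approach.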

Randomizing a fingerprint

The values of certain web browser attributes can be randomized with no visible effect for the user. These attributes include audio and canvas rendering, which can be perturbed with a small amount of random noise. This defeats a fingerprinter that looks for a fingerprint exactly equal to one it has encountered in the past, while the user does not notice the minute random changes. This technique was proposed and evaluated by Nikiforakis[54] in 2015 and by Laperdrix[55] in 2017. Techniques from these two works were introduced in the Brave browser in 2020.[56]
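
The effect of such noise can be sketched on a buffer of pixel values. This is an illustration under stated assumptions, not the algorithm of the cited works or of any browser: each byte is shifted by at most one unit, and the pixel buffer and seeds are made up for the example.

```python
import hashlib
import random


def perturb(pixels, rng):
    """Return a copy of the pixel bytes with each value shifted by -1, 0, or +1."""
    out = bytearray(pixels)
    for i in range(len(out)):
        # Clamp to the valid 0..255 range after adding the noise.
        out[i] = min(255, max(0, out[i] + rng.choice((-1, 0, 1))))
    return bytes(out)


def digest(pixels):
    """Hash of the pixel data, as a fingerprinter might compute it."""
    return hashlib.sha256(pixels).hexdigest()


# Made-up pixel buffer standing in for a rendered canvas.
original = bytes(range(256)) * 4

# Two visits, each with fresh noise (seeded here only for reproducibility).
visit_1 = perturb(original, random.Random(1))
visit_2 = perturb(original, random.Random(2))

print(digest(visit_1) == digest(visit_2))                    # False
print(max(abs(a - b) for a, b in zip(visit_1, original)))    # 1
```

The hashes differ between visits although no pixel changes by more than one unit, which is too small a difference for a user to perceive.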

References

- Laperdrix P, Rudametkin W, Baudry B (May 2016). Beauty and the Beast: Diverting Modern Web Browsers to Build Unique Browser Fingerprints. 2016 IEEE Symposium on Security and Privacy. San Jose CA USA: IEEE. pp. 878–894. doi:10.1109/SP.2016.57. ISBN 978-1-5090-0824-7.

- Eckersley P (2010). "How Unique Is Your Web Browser?". In Atallah MJ, Hopper NJ (eds.). Privacy Enhancing Technologies. Lecture Notes in Computer Science. Springer Berlin Heidelberg. pp. 1–18. ISBN 978-3-642-14527-8.

- Cao, Yinzhi (2017-02-26). "(Cross-)Browser Fingerprinting via OS and Hardware Level Features" (PDF). Archived (PDF) from the original on 2017-03-07. Retrieved 2017-02-28.

- Alaca F, van Oorschot PC (December 2016). Device Fingerprinting for Augmenting Web Authentication: Classification and Analysis of Methods. 32nd Annual Conference on Computer Security. Los Angeles CA USA: Association for Computing Machinery. pp. 289–301. doi:10.1145/2991079.2991091. ISBN 978-1-4503-4771-6.

- Steinberg J (23 July 2014). "You Are Being Tracked Online By A Sneaky New Technology -- Here's What You Need To Know". Forbes. Retrieved 2020-01-30.

- "User confidence takes a Net loss". Infoworld.com. 2005-07-01. Archived from the original on 2015-10-04. Retrieved 2015-10-03.

- "7 Leading Fraud Indicators: Cookies to Null Values". 2016-03-10. Archived from the original on 2016-10-03. Retrieved 2016-07-05.

- Nikiforakis N, Joosen W, Livshits B (May 2015). PriVaricator: Deceiving Fingerprinters with Little White Lies. WWW '15: The 24th International Conference on World Wide Web. Florence Italy: International World Wide Web Conferences Steering Committee. pp. 820–830. doi:10.1145/2736277.2741090. hdl:10044/1/74945. ISBN 978-1-4503-3469-3.

- Acar G, Juarez M, Nikiforakis N, Diaz C, Gürses S, Piessens F, Preneel B (November 2013). FPDetective: Dusting the Web for Fingerprinters. 2013 ACM SIGSAC Conference on Computer & Communications Security. Berlin Germany: Association for Computing Machinery. pp. 1129–1140. doi:10.1145/2508859.2516674. ISBN 978-1-4503-2477-9.

- Abgrall E, Le Traon Y, Monperrus M, Gombault S, Heiderich M, Ribault A (2012-11-20). "XSS-FP: Browser Fingerprinting using HTML Parser Quirks". arXiv:1211.4812 [cs.CR].

- Nikiforakis N, Kapravelos A, Joosen W, Kruegel C, Piessens F, Vigna G (May 2013). Cookieless Monster: Exploring the Ecosystem of Web-Based Device Fingerprinting. 2013 IEEE Symposium on Security and Privacy. Berkeley CA USA: IEEE. doi:10.1109/SP.2013.43. ISBN 978-0-7695-4977-4.

- "EFF's Top 12 Ways to Protect Your Online Privacy | Electronic Frontier Foundation". Eff.org. 2002-04-10. Archived from the original on 2010-02-04. Retrieved 2010-01-28.

- "MSIE clientCaps "isComponentInstalled" and "getComponentVersion" registry information leakage". Archive.cert.uni-stuttgart.de. Archived from the original on 2011-06-12. Retrieved 2010-01-28.

- Kohno; Broido; Claffy. "Remote Physical Device Detection". Cs.washington.edu. Archived from the original on 2010-01-10. Retrieved 2010-01-28.

- "About Panopticlick". eff.org. Retrieved 2018-07-07.

- Eckersley, Peter (17 May 2010). "How Unique Is Your Web Browser?" (PDF). eff.org. Electronic Frontier Foundation. Archived (PDF) from the original on 9 March 2016. Retrieved 13 Apr 2016.

- Angwin J (July 21, 2014). "Meet the Online Tracking Device That is Virtually Impossible to Block". ProPublica. Retrieved 2020-01-30.

- Mowery K, Shacham H (2012), Pixel Perfect: Fingerprinting Canvas in HTML5 (PDF), retrieved 2020-01-21

- Acar G, Eubank C, Englehardt S, Juarez M, Narayanan A, Diaz C (November 2014). The Web Never Forgets: Persistent Tracking Mechanisms in the Wild. 2014 ACM SIGSAC Conference on Computer & Communications Security. Scottsdale AZ USA: Association for Computing Machinery. pp. 674–689. doi:10.1145/2660267.2660347. ISBN 978-1-4503-2957-6.

- Davis W (July 21, 2014). "EFF Says Its Anti-Tracking Tool Blocks New Form Of Digital Fingerprinting". MediaPost. Retrieved July 21, 2014.

- Knibbs K (July 21, 2014). "What You Need to Know About the Sneakiest New Online Tracking Tool". Gizmodo. Retrieved 2020-01-30.

- "meta: tor uplift: privacy.resistFingerprinting". Retrieved 2018-07-06.

- "Firefox's protection against fingerprinting". Retrieved 2018-07-06.

- "Firefox 42.0 release notes".

- "Apple introduces macOS Mojave". Retrieved 2018-07-06.

- Gómez-Boix A, Laperdrix P, Baudry B (April 2018). Hiding in the Crowd: An Analysis of the Effectiveness of Browser Fingerprinting at Large Scale. WWW '18: The Web Conference 2018. Geneva Switzerland: International World Wide Web Conferences Steering Committee. pp. 309–318. doi:10.1145/3178876.3186097. ISBN 978-1-4503-5639-8.

- "Firefox 69.0 release notes".

- "Chatter on the Wire: A look at DHCP traffic" (PDF). Archived (PDF) from the original on 2014-08-11. Retrieved 2010-01-28.

- "Wireless Device Driver Fingerprinting" (PDF). Archived from the original (PDF) on 2009-05-12. Retrieved 2010-01-28.

- "Chatter on the Wire: A look at excessive network traffic and what it can mean to network security" (PDF). Archived from the original (PDF) on 2014-08-28. Retrieved 2010-01-28.

- "User-Agent".

- Aaron Andersen. "History of the browser user-agent string".

- Unger T, Mulazzani M, Frühwirt D, Huber M, Schrittwieser S, Weippl E (September 2013). SHPF: Enhancing HTTP(S) Session Security with Browser Fingerprinting. 2013 International Conference on Availability, Reliability and Security. Regensburg Germany: IEEE. pp. 255–261. doi:10.1109/ARES.2013.33. ISBN 978-0-7695-5008-4.

- Fiore U, Castiglione A, De Santis A, Palmieri F (September 2014). Countering Browser Fingerprinting Techniques: Constructing a Fake Profile with Google Chrome. 17th International Conference on Network-Based Information Systems. Salerno Italy: IEEE. doi:10.1109/NBiS.2014.102. ISBN 978-1-4799-4224-4.

- Takei N, Saito T, Takasu K, Yamada T (Nov 2015). Web Browser Fingerprinting Using Only Cascading Style Sheets. 10th International Conference on Broadband and Wireless Computing, Communication and Applications. Krakow Poland: IEEE. pp. 57–63. doi:10.1109/BWCCA.2015.105. ISBN 978-1-4673-8315-8.

- Mulazzani M, Reschl P, Huber M, Leithner M, Schrittwieser S, Weippl E (2013), Fast and Reliable Browser Identification with JavaScript Engine Fingerprinting (PDF), SBA Research, retrieved 2020-01-21

- Mowery K, Bogenreif D, Yilek S, Shacham H (2011), Fingerprinting Information in JavaScript Implementations (PDF), retrieved 2020-01-21

- Upathilake R, Li Y, Matrawy A (July 2015). A classification of web browser fingerprinting techniques. 7th International Conference on New Technologies, Mobility and Security. Paris France: IEEE. doi:10.1109/NTMS.2015.7266460. ISBN 978-1-4799-8784-9.

- Starov O, Nikiforakis N (May 2017). XHOUND: Quantifying the Fingerprintability of Browser Extensions. 2017 IEEE Symposium on Security and Privacy. San Jose CA USA: IEEE. pp. 941–956. doi:10.1109/SP.2017.18. ISBN 978-1-5090-5533-3.

- Sanchez-Rola I, Santos I, Balzarotti D (August 2017). Extension Breakdown: Security Analysis of Browsers Extension Resources Control Policies. 26th USENIX Security Symposium. Vancouver BC Canada: USENIX Association. pp. 679–694. ISBN 978-1-931971-40-9. Retrieved 2020-01-21.

- Kaur N, Azam S, Kannoorpatti K, Yeo KC, Shanmugam B (January 2017). Browser Fingerprinting as user tracking technology. 11th International Conference on Intelligent Systems and Control. Coimbatore India: IEEE. doi:10.1109/ISCO.2017.7855963. ISBN 978-1-5090-2717-0.

- Al-Fannah NM, Li W (2017). "Not All Browsers are Created Equal: Comparing Web Browser Fingerprintability". In Obana S, Chida K (eds.). Advances in Information and Computer Security. Lecture Notes in Computer Science. Springer International Publishing. pp. 105–120. arXiv:1703.05066. ISBN 978-3-319-64200-0.

- Olejnik L, Castelluccia C, Janc A (July 2012). Why Johnny Can't Browse in Peace: On the Uniqueness of Web Browsing History Patterns. 5th Workshop on Hot Topics in Privacy Enhancing Technologies. Vigo Spain: INRIA. Retrieved 2020-01-21.

- "Privacy and the :visited selector". MDN Web Docs. https://developer.mozilla.org/en-US/docs/Web/CSS/Privacy_and_the_:visited_selector

- Fifield D, Egelman S (2015). "Fingerprinting Web Users Through Font Metrics". In Böhme R, Okamoto T (eds.). Financial Cryptography and Data Security. Lecture Notes in Computer Science. 8975. Springer Berlin Heidelberg. pp. 107–124. doi:10.1007/978-3-662-47854-7_7. ISBN 978-3-662-47854-7.

- Saito T, Yasuda K, Ishikawa T, Hosoi R, Takahashi K, Chen Y, Zalasiński M (July 2016). Estimating CPU Features by Browser Fingerprinting. 10th International Conference on Innovative Mobile and Internet Services in Ubiquitous Computing. Fukuoka Japan: IEEE. pp. 587–592. doi:10.1109/IMIS.2016.108. ISBN 978-1-5090-0984-8.

- Olejnik L, Acar G, Castelluccia C, Diaz C (2016). "The Leaking Battery". In Garcia-Alfaro J, Navarro-Arribas G, Aldini A, Martinelli F, Suri N (eds.). Data Privacy Management, and Security Assurance. DPM 2015, QASA 2015. Lecture Notes in Computer Science. 9481. Springer, Cham. doi:10.1007/978-3-319-29883-2_18. ISBN 978-3-319-29883-2.

- Englehardt S, Narayanan A (October 2016). Online Tracking: A 1-million-site Measurement and Analysis. 2016 ACM SIGSAC Conference on Computer & Communications Security. Vienna Austria: Association for Computing Machinery. pp. 1388–1401. doi:10.1145/2976749.2978313. ISBN 978-1-4503-4139-4.

- Yen TF, Xie Y, Yu F, Yu R, Abadi M (February 2012). Host Fingerprinting and Tracking on the Web: Privacy and Security Implications (PDF). The 19th Annual Network and Distributed System Security Symposium. San Diego CA USA: Internet Society. Retrieved 2020-01-21.

- Newman, Drew (2007). "The Limitations of Fingerprint Identifications". Criminal Justice. 1 (36): 36–41.

- Merzdovnik G, Huber M, Buhov D, Nikiforakis N, Neuner S, Schmiedecker M, Weippl E (April 2017). Block Me If You Can: A Large-Scale Study of Tracker-Blocking Tools (PDF). 2017 IEEE European Symposium on Security and Privacy. Paris France: IEEE. pp. 319–333. doi:10.1109/EuroSP.2017.26. ISBN 978-1-5090-5762-7.

- Kirk J (July 25, 2014). "'Canvas fingerprinting' online tracking is sneaky but easy to halt". PC World. Retrieved August 9, 2014.

- Smith, Chris. "Adblock Plus: We can stop canvas fingerprinting, the 'unstoppable' new browser tracking technique". BGR. PMC. Archived from the original on July 28, 2014.

- Nikiforakis, Nick; Joosen, Wouter; Livshits, Benjamin (18 May 2015). "PriVaricator: Deceiving Fingerprinters with Little White Lies". Proceedings of the 24th International Conference on World Wide Web: 820–830. doi:10.1145/2736277.2741090. hdl:10044/1/74945. S2CID 9954234.

- Laperdrix, Pierre; Baudry, Benoit; Mishra, Vikas (2017). "FPRandom: Randomizing Core Browser Objects to Break Advanced Device Fingerprinting Techniques" (PDF). Engineering Secure Software and Systems. Lecture Notes in Computer Science. 10379: 97–114. doi:10.1007/978-3-319-62105-0_7. ISBN 978-3-319-62104-3.

- "What's Brave Done For My Privacy Lately? Episode #3: Fingerprint Randomization".

Further reading

- Angwin, Julia; Valentino-DeVries, Jennifer (2010-11-30). "Race Is On to 'Fingerprint' Phones, PCs". Wall Street Journal. ISSN 0099-9660. Retrieved 2018-07-10.

- Segal, Ory; Fridman, Aharon; Shuster, Elad (2017-06-05). "Passive Fingerprinting of HTTP/2 Clients" (PDF). Akamai. Retrieved 2018-07-10.

External links

- Panopticlick, by the Electronic Frontier Foundation, gathers some elements of a browser's device fingerprint and estimates how identifiable it makes the user

- Am I Unique, by INRIA and INSA Rennes, implements fingerprinting techniques including collecting information through WebGL.

- Partial database of websites that have used canvas fingerprinting