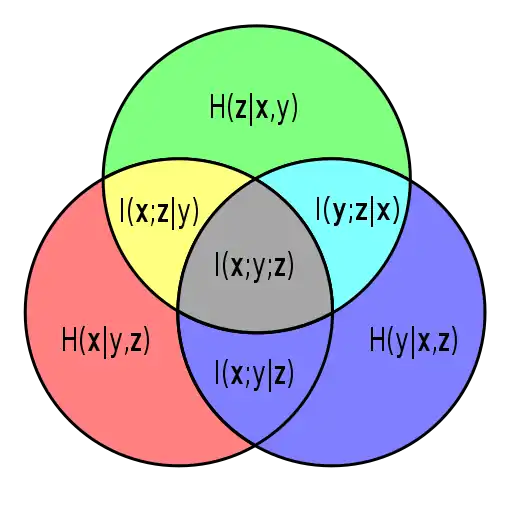

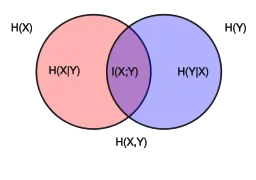

Information diagram

An information diagram is a type of Venn diagram used in information theory to illustrate relationships among Shannon's basic measures of information: entropy, joint entropy, conditional entropy and mutual information.[1][2] Information diagrams are a useful pedagogical tool for teaching and learning about these basic measures, but using them carries some non-trivial implications. For example, Shannon's entropy in the context of an information diagram must be taken as a signed measure, since with three or more variables the region shared by all of them can represent a negative quantity (see the article Information theory and measure theory for more information). Information diagrams have also been applied to specific problems, such as displaying the information-theoretic similarity between sets of ontological terms.[3]
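The regions of an information diagram can be checked numerically. The following is a minimal sketch, not taken from the cited references: the helper `entropy` and both example distributions are hypothetical, chosen only for illustration. It computes the three regions of a two-variable diagram from entropies, then shows that the central region of a three-variable diagram can be negative when Z is the exclusive-or of two independent fair bits.

```python
from collections import defaultdict
from itertools import product
from math import log2


def entropy(joint, keep):
    """Shannon entropy in bits of the marginal over the positions in `keep`.

    `joint` maps outcome tuples to probabilities (hypothetical helper).
    """
    marginal = defaultdict(float)
    for outcome, p in joint.items():
        marginal[tuple(outcome[i] for i in keep)] += p
    return -sum(p * log2(p) for p in marginal.values() if p > 0)


# Two-variable diagram: an illustrative joint distribution over (X, Y).
joint_xy = {(0, 0): 0.5, (0, 1): 0.25, (1, 1): 0.25}
h_x = entropy(joint_xy, [0])
h_y = entropy(joint_xy, [1])
h_xy = entropy(joint_xy, [0, 1])

h_x_given_y = h_xy - h_y      # part of the X circle outside Y
h_y_given_x = h_xy - h_x      # part of the Y circle outside X
i_xy = h_x + h_y - h_xy       # overlap of the two circles

# The three disjoint regions together make up the joint entropy.
regions_total = h_x_given_y + i_xy + h_y_given_x

# Three-variable diagram: X, Y independent fair bits, Z = X XOR Y.
joint_xyz = {(x, y, x ^ y): 0.25 for x, y in product((0, 1), repeat=2)}
singles = sum(entropy(joint_xyz, [i]) for i in range(3))
pairs = sum(entropy(joint_xyz, list(pair)) for pair in [(0, 1), (0, 2), (1, 2)])
triple = entropy(joint_xyz, [0, 1, 2])

# Inclusion-exclusion gives the region common to all three circles.
central_region = singles - pairs + triple

print(f"H(X|Y) = {h_x_given_y:.3f}, I(X;Y) = {i_xy:.3f}, H(Y|X) = {h_y_given_x:.3f}")
print(f"central region for the XOR example = {central_region:.3f} bits")
```

In the two-variable case every region is non-negative, so the diagram behaves like an ordinary Venn diagram of areas. In the exclusive-or example the central region comes out negative, which is why the diagram has to be read as depicting a signed measure.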

References

- Reza, Fazlollah. An Introduction to Information Theory. New York: McGraw-Hill, 1961. New York: Dover, 1994. ISBN 0-486-68210-2.

- Yeung, R. W. A First Course in Information Theory. Norwell, MA/New York: Kluwer/Plenum, 2002.

- Gardeux, Vincent; Halonen, H.; Jackson, D.; Martinez, F. D.; Lussier, Y. A. (1 Jun 2015). "Towards a PBMC 'virogram assay' for precision medicine: Concordance between ex vivo and in vivo viral infection transcriptomes". Journal of Biomedical Informatics. 1: 94–103. doi:10.1016/j.jbi.2015.03.003. PMC 4951181. PMID 25797143.