Moral Machine

Moral Machine is an online platform, developed by Iyad Rahwan's Scalable Cooperation group at the Massachusetts Institute of Technology, that generates moral dilemmas and collects data on the decisions people make when forced to choose between two destructive outcomes.[1][2] The platform was conceived by Iyad Rahwan and social psychologists Azim Shariff and Jean-François Bonnefon[3] ahead of the publication of their article on the ethics of self-driving cars.[4] The key contributors to building the platform were MIT Media Lab graduate students Edmond Awad and Sohan Dsouza.

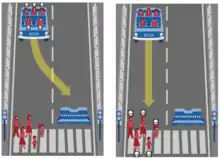

The scenarios presented are often variations of the trolley problem, and the data collected is intended for further research on the decisions that machine intelligence will have to make in the future.[5][6][7][8][9][10] For example, as artificial intelligence plays an increasingly significant role in autonomous driving technology, research projects such as Moral Machine help to find solutions for the difficult life-and-death decisions that self-driving vehicles will face.[11]

Analysis of the data collected through Moral Machine showed broad differences in relative preferences among different countries, and correlations between these preferences and various national metrics.[12]

References

- "Driverless cars face a moral dilemma: Who lives and who dies?". NBC News. Retrieved 2017-02-16.

- Brogan, Jacob (2016-08-11). "Should a Self-Driving Car Kill Two Jaywalkers or One Law-Abiding Citizen?". Slate. ISSN 1091-2339. Retrieved 2017-02-16.

- Awad, Edmond (2018-10-24). "Inside the Moral Machine". Behavioural and Social Sciences at Nature Research. Retrieved 2019-07-04.

- Bonnefon, Jean-François; Shariff, Azim; Rahwan, Iyad (2016-06-24). "The social dilemma of autonomous vehicles". Science. 352 (6293): 1573–1576. arXiv:1510.03346. Bibcode:2016Sci...352.1573B. doi:10.1126/science.aaf2654. ISSN 0036-8075. PMID 27339987.

- "Moral Machine | MIT Media Lab". www.media.mit.edu. Archived from the original on 2016-11-30. Retrieved 2017-02-16.

- "MIT Seeks 'Moral' to the Story of Self-Driving Cars". VOA. Retrieved 2017-02-16.

- "Moral Machine". Moral Machine. Retrieved 2017-02-16.

- Clark, Bryan (2017-01-16). "MIT's 'Moral Machine' wants you to decide who dies in a self-driving car accident". The Next Web. Retrieved 2017-02-16.

- "MIT Game Asks Who Driverless Cars Should Kill". Popular Science. Retrieved 2017-02-16.

- Constine, Josh. "Play this killer self-driving car ethics game". TechCrunch. Retrieved 2017-02-16.

- Chopra, Ajay. "What's Taking So Long for Driverless Cars to Go Mainstream?". Fortune. Retrieved 2017-08-01.

- Awad, Edmond; Dsouza, Sohan; Kim, Richard; Schulz, Jonathan; Henrich, Joseph; Shariff, Azim; Bonnefon, Jean-François; Rahwan, Iyad (2018-10-24). "The Moral Machine experiment". Nature. 563 (7729): 59–64. Bibcode:2018Natur.563...59A. doi:10.1038/s41586-018-0637-6. hdl:10871/39187. PMID 30356211.