ProActive

ProActive Parallel Suite is open-source software for enterprise workload orchestration, part of the OW2 community. Its workflow model lets users define a set of executables and scripts, written in any scripting language, together with their dependencies, so that ProActive Parallel Suite can schedule and orchestrate their execution while optimising the use of computational resources.

| Developer(s) | ActiveEon, Inria, OW2 Consortium |
|---|---|
| Stable release | 10.0 (F-Zero) / July 12, 2019 |
| Written in | Java |
| Operating system | Cross-platform |
| Type | Job scheduler |
| License | AGPL |
| Website | www |

ProActive Parallel Suite is built on ProActive, a Java framework based on "active objects", to optimise task distribution and fault tolerance.

ProActive Parallel Suite key features

- Workflows ease task parallelization (Java, scripts, or native executables), running them on resources matching various constraints (like GPU acceleration, library or data locality).

- Web interfaces are provided to design and execute job workflows and to manage computing resources. A RESTful API provides interoperability with enterprise applications.

- Computational resources (cloud, clusters, virtualized infrastructures, desktop machines) can be federated into a single virtual infrastructure, which provides auto-scaling and simplifies resource management strategies.

- Interoperability is provided with heterogeneous workflows, where tasks can run on various platforms, including Windows, Mac and Linux.

ProActive Java framework and Programming model

The model was created by Denis Caromel, professor at the University of Nice Sophia Antipolis.[1] Several extensions of the model were later made by members of the OASIS team at INRIA.[2] The book A Theory of Distributed Objects presents the ASP calculus, which formalizes ProActive features, and provides formal semantics for the calculus, together with properties of ProActive program execution.[3]

Active objects

Active objects are the basic units of activity and distribution used for building concurrent applications using ProActive. An active object runs with its own thread. This thread only executes the methods invoked on this active object by other active objects, and those of the passive objects of the subsystem that belongs to this active object. With ProActive, the programmer does not have to explicitly manipulate Thread objects, unlike in standard Java.

Active objects can be created on any of the hosts involved in the computation. Once an active object is created, its activity (the fact that it runs with its own thread) and its location (local or remote) are perfectly transparent. Any active object can be manipulated as if it were a passive instance of the same class.

An active object is composed of two objects: a body, and a standard Java object. The body is not visible from the outside of the active object.

The body is responsible for receiving calls (or requests) on the active object and storing them in a queue of pending calls. It executes these calls in an order specified by a synchronization policy. If a synchronization policy is not specified, calls are managed in a "First in, first out" (FIFO) manner.
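The body's receive-and-serve cycle can be illustrated with a small plain-Java sketch. This is not ProActive's implementation. `MiniBody`, `Request` and their methods are hypothetical names. A `LinkedBlockingQueue` served by one dedicated thread stands in for the body's queue of pending calls under the default FIFO policy.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Illustrative sketch only: MiniBody and Request are hypothetical names, not ProActive classes.
class MiniBodyDemo {

    // A reified call: a method name (kept for tracing) plus the work to perform.
    record Request(String methodName, Runnable action) {}

    static class MiniBody {
        private final BlockingQueue<Request> pending = new LinkedBlockingQueue<>();
        private final Thread activity;
        private boolean running = true; // read and written only by the activity thread

        MiniBody(String name) {
            // One thread per active object; it only serves requests taken from the queue.
            activity = new Thread(this::serveLoop, name);
            activity.start();
        }

        // Called from other subsystems: store the request and return immediately.
        void receive(Request request) {
            pending.add(request);
        }

        // Default policy: serve the oldest pending request first (FIFO), one at a time.
        private void serveLoop() {
            try {
                while (running) {
                    pending.take().action().run();
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }

        // Queue a last request that ends the loop, then wait for the activity thread to finish.
        void terminate() {
            pending.add(new Request("terminate", () -> running = false));
            try {
                activity.join();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        }
    }

    static List<String> runDemo() {
        List<String> served = new ArrayList<>(); // only the activity thread writes to it
        MiniBody body = new MiniBody("active-object-1");
        for (String method : List.of("first", "second", "third")) {
            body.receive(new Request(method, () -> served.add(method)));
        }
        body.terminate(); // join() makes the list contents visible to this thread
        return served;
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints [first, second, third]
    }
}
```

A single thread takes requests one at a time, so the three calls are served in the order they arrived.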

The thread of an active object then chooses a method in the queue of pending requests and executes it. No parallelism is provided inside an active object; this is an important decision in ProActive's design, enabling the use of "pre-post" conditions and class invariants.
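A synchronization policy other than FIFO can be sketched in the same spirit. In this hypothetical example, none of the names come from ProActive. A one-slot buffer attaches a precondition to each reified call, and the service loop picks the oldest pending request whose precondition holds. To keep the sketch short, requests are served on the caller's thread rather than on a separate activity thread.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.BooleanSupplier;

// Illustrative sketch of a non-FIFO service policy; all names are hypothetical, not ProActive API.
// For brevity the requests are served on the calling thread instead of a dedicated activity thread.
class GuardedServeDemo {

    // A pending request: a guard (precondition) and the action that performs the call.
    record Request(String name, BooleanSupplier guard, Runnable action) {}

    private static final int CAPACITY = 1;
    private final List<Request> pending = new ArrayList<>();
    private final ArrayDeque<Integer> buffer = new ArrayDeque<>();
    final List<String> served = new ArrayList<>();
    final List<Integer> results = new ArrayList<>();

    // Reified put call: may only be served while the buffer is not full.
    void put(int value) {
        pending.add(new Request("put(" + value + ")",
                () -> buffer.size() < CAPACITY,
                () -> buffer.addLast(value)));
    }

    // Reified get call: may only be served while the buffer is not empty.
    void get() {
        pending.add(new Request("get",
                () -> !buffer.isEmpty(),
                () -> results.add(buffer.removeFirst())));
    }

    // Serve the oldest request whose guard holds. Requests run one at a time, so no other
    // method can invalidate the guard between the check and the execution.
    boolean serveOldestEligible() {
        Iterator<Request> it = pending.iterator();
        while (it.hasNext()) {
            Request request = it.next();
            if (request.guard().getAsBoolean()) {
                it.remove();
                served.add(request.name());
                request.action().run();
                return true;
            }
        }
        return false; // nothing eligible; a real body would wait for new requests
    }

    static List<String> runDemo() {
        GuardedServeDemo ao = new GuardedServeDemo();
        ao.get();   // arrives first, but cannot be served while the buffer is empty
        ao.put(1);
        ao.put(2);
        ao.get();
        while (ao.serveOldestEligible()) {
            // keep serving until no pending request is eligible
        }
        return ao.served;
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints [put(1), get, put(2), get]
    }
}
```

The first `get` stays in the queue until a `put` has been served. Only one request executes at a time, so a precondition checked before a request starts cannot be broken by another method of the same object while that request runs.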

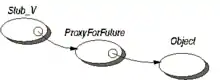

On the side of the subsystem that sends a call to an active object, the active object is represented by a proxy. The proxy generates future objects to represent future values, transforms calls into Request objects (in metaobject terms, this is a reification) and performs deep copies of passive objects passed as parameters.
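The caller-side proxy can be approximated with the JDK's dynamic proxies. This is a sketch under stated assumptions, not ProActive's stub generation. `java.lang.reflect.Proxy` replaces ProActive's generated classes. A single-thread `ExecutorService` plays the active object's thread. `Greeter`, `Greeting` and `activate` are hypothetical. Each call becomes a queued task, and the proxy handles it by result type:

- A `void` method returns at once.
- A method whose result type is an interface returns a second proxy that acts as the future.
- Any other result type (here an `int`) cannot be replaced by a proxy in this sketch, so that call is made synchronously.

Deep copying of parameters is left out.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Method;
import java.lang.reflect.Proxy;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Illustrative sketch only: java.lang.reflect.Proxy stands in for ProActive's generated
// stubs and proxies, and Greeter, Greeting and activate(...) are hypothetical names.
class ActiveProxyDemo {

    interface Greeting { String text(); }

    interface Greeter {
        void log(String message);    // void result: one-way asynchronous request
        Greeting greet(String name); // interface result: asynchronous request with a future
        int count();                 // primitive result: no placeholder in this sketch, so synchronous
    }

    // The passive object wrapped by the active object.
    static class PassiveGreeter implements Greeter {
        private int calls;
        public void log(String message) { calls++; }
        public Greeting greet(String name) { calls++; return () -> "Hello, " + name; }
        public int count() { return calls; }
    }

    // Every call on the returned proxy is reified and queued for the single activity thread.
    static <T> T activate(Class<T> type, T target, ExecutorService activity) {
        InvocationHandler handler = (proxy, method, args) -> {
            CompletableFuture<Object> reply =
                    CompletableFuture.supplyAsync(() -> call(method, target, args), activity);
            Class<?> returnType = method.getReturnType();
            if (returnType == void.class) {
                return null;                           // one-way: nothing to wait for
            }
            if (returnType.isInterface()) {
                return futureProxy(returnType, reply); // placeholder returned at once
            }
            return reply.join();                       // synchronous: block for the reply
        };
        return type.cast(Proxy.newProxyInstance(type.getClassLoader(), new Class<?>[] {type}, handler));
    }

    // The future only forwards calls: each one first waits for the reply (wait-by-necessity).
    static Object futureProxy(Class<?> type, CompletableFuture<Object> reply) {
        InvocationHandler waitByNecessity = (proxy, method, args) -> call(method, reply.join(), args);
        return Proxy.newProxyInstance(type.getClassLoader(), new Class<?>[] {type}, waitByNecessity);
    }

    static Object call(Method method, Object target, Object[] args) {
        try {
            return method.invoke(target, args);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }

    static String runDemo() {
        ExecutorService activity = Executors.newSingleThreadExecutor();
        try {
            Greeter greeter = activate(Greeter.class, new PassiveGreeter(), activity);
            greeter.log("starting");                  // returns immediately
            Greeting greeting = greeter.greet("Ada"); // returns a future immediately
            int calls = greeter.count();              // synchronous: served after the two calls above
            return greeting.text() + " (" + calls + " calls served)";
        } finally {
            activity.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints Hello, Ada (2 calls served)
    }
}
```

The future here is itself only a proxy forwarding to a value that arrives later. This mirrors the point that a future is not active.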

Active object basis

ProActive is a library designed for developing applications in the model introduced by Eiffel//, a parallel extension of the Eiffel programming language.

In this model, the application is structured in subsystems. There is one active object (and therefore one thread) for each subsystem, and one subsystem for each active object (or thread). Each subsystem is thus composed of one active object and any number of passive objects—possibly no passive objects. The thread of one subsystem only executes methods in the objects of this subsystem. There are no "shared passive objects" between subsystems.

These features affect the application's topology. Of all the objects that make up a subsystem (the active object and the passive objects), only the active object is known to objects outside the subsystem. All objects, both active and passive, may hold references to active objects. If an object o1 holds a reference to a passive object o2, then o1 and o2 are part of the same subsystem.

This also has consequences for the semantics of message passing between subsystems. When an object in a subsystem calls a method on an active object, the parameters of the call may be references to passive objects of that subsystem, which would lead to shared passive objects. This is why passive objects passed as parameters of calls on active objects are always passed by deep copy. Active objects, on the other hand, are always passed by reference. Symmetrically, the same applies to objects returned from methods called on active objects.
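The copy semantics for passive parameters can be illustrated with standard Java serialization. Serialization is an assumed copying mechanism chosen for this example, not a description of ProActive's internals. The names `Order` and `deepCopy` are hypothetical. After the copy, a change made in the caller's subsystem does not reach the callee's copy, so no passive object is shared.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.ObjectInputStream;
import java.io.ObjectOutputStream;
import java.io.Serializable;
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch: Java serialization is used here as one way to deep-copy a passive
// parameter; it is an assumption for the example, not ProActive's internal copy mechanism.
class DeepCopyDemo {

    // A passive object graph: an order that holds a mutable list of items.
    static class Order implements Serializable {
        private static final long serialVersionUID = 1L;
        final List<String> items = new ArrayList<>();
    }

    // Serialize then deserialize: the result shares no mutable state with the original.
    @SuppressWarnings("unchecked")
    static <T extends Serializable> T deepCopy(T original) {
        try {
            ByteArrayOutputStream bytes = new ByteArrayOutputStream();
            try (ObjectOutputStream out = new ObjectOutputStream(bytes)) {
                out.writeObject(original);
            }
            try (ObjectInputStream in = new ObjectInputStream(new ByteArrayInputStream(bytes.toByteArray()))) {
                return (T) in.readObject();
            }
        } catch (IOException | ClassNotFoundException e) {
            throw new IllegalStateException("parameter could not be copied", e);
        }
    }

    static List<String> runDemo() {
        Order callerCopy = new Order();
        callerCopy.items.add("book");
        Order calleeCopy = deepCopy(callerCopy); // what the callee's subsystem would receive
        callerCopy.items.add("pen");             // a later change in the caller's subsystem...
        return calleeCopy.items;                 // ...is not visible to the callee
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints [book]
    }
}
```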

Thanks to the concepts of asynchronous calls, futures, and no data sharing, an application written with ProActive doesn't need any structural change—actually, hardly any change at all—whether it runs in a sequential, multi-threaded, or distributed environment.

Asynchronous calls and futures

Whenever possible, a method call on an active object is reified as an asynchronous request. If not possible, the call is synchronous, and blocks until the reply is received. If the request is asynchronous, it immediately returns a future object.

The future object acts as a placeholder for the result of the not-yet-performed method invocation. As a consequence, the calling thread can continue executing its code, as long as it does not need to invoke methods on the returned object. If the need arises, the calling thread is automatically blocked if the result of the method invocation is not yet available. Although a future object has a structure similar to that of an active object, it is not active: it only has a stub and a proxy.

Code example

The code excerpt below highlights the notion of future objects. Suppose a user calls a method foo and a method bar on an active object a; foo returns void and bar returns an object of class V:

    // a one-way typed asynchronous communication towards the (remote) AO a:
    // a request is sent to a
    a.foo(param);

    // a typed asynchronous communication with result:
    // v is first an awaited future, to be transparently filled up after
    // service of the request and reply
    V v = a.bar(param);
    ...
    // use of the result of an asynchronous call:
    // if v is still an awaited future, it triggers an automatic
    // wait (wait-by-necessity)
    v.gee(param);

When foo is called on an active object a, it returns immediately (as the current thread cannot execute methods in the other subsystem). Similarly, when bar is called on a, it returns immediately but the result v can't be computed yet. A future object, which is a placeholder for the result of the method invocation, is returned. From the point of view of the caller subsystem, there is no difference between the future object and the object that would have been returned if the same call had been issued onto a passive object.

After both methods have returned, the calling thread continues executing its code as if the calls had actually been performed. The role of the future mechanism is to block the caller thread when the gee method is called on v and the result has not yet been set; this inter-object synchronization policy is known as wait-by-necessity.
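The same sequence can be replayed with standard Java futures as a rough analogue. The difference is that `CompletableFuture<V>` is explicit, so the wait-by-necessity point has to be written as `join()`. A ProActive future, by contrast, is transparent and has the type `V` itself. `V`, `gee` and the single-thread executor standing in for the active object `a` are assumptions made for the example.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Illustrative analogue of the foo/bar/gee excerpt using standard Java futures. Unlike ProActive,
// the future here is explicit (CompletableFuture<V>), so the wait is written as join().
class WaitByNecessityDemo {

    // Hypothetical stand-in for the result class V in the excerpt.
    record V(String value) {
        String gee(String param) { return value + "/" + param; }
    }

    static String runDemo() {
        // Hypothetical stand-in for the active object a: one thread serving requests in order.
        ExecutorService activeObjectThread = Executors.newSingleThreadExecutor();
        try {
            String param = "p";
            // a.foo(param): one-way request, nothing to wait for
            activeObjectThread.execute(() -> System.out.println("foo served with " + param));
            // a.bar(param): asynchronous request returning a placeholder for a V
            CompletableFuture<V> v =
                    CompletableFuture.supplyAsync(() -> new V("bar(" + param + ")"), activeObjectThread);
            // ... the caller keeps running here without waiting ...
            // v.gee(param): the caller blocks only now, if the reply has not arrived yet
            return v.join().gee(param);
        } finally {
            activeObjectThread.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(runDemo()); // prints bar(p)/p
    }
}
```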


References

- Caromel, Denis (September 1993). "Towards a Method of Object-Oriented Concurrent Programming". Communications of the ACM. 36 (9): 90–102. doi:10.1145/162685.162711.

- Baduel, Laurent; Baude, Françoise; Caromel, Denis; Contes, Arnaud; Huet, Fabrice; Morel, Matthieu; Quilici, Romain (January 2006). "Programming, Composing, Deploying for the Grid". In Cunha, José C.; Rana, Omer F. (eds.). Grid Computing: Software Environments and Tools. Springer-Verlag. pp. 205–229. CiteSeerX 10.1.1.58.7806. doi:10.1007/1-84628-339-6_9. ISBN 978-1-85233-998-2.

- Caromel, Denis; Henrio, Ludovic (2005). A Theory of Distributed Objects: asynchrony, mobility, groups, components. Berlin: Springer. ISBN 978-3-540-20866-2. LCCN 2005923024.

Further reading

- Ranaldo, N.; Tretola, G.; Zimeo, E. (April 14–18, 2008). Scheduling ProActive activities with an XPDL-based workflow engine. Parallel and Distributed Processing. Miami: IEEE. pp. 1–8. doi:10.1109/IPDPS.2008.4536336. ISBN 978-1-4244-1693-6. ISSN 1530-2075.

- Sun, Hailong; Zhu, Yanmin; Hu, Chunming; Huai, Jinpeng; Liu, Yunhao; Li, Jianxin (2005). "Early Experience of Remote and Hot Service Deployment with Trustworthiness in CROWN Grid". In Cao, Jiannong; Nejdl, Wolfgang; Xu, Ming (eds.). Advanced Parallel Processing Technologies. Lecture Notes in Computer Science. 3756. Berlin: Springer. pp. 301–312. doi:10.1007/11573937_33. ISBN 978-3-540-29639-3.

- Quéma, Vivien; Balter, Roland; Bellissard, Luc; Féliot, David; Freyssinet, André; Lacourte, Serge (2004). "Asynchronous, Hierarchical, and Scalable Deployment of Component-Based Applications". In Emmerich, Wolfgang; Wolf, Alexander L. (eds.). Component Deployment. Lecture Notes in Computer Science. 3083. Berlin: Springer. pp. 50–64. doi:10.1007/978-3-540-24848-4_4. ISBN 978-3-540-22059-6.

- ProActive-CLIF-Fractal receive OW2 award 2012

- Software to unlock the power of Grid (ICT Results)

- ActiveEon et MetaQuant renforcent leur partenariat sur le Cloud ProActive [ActiveEon and MetaQuant strengthen their partnership on the ProActive Cloud] (in French)