Social intuitionism

In moral psychology, social intuitionism is a model that proposes that moral positions are often non-verbal and behavioral.[1] The model is often grounded in "moral dumbfounding", in which people have strong moral reactions but fail to establish any kind of rational principle to explain their reaction.[2]

Overview

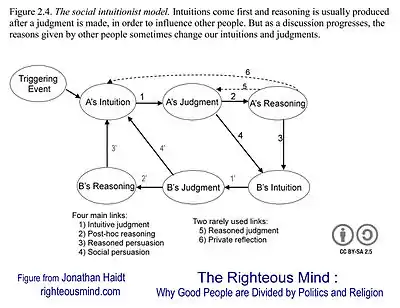

Social intuitionism makes four main claims about moral positions, namely that they are (1) primarily intuitive ("intuitions come first"), (2) rationalized, justified, or otherwise explained after the fact, (3) taken mainly to influence other people, and (4) often influenced and sometimes changed by discussing such positions with others.[3]

This model diverges from earlier rationalist theories of morality, such as Lawrence Kohlberg's stage theory of moral reasoning.[4] Inspired in part by Antonio Damasio's somatic marker hypothesis, Jonathan Haidt's (2001) social intuitionist model[1] de-emphasized the role of reasoning in reaching moral conclusions. Haidt asserts that moral judgment arises primarily from intuition, with reasoning playing a smaller role in most of our moral decision-making. On this view, conscious thought processes serve as a kind of post hoc justification of our decisions.

His main evidence comes from studies of "moral dumbfounding"[5][6] where people have strong moral reactions but fail to establish any kind of rational principle to explain their reaction.[7] An example situation in which moral intuitions are activated is as follows: Imagine that a brother and sister sleep together once. No one else knows, no harm befalls either one, and both feel it brought them closer as siblings. Most people imagining this incest scenario have a very strong negative reaction, yet cannot explain why.[8] Referring to earlier studies by Howard Margolis[9] and others, Haidt suggests that we have unconscious intuitive heuristics which generate our reactions to morally charged situations and underlie our moral behavior. He suggests that when people explain their moral positions, they often miss, if not hide, the core premises and processes that actually led to those conclusions.[10]

Haidt's model also states that moral reasoning is more likely to be interpersonal than private, reflecting social motives (reputation, alliance-building) rather than abstract principles. He does grant that interpersonal discussion (and, on very rare occasions, private reflection) can activate new intuitions which will then be carried forward into future judgments.

Reasons to doubt the role of cognition

Haidt (2001) lists four reasons to doubt the cognitive primacy model championed by Kohlberg and others.[1]

- There is considerable evidence that many evaluations, including moral judgments, take place automatically, at least in their initial stages (and these initial judgments anchor subsequent judgments).

- The moral reasoning process is highly biased by two sets of motives, which Haidt labels "relatedness" motives (relating to managing impressions and having smooth interactions with others) and "coherence" motives (preserving a coherent identity and worldview).

- The reasoning process has repeatedly been shown to create convincing post hoc justifications for behavior, which people believe even though they do not correctly describe the reason underlying the choice. This was demonstrated in a classic paper by Nisbett and Wilson (1977).

- According to Haidt, moral action covaries more with moral emotion than with moral reasoning.

These four arguments led Haidt to propose a major reinterpretation of decades of existing work on moral reasoning:

Because the justifications that people give are closely related to the moral judgments that they make, prior researchers have assumed that the justificatory reasons caused the judgments. But if people lack access to their automatic judgment processes then the reverse causal path becomes more plausible. If this reverse path is common, then the enormous literature on moral reasoning can be reinterpreted as a kind of ethnography of the a priori moral theories held by various communities and age groups.[1] (p. 822)

Objections to Haidt's model

Among the main criticisms of Haidt's model is that it underemphasizes the role of reasoning.[11] For example, Joseph Paxton and Joshua Greene (2010) review evidence suggesting that moral reasoning plays a significant role in moral judgment, including counteracting automatic tendencies toward bias.[12] Greene and colleagues have proposed an alternative to the social intuitionist model – the Dual Process Model[13] – which suggests that deontological moral judgments, which involve rights and duties, are driven primarily by intuition, while utilitarian judgments aimed at promoting the greater good are underpinned by controlled cognitive reasoning processes. Paul Bloom similarly criticizes Haidt's model on the grounds that intuition alone cannot account for historical changes in moral values.[14] Moral change, he believes, is largely a product of rational deliberation.

Augusto Blasi emphasizes the importance of moral responsibility and reflection as one analyzes an intuition.[15] His main argument is that some, if not most, intuitions tend to be self-centered and self-seeking.[16] Blasi critiques Haidt's description of the average person and questions whether the sequence the model describes (having an intuition, acting on it, and then justifying it) always occurs. He concludes that not everyone follows this model. In more detail, Blasi identifies five default positions in Haidt's account of intuition.

- Normally moral judgments are caused by intuitions, whether the intuitions are themselves caused by heuristics or the heuristics are themselves intuitions, and whether they are intrinsically based on emotions or depend on grammar-like rules and are only externally related to emotions.

- Intuitions occur rapidly and appear as unquestionably evident; either the intuitions themselves or their sources are unconscious.

- Intuitions are responses to minimal information, are not a result of analyses or reasoning; neither do they require reasoning to appear solid and true.

- Reasoning may occur, but infrequently; its use is in justifying the judgment after the fact, either to other people or to oneself. Reasons, in sum, do not have a moral function.

- Because such are the empirical facts, the "rationalistic" theories and methods of Piaget and Kohlberg are rejected.

Blasi argues that Haidt does not provide adequate evidence to support his position.[17]

Other researchers have criticized the evidence cited in support of social intuitionism relating to moral dumbfounding,[2] arguing these findings rely on a misinterpretation of participants' responses.[18][19]

References

- Haidt, Jonathan (2001). "The emotional dog and its rational tail: A social intuitionist approach to moral judgment". Psychological Review. 108 (4): 814–834. doi:10.1037/0033-295X.108.4.814. PMID 11699120.

- Haidt, Jonathan; Björklund, Fredrik; Murphy, Scott (August 10, 2000). "Moral Dumbfounding: When Intuition Finds No Reason" (PDF).

- Haidt, Jonathan (2012). The Righteous Mind: Why Good People Are Divided by Politics and Religion. Pantheon. pp. 913 Kindle ed. ISBN 978-0307377906.

- Levine, Charles; Kohlberg, Lawrence; Hewer, Alexandra (1985). "The Current Formulation of Kohlberg's Theory and a Response to Critics". Human Development. 28 (2): 94–100. doi:10.1159/000272945.

- McHugh, Cillian; McGann, Marek; Igou, Eric R.; Kinsella, Elaine L. (2017-10-04). "Searching for Moral Dumbfounding: Identifying Measurable Indicators of Moral Dumbfounding". Collabra: Psychology. 3 (1). doi:10.1525/collabra.79. ISSN 2474-7394.

- McHugh, Cillian; McGann, Marek; Igou, Eric R.; Kinsella, Elaine L. (2020-01-05). "Reasons or rationalizations: The role of principles in the moral dumbfounding paradigm". Journal of Behavioral Decision Making. x (x). doi:10.1002/bdm.2167. ISSN 1099-0771.

- Haidt, Jonathan. The righteous mind. Pantheon: 2012. Loc 539, Kindle ed. In footnote 29, Haidt credits the coinage of the term moral dumbfounding to social/experimental psychologist Daniel Wegner.

- Haidt, Jonathan. The righteous mind. Pantheon: 2012. Loc 763 Kindle ed.

- Grover, Burton L. (1989-06-30). "Patterns, Thinking, and Cognition: A Theory of Judgment by Howard Margolis. Chicago: University of Chicago Press, 1987, 332 pp. (ISBN 0-226-50527-8)". The Educational Forum. 53 (2): 199–202. doi:10.1080/00131728909335595. ISSN 0013-1725.

- Haidt, Jonathan. The righteous mind. Pantheon: 2012. Loc 1160 Kindle ed.

- LaFollette, Hugh; Woodruff, Michael L. (13 September 2013). "The limits of Haidt: How his explanation of political animosity fails". Philosophical Psychology. 28 (3): 452–465. doi:10.1080/09515089.2013.838752.

- Paxton, Joseph M.; Greene, Joshua D. (13 May 2010). "Moral Reasoning: Hints and Allegations". Topics in Cognitive Science. 2 (3): 511–527. doi:10.1111/j.1756-8765.2010.01096.x. PMID 25163874.

- Greene, J. D. (14 September 2001). "An fMRI Investigation of Emotional Engagement in Moral Judgment". Science. 293 (5537): 2105–2108. doi:10.1126/science.1062872. PMID 11557895.

- Bloom, Paul (March 2010). "How do morals change?". Nature. 464 (7288): 490. doi:10.1038/464490a.

- Narvaez, Darcia; Lapsley, Daniel K. (2009). Personality, Identity, and Character: Explorations in Moral Psychology. Cambridge University Press. p. 423. ISBN 978-0-521-89507-1.

- Narvaez & Lapsley 2009, p. 397.

- Narvaez & Lapsley 2009, p. 412.

- Guglielmo, Steve (January 2018). "Unfounded dumbfounding: How harm and purity undermine evidence for moral dumbfounding". Cognition. 170: 334–337. doi:10.1016/j.cognition.2017.08.002. PMID 28803616.

- Royzman, Edward B; Kim, Kwanwoo; Leeman, Robert F (2015). "The curious tale of Julie and Mark: Unraveling the moral dumbfounding effect". Judgment and Decision Making. 10 (4): 296–313.