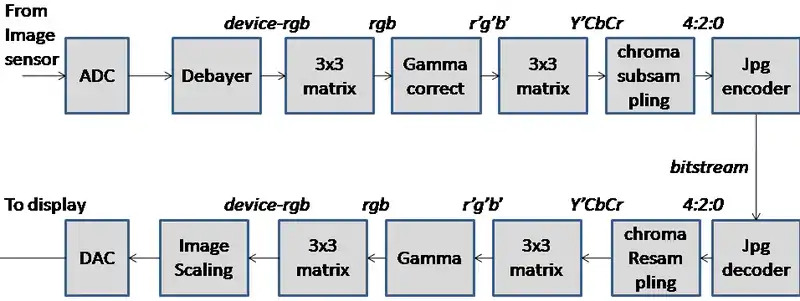

Color image pipeline

An image pipeline or video pipeline is the set of components commonly used between an image source (such as a camera, a scanner, or the rendering engine in a computer game), and an image renderer (such as a television set, a computer screen, a computer printer or cinema screen), or for performing any intermediate digital image processing consisting of two or more separate processing blocks. An image/video pipeline may be implemented as computer software, in a digital signal processor, on an FPGA, or as fixed-function ASIC. In addition, analog circuits can be used to do many of the same functions.

Typical components include image sensor corrections (including "debaying" or applying a Bayer filter), noise reduction, image scaling, gamma correction, image enhancement, colorspace conversion (between formats such as RGB, YUV or YCbCr), chroma subsampling, framerate conversion, image compression/video compression (such as JPEG), and computer data storage/data transmission.

Typical goals of an imaging pipeline may be perceptually pleasing end-results, colorimetric precision, a high degree of flexibility, low cost/low CPU utilization/long battery life, or reduction in bandwidth/file size.

Some functions may be algorithmically linear. Mathematically, those elements can be connected in any order without changing the end-result. As digital computers use a finite approximation to numerical computing, this is in practice not true. Other elements may be non-linear or time-variant. For both cases, there is often one or a few sequences of components that makes sense for optimum precision as well as minimum hardware-cost/CPU-load.[1]

See also

- Image processing

References

- Nakamura, Junichi (2005). Image Sensors and Signal Processing for Digital Still Cameras. CRC. ISBN 0-8493-3545-0.

- "Implementing Image-Processing Pipelines in Digital Cameras". Archived from the original on 2008-07-04. Retrieved 2008-07-06.