Laws of thermodynamics

The four fundamental laws of thermodynamics express empirical facts and define physical quantities, such as temperature, heat, thermodynamic work, and entropy, that characterize thermodynamic processes and thermodynamic systems in thermodynamic equilibrium. They describe the relationships between these quantities, and form a basis for precluding the possibility of certain phenomena, such as perpetual motion. In addition to their use in thermodynamics, the laws have interdisciplinary applications in physics and chemistry.

Traditionally, thermodynamics has stated three fundamental laws: the first law, the second law, and the third law.[1][2][3] A more fundamental statement was later labelled the 'zeroth law'. The law of conservation of mass is an equally fundamental concept in thermodynamics, but it is not generally counted as one of its laws.

The zeroth law of thermodynamics defines thermal equilibrium and forms a basis for the definition of temperature. It says that if two systems are each in thermal equilibrium with a third system, then they are in thermal equilibrium with each other.

The first law of thermodynamics says that when energy passes into or out of a system (as work, heat, or matter), the system's internal energy changes in accord with the law of conservation of energy. Equivalently, perpetual motion machines of the first kind (machines that produce work with no energy input) are impossible.

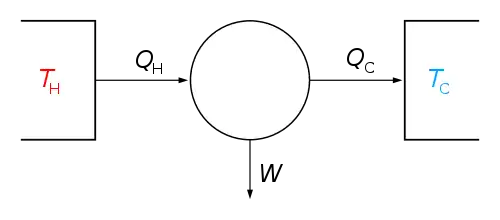

The second law of thermodynamics can be expressed in two main ways. In terms of possible processes, Rudolf Clausius stated that heat does not spontaneously pass from a colder body to a warmer body. Equivalently, perpetual motion machines of the second kind (machines that spontaneously convert thermal energy into mechanical work) are impossible. In terms of entropy, in a natural thermodynamic process, the sum of the entropies of interacting thermodynamic systems increases.

The third law of thermodynamics states that a system's entropy approaches a constant value as the temperature approaches absolute zero. With the exception of non-crystalline solids (glasses), the entropy of a system at absolute zero is typically close to zero.[2]

History

The history of thermodynamics is fundamentally interwoven with the history of physics and history of chemistry and ultimately dates back to theories of heat in antiquity. The laws of thermodynamics are the result of progress made in this field over the nineteenth and early twentieth centuries. The first established thermodynamic principle, which eventually became the second law of thermodynamics, was formulated by Sadi Carnot in 1824 in his book Reflections on the Motive Power of Fire. By 1860, as formalized in the works of scientists such as Rudolf Clausius and William Thomson, what are now known as the first and second laws were established. Later, Nernst's theorem (or Nernst's postulate), which is now known as the third law, was formulated by Walther Nernst over the period 1906–12. While the numbering of the laws is universal today, various textbooks throughout the 20th century have numbered the laws differently. In some fields, the second law was considered to deal with the efficiency of heat engines only, whereas what was called the third law dealt with entropy increases. Gradually, this resolved itself and a zeroth law was later added to allow for a self-consistent definition of temperature. Additional laws have been suggested, but have not achieved the generality of the four accepted laws, and are generally not discussed in standard textbooks.

Zeroth law

The zeroth law of thermodynamics provides the foundation of temperature as an empirical parameter in thermodynamic systems and establishes the transitive relation between the temperatures of multiple bodies in thermal equilibrium. The law may be stated in the following form:

If two systems are both in thermal equilibrium with a third system, then they are in thermal equilibrium with each other.[4]

Though this version of the law is one of the most commonly stated versions, it is only one of a diversity of statements that are labeled as "the zeroth law". Some statements go further, so as to supply the important physical fact that temperature is one-dimensional and that one can conceptually arrange bodies in a real number sequence from colder to hotter.[5][6][7]

These concepts of temperature and of thermal equilibrium are fundamental to thermodynamics and were clearly stated in the nineteenth century. The name 'zeroth law' was invented by Ralph H. Fowler in the 1930s, long after the first, second, and third laws were widely recognized. The law allows the definition of temperature in a non-circular way without reference to entropy, its conjugate variable. Such a temperature definition is said to be 'empirical'.[8][9][10][11][12][13]
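Because the zeroth law makes "is in thermal equilibrium with" transitive, pairwise observations sort systems into classes, and each class can be labelled by a single empirical temperature. The following is a minimal Python sketch of that bookkeeping, not a physical simulation; the helper `equilibrium_classes` and the system names are hypothetical and chosen only for illustration.

```python
def equilibrium_classes(systems, observed_pairs):
    """Group systems into classes of mutual thermal equilibrium.

    observed_pairs lists pairs that were directly observed to be in
    equilibrium; transitivity (the zeroth law) merges classes that
    share a member.
    """
    parent = {s: s for s in systems}

    def find(s):
        # Follow parent links up to the representative of the class.
        while parent[s] != s:
            parent[s] = parent[parent[s]]  # shorten the path as we go
            s = parent[s]
        return s

    for a, b in observed_pairs:
        parent[find(a)] = find(b)  # merge the two classes

    classes = {}
    for s in systems:
        classes.setdefault(find(s), set()).add(s)
    return list(classes.values())


# A and B were each compared only with the "thermometer" C; D was never compared.
classes = equilibrium_classes(["A", "B", "C", "D"], [("A", "C"), ("B", "C")])
```

Here A and B end up in the same class even though they were never brought into contact, which is exactly the inference the zeroth law licenses.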

First law

The first law of thermodynamics is a version of the law of conservation of energy, adapted for thermodynamic systems. In general, the law of conservation of energy states that the total energy of an isolated system is constant; energy can be transformed from one form to another, but can be neither created nor destroyed.

In a closed system (i.e. there is no transfer of matter into or out of the system), the first law states that the change in internal energy of the system ($\Delta U_{\text{system}}$) is equal to the difference between the heat supplied to the system (Q) and the work (W) done by the system on its surroundings:

$$\Delta U_{\text{system}} = Q - W$$

(An alternate sign convention, not used in this article, defines W as the work done on the system by its surroundings.)
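As a small numerical illustration of the closed-system statement, the sketch below applies the sign convention used in this article (W is the work done by the system). The function name and the numbers are hypothetical.

```python
def internal_energy_change(heat_in, work_by_system):
    """First law for a closed system: delta_U = Q - W.

    heat_in        -- heat supplied to the system, in joules
    work_by_system -- work done BY the system on its surroundings, in joules
    """
    return heat_in - work_by_system


# Hypothetical example: a gas absorbs 500 J of heat and does 200 J of expansion work.
delta_u = internal_energy_change(heat_in=500.0, work_by_system=200.0)
print(delta_u)  # 300.0
```

Under the alternate convention, where the work is counted as done on the system, the same process would be written with a work term of -200 J added to Q, giving the same 300 J.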

For processes that include transfer of matter, a further statement is needed.

When two initially isolated systems are combined into a new system, then the total internal energy of the new system, $U_{\text{system}}$, will be equal to the sum of the internal energies of the two initial systems, $U_1$ and $U_2$:

$$U_{\text{system}} = U_1 + U_2$$

The first law encompasses several principles:

- The conservation of energy, which says that energy can be neither created nor destroyed, but can only change form. A particular consequence of this is that the total energy of an isolated system does not change.

- The concept of internal energy and its relationship to temperature. If a system has a definite temperature, then its total energy has three distinguishable components, termed kinetic energy (energy due to the motion of the system as a whole), potential energy (energy resulting from an externally imposed force field), and internal energy. The establishment of the concept of internal energy distinguishes the first law of thermodynamics from the more general law of conservation of energy.

- Work is a process of transferring energy to or from a system in ways that can be described by macroscopic mechanical forces acting between the system and its surroundings. The work done by the system can come from its overall kinetic energy, from its overall potential energy, or from its internal energy.

- For example, when a machine (not a part of the system) lifts a system upwards, some energy is transferred from the machine to the system. The system's energy increases as work is done on the system and, in this particular case, the energy increase of the system is manifested as an increase in the system's gravitational potential energy. Work added to the system increases the potential energy of the system.

- When matter is transferred into a system, that mass's associated internal energy and potential energy are transferred with it:

  $$\Delta U_{\text{system}} = u \, \Delta M$$

  where u denotes the internal energy per unit mass of the transferred matter, as measured while in the surroundings, and ΔM denotes the amount of transferred mass.

- The flow of heat is a form of energy transfer. Heating is the natural process of moving energy to or from a system other than by work or the transfer of matter. When no work is done and no matter is transferred, the internal energy can only be changed by the transfer of energy as heat:

  $$\Delta U_{\text{system}} = Q$$
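Two of the transfer mechanisms listed above can be put into numbers with a short Python sketch. The function names, the value of g, and the example quantities are assumptions made for illustration only.

```python
G = 9.81  # m/s^2, assumed value of the gravitational acceleration


def potential_energy_gain(mass_kg, height_m, g=G):
    """Energy added to a system that an external machine lifts by height_m: m * g * h."""
    return mass_kg * g * height_m


def internal_energy_from_matter(u_per_kg, delta_mass_kg):
    """Internal energy carried into a system by transferred matter: u * delta_M."""
    return u_per_kg * delta_mass_kg


# Hypothetical example 1: a 10 kg system lifted by 2 m gains about 196 J of potential energy.
lift_gain = potential_energy_gain(10.0, 2.0)

# Hypothetical example 2: 0.5 kg of matter with u = 2.0e5 J/kg carries in 1.0e5 J.
matter_gain = internal_energy_from_matter(2.0e5, 0.5)
```

In the lifting case the energy appears as gravitational potential energy of the system as a whole, whereas in the matter-transfer case it appears as internal energy.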

Combining these principles leads to one traditional statement of the first law of thermodynamics: it is not possible to construct a machine which will perpetually output work without an equal amount of energy input to that machine. Or more briefly, a perpetual motion machine of the first kind is impossible.

Second law

The second law of thermodynamics indicates the irreversibility of natural processes, and, in many cases, the tendency of natural processes to lead towards spatial homogeneity of matter and energy, and especially of temperature. It can be formulated in a variety of interesting and important ways. One of the simplest is the Clausius statement, that heat does not spontaneously pass from a colder to a hotter body.

The second law implies the existence of a quantity called the entropy of a thermodynamic system. In terms of this quantity, it implies that:

When two initially isolated systems in separate but nearby regions of space, each in thermodynamic equilibrium with itself but not necessarily with each other, are then allowed to interact, they will eventually reach a mutual thermodynamic equilibrium. The sum of the entropies of the initially isolated systems is less than or equal to the total entropy of the final combination. Equality occurs just when the two original systems have all their respective intensive variables (temperature, pressure) equal; then the final system also has the same values.
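The entropy statement above can be checked numerically for a simple case. The sketch below assumes two bodies with constant heat capacities that exchange heat only with each other and do no work; each body's entropy change is then the integral of C dT / T, which is C ln(T_final / T_initial). The function name and the numbers are hypothetical.

```python
import math


def equilibration_entropy_change(c1, t1, c2, t2):
    """Return (final temperature, total entropy change) for two bodies in thermal contact.

    Assumes constant heat capacities c1, c2 (J/K), absolute temperatures
    t1, t2 (K), no work, and no exchange with anything else.
    """
    # Energy conservation fixes the common final temperature.
    t_final = (c1 * t1 + c2 * t2) / (c1 + c2)
    # Entropy change of each body: integral of C dT / T from its start to t_final.
    ds1 = c1 * math.log(t_final / t1)
    ds2 = c2 * math.log(t_final / t2)
    return t_final, ds1 + ds2


# Hypothetical example: two 100 J/K bodies, initially at 400 K and 300 K.
t_final, ds_total = equilibration_entropy_change(100.0, 400.0, 100.0, 300.0)
# t_final is 350 K and ds_total is roughly +2.06 J/K.

# Equal initial temperatures: nothing changes, so the total entropy change is zero.
_, ds_equal = equilibration_entropy_change(100.0, 300.0, 100.0, 300.0)
```

The hotter body loses entropy and the colder body gains more than that, so the total is positive; the equality case appears only when the initial temperatures already agree.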

The second law is applicable to a wide variety of processes, both reversible and irreversible. According to the second law, in a reversible heat transfer, an element of heat transferred, δQ, is the product of the temperature (T), both of the system and of the sources or destination of the heat, with the increment (dS) of the system's conjugate variable, its entropy (S):

$$\delta Q = T \, dS$$

While reversible processes are a useful and convenient theoretical limiting case, all natural processes are irreversible. A prime example of this irreversibility is the transfer of heat by conduction or radiation. It was known long before the discovery of the notion of entropy that when two bodies, initially of different temperatures, come into direct thermal connection, then heat immediately and spontaneously flows from the hotter body to the colder one.
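The direction of spontaneous heat flow can be tied to entropy with a short calculation. The sketch below assumes two reservoirs large enough to stay at fixed temperature, so that each one's entropy changes by the heat it receives divided by its temperature; the function name and the numbers are hypothetical.

```python
def entropy_generated(q, t_hot, t_cold):
    """Total entropy change when heat q (J) leaves a reservoir at t_hot
    and enters a reservoir at t_cold (both in kelvin, both held fixed).
    """
    return q / t_cold - q / t_hot


# Hypothetical example: 1000 J flows from a 400 K reservoir to a 300 K reservoir.
ds_forward = entropy_generated(1000.0, t_hot=400.0, t_cold=300.0)   # about +0.83 J/K

# The reverse flow (a negative q) would lower the total entropy, which is why
# it does not happen spontaneously.
ds_reverse = entropy_generated(-1000.0, t_hot=400.0, t_cold=300.0)  # about -0.83 J/K
```

A positive result for hot-to-cold flow and a negative one for cold-to-hot flow is the entropy form of the Clausius statement.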

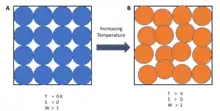

Entropy may also be viewed as a physical measure concerning the microscopic details of the motion and configuration of a system, when only the macroscopic states are known. Such details are often referred to as disorder on a microscopic or molecular scale, and less often as dispersal of energy. For two given macroscopically specified states of a system, there is a mathematically defined quantity called the 'difference of information entropy between them'. This defines how much additional microscopic physical information is needed to specify one of the macroscopically specified states, given the macroscopic specification of the other – often a conveniently chosen reference state which may be presupposed to exist rather than explicitly stated. A final condition of a natural process always contains microscopically specifiable effects which are not fully and exactly predictable from the macroscopic specification of the initial condition of the process. This is why entropy increases in natural processes – the increase tells how much extra microscopic information is needed to distinguish the initial macroscopically specified state from the final macroscopically specified state.[14] Equivalently, in a thermodynamic process, energy spreads.

Third law

The third law of thermodynamics can be stated as:[2]

A system's entropy approaches a constant value as its temperature approaches absolute zero.

At zero temperature the system must be in the state with the minimum thermal energy (the ground state). The constant value (not necessarily zero) of entropy at this point is called the residual entropy of the system. Note that, with the exception of non-crystalline solids (e.g. glasses), the residual entropy of a system is typically close to zero.[2] However, it reaches zero only when the system has a unique ground state (i.e. the state with the minimum thermal energy has only one configuration, or microstate). Microstates are used here to describe the probability of a system being in a specific state, as each microstate is assumed to have the same probability of occurring, so macroscopic states with fewer microstates are less probable. In general, entropy is related to the number of possible microstates according to the Boltzmann principle:

$$S = k_{\mathrm{B}} \ln \Omega$$

where S is the entropy of the system, $k_{\mathrm{B}}$ is the Boltzmann constant, and Ω is the number of microstates. For a system with a unique ground state, such as a perfect crystal of a pure substance, only one microstate is possible at absolute zero (Ω = 1: all the atoms are identical, so every ordering of them is the same configuration), and since ln(1) = 0 the entropy is zero.
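The Boltzmann relation between entropy and the number of microstates can be evaluated directly. The sketch below works with ln Ω rather than Ω so that very large microstate counts do not overflow. The two-orientation model used for the residual-entropy case is an illustrative assumption, not a claim about any particular substance.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant in J/K (exact in the 2019 SI)
N_A = 6.02214076e23  # Avogadro constant in 1/mol (exact in the 2019 SI)


def boltzmann_entropy(ln_omega):
    """Entropy S = k_B * ln(Omega), given ln(Omega) directly."""
    return K_B * ln_omega


# Unique ground state: Omega = 1, so the entropy is exactly zero.
s_unique = boltzmann_entropy(math.log(1))

# Assumed toy model: one mole of molecules, each frozen at random into one of
# two orientations, so Omega = 2**N_A and ln(Omega) = N_A * ln(2).
s_residual = boltzmann_entropy(N_A * math.log(2))  # about 5.76 J/K per mole
```

The second case shows how a degenerate ground state leaves a nonzero residual entropy even at absolute zero.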

See also

- Chemical thermodynamics

- Enthalpy

- Entropy production

- Ginsberg's theorem

- H-theorem

- Onsager reciprocal relations (sometimes described as a fourth law of thermodynamics)

- Statistical mechanics

- Table of thermodynamic equations

References

- Guggenheim, E.A. (1985). Thermodynamics. An Advanced Treatment for Chemists and Physicists, seventh edition, North Holland, Amsterdam, ISBN 0-444-86951-4.

- Kittel, C.; Kroemer, H. (1980). Thermal Physics, second edition, W.H. Freeman, San Francisco, ISBN 0-7167-1088-9.

- Adkins, C.J. (1968). Equilibrium Thermodynamics, McGraw-Hill, London, ISBN 0-07-084057-1.

- Guggenheim (1985), p. 8.

- Sommerfeld, A. (1951/1955). Thermodynamics and Statistical Mechanics, vol. 5 of Lectures on Theoretical Physics, edited by F. Bopp, J. Meixner, translated by J. Kestin, Academic Press, New York, p. 1.

- Serrin, J. (1978). The concepts of thermodynamics, in Contemporary Developments in Continuum Mechanics and Partial Differential Equations. Proceedings of the International Symposium on Continuum Mechanics and Partial Differential Equations, Rio de Janeiro, August 1977, edited by G.M. de La Penha, L.A.J. Medeiros, North-Holland, Amsterdam, ISBN 0-444-85166-6, pp. 411–51.

- Serrin, J. (1986). Chapter 1, 'An Outline of Thermodynamical Structure', pp. 3–32, in New Perspectives in Thermodynamics, edited by J. Serrin, Springer, Berlin, ISBN 3-540-15931-2.

- Adkins, C.J. (1968/1983). Equilibrium Thermodynamics, (first edition 1968), third edition 1983, Cambridge University Press, ISBN 0-521-25445-0, pp. 18–20.

- Bailyn, M. (1994). A Survey of Thermodynamics, American Institute of Physics Press, New York, ISBN 0-88318-797-3, p. 26.

- Buchdahl, H.A. (1966), The Concepts of Classical Thermodynamics, Cambridge University Press, London, pp. 30, 34ff, 46f, 83.

- Münster, A. (1970), Classical Thermodynamics, translated by E.S. Halberstadt, Wiley–Interscience, London, ISBN 0-471-62430-6, p. 22.

- Pippard, A.B. (1957/1966). Elements of Classical Thermodynamics for Advanced Students of Physics, original publication 1957, reprint 1966, Cambridge University Press, Cambridge, p. 10.

- Wilson, H.A. (1966). Thermodynamics and Statistical Mechanics, Cambridge University Press, London, pp. 4, 8, 68, 86, 97, 311.

- Ben-Naim, A. (2008). A Farewell to Entropy: Statistical Thermodynamics Based on Information, World Scientific, New Jersey, ISBN 978-981-270-706-2.

Further reading

Introductory

- Atkins, Peter (2007). Four Laws That Drive the Universe. OUP Oxford. ISBN 978-0199232369

- Goldstein, Martin & Inge F. (1993). The Refrigerator and the Universe. Harvard Univ. Press. ISBN 978-0674753259