Markov renewal process

In probability and statistics, a Markov renewal process (MRP) is a random process that generalizes the notion of Markov jump processes. Other random processes, such as Markov chains, Poisson processes, and renewal processes, can be derived as special cases of MRPs.

Definition

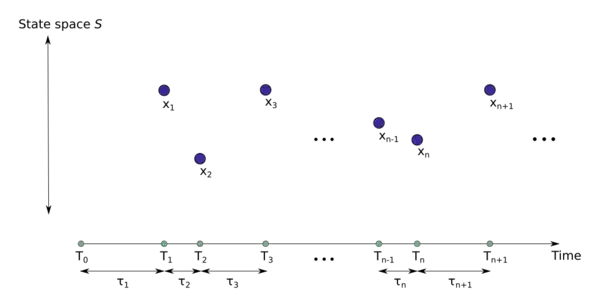

An illustration of a Markov renewal process

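Consider a state space $S$. Consider a set of random variables $(X_n, T_n)$, where $T_n$ are the jump times and $X_n$ are the associated states in the Markov chain (see Figure). Let the inter-arrival time be $\tau_n = T_n - T_{n-1}$. Then the sequence $(X_n, T_n)$ is called a Markov renewal process if

$$\Pr(\tau_{n+1} \le t, X_{n+1} = j \mid (X_0, T_0), (X_1, T_1), \ldots, (X_n = i, T_n)) = \Pr(\tau_{n+1} \le t, X_{n+1} = j \mid X_n = i)$$

for all $n \ge 1$, $t \ge 0$, and $i, j \in S$.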
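As an illustration of the definition, the following is a minimal simulation sketch, not a reference implementation. It assumes a finite state space labelled 0, 1, 2, a hypothetical transition matrix `P` for the sequence of states, and a hypothetical holding-time sampler `uniform_holding` whose distribution depends only on the current and next state. Under these assumptions, the next state and the next inter-arrival time are drawn using only the current state, which is the conditional-independence property the definition requires.

```python
import random

def simulate_mrp(P, sample_holding, x0, n_jumps, rng):
    """Simulate states X_0..X_n and jump times T_0..T_n of a Markov renewal process.

    P              -- transition matrix of the state sequence (list of rows)
    sample_holding -- function (i, j, rng) -> inter-arrival time for a jump i -> j
    """
    states = [x0]
    times = [0.0]  # convention assumed here: T_0 = 0
    for _ in range(n_jumps):
        i = states[-1]
        # The next state depends only on the current state i.
        j = rng.choices(range(len(P[i])), weights=P[i])[0]
        # The inter-arrival time depends only on (i, j), not on earlier history.
        tau = sample_holding(i, j, rng)
        states.append(j)
        times.append(times[-1] + tau)
    return states, times

# Hypothetical three-state example (all values chosen for illustration only).
P = [[0.0, 0.7, 0.3],
     [0.5, 0.0, 0.5],
     [0.4, 0.6, 0.0]]

def uniform_holding(i, j, rng):
    # Non-exponential holding time, so the result is not a CTMC in general.
    return rng.uniform(0.0, 1.0 + i + j)

states, times = simulate_mrp(P, uniform_holding, x0=0, n_jumps=10,
                             rng=random.Random(0))
```

Drawing the next state first and then the holding time is one convenient factorization of the joint conditional distribution; sampling the pair jointly would be equally valid.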

Relation to other stochastic processes

- If we define a new stochastic process $Y_t := X_n$ for $t \in [T_n, T_{n+1})$, then the process $Y_t$ is called a semi-Markov process. Note that the main difference between an MRP and a semi-Markov process is that the former is defined as a two-tuple of states and times, whereas the latter is the actual random process that evolves over time, and any realisation of the process has a defined state for any given time. The entire process is not Markovian, i.e., memoryless, as happens in a continuous-time Markov chain (CTMC). Instead, the process is Markovian only at the specified jump instants. This is the rationale behind the name semi-Markov.[1][2][3] (See also: hidden semi-Markov model.)

- A semi-Markov process (defined in the above bullet point) where all the holding times are exponentially distributed is called a CTMC. In other words, if the inter-arrival times are exponentially distributed and if the waiting time in a state and the next state reached are independent, we have a CTMC.

- The sequence $X_n$ in the MRP is a discrete-time Markov chain (DTMC). In other words, if the time variables are ignored in the MRP equation, we end up with a DTMC.

- If the inter-arrival times $\tau_n$ are independent and identically distributed, and if their distribution does not depend on the state $X_n$, then the process is a renewal process. So, if the states are ignored and we have a chain of i.i.d. times, then we have a renewal process.
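The relations listed above can be made concrete with a short sketch. It assumes a single hand-written realisation of states and jump times (the lists `states` and `times` are hypothetical values, not data from any source) and shows how the semi-Markov process, the sequence of states, and the inter-arrival times are each read off from the same pairs.

```python
from bisect import bisect_right

def semi_markov_state(states, times, t):
    """Return Y_t = X_n for t in [T_n, T_{n+1}), given an observed realisation."""
    if t < times[0] or t >= times[-1]:
        # Beyond the last observed jump the next jump time is unknown.
        raise ValueError("t lies outside the observed jump times")
    n = bisect_right(times, t) - 1  # index of the last jump at or before t
    return states[n]

# Hypothetical realisation: states X_0..X_3 and jump times T_0..T_3.
states = [0, 2, 1, 0]
times = [0.0, 1.5, 2.0, 4.5]

# Semi-Markov process: a state is defined at every time in [T_0, T_3).
y_at_1 = semi_markov_state(states, times, 1.0)
y_at_jump = semi_markov_state(states, times, 1.5)

# Ignoring the times leaves the sequence of states.
embedded_chain = list(states)

# Ignoring the states leaves the inter-arrival times tau_n = T_n - T_{n-1}.
inter_arrivals = [t2 - t1 for t1, t2 in zip(times, times[1:])]
```

The half-open interval convention means that at a jump instant the process already takes the new state, which is why `bisect_right` is used rather than `bisect_left`.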

References

- Medhi, J. (1982). Stochastic processes. New York: Wiley & Sons. ISBN 978-0-470-27000-4.

- Ross, Sheldon M. (1999). Stochastic processes (2nd ed.). New York [u.a.]: Routledge. ISBN 978-0-471-12062-9.

- Barbu, Vlad Stefan; Limnios, Nikolaos (2008). Semi-Markov chains and hidden semi-Markov models toward applications : their use in reliability and DNA analysis. New York: Springer. ISBN 978-0-387-73171-1.
