Operant conditioning chamber

An operant conditioning chamber (also known as a Skinner box) is a laboratory apparatus used to study animal behavior. It was created by B. F. Skinner while he was a graduate student at Harvard University, and may have been inspired by Jerzy Konorski's studies. It is used to study both operant conditioning and classical conditioning.[1][2]

Skinner created the operant chamber as a variation of the puzzle box originally created by Edward Thorndike.[3]

History

In 1905, the American psychologist Edward Thorndike proposed a ‘law of effect’, which formed the basis of operant conditioning. Thorndike conducted experiments to discover how cats learn new behaviors. His most famous work involved monitoring cats as they attempted to escape from puzzle boxes, which trapped the animals until they moved a lever or performed another action that triggered their release. He ran several trials with each animal and carefully recorded the time it took them to perform the actions needed to escape. Thorndike found that his cats seemed to learn through trial and error rather than through insightful inspection of their environment. Learning happened when an action led to an effect, and this effect influenced whether the behavior would be repeated. Thorndike’s ‘law of effect’ contained the core elements of what would become known as operant conditioning.

About fifty years after Thorndike first described the principles of operant conditioning and the law of effect, B. F. Skinner expanded upon his work. Skinner theorized that if a behavior is followed by a reward, that behavior is more likely to be repeated, and added that if it is followed by some sort of punishment, it is less likely to be repeated.

Purpose

An operant conditioning chamber permits experimenters to study behavior conditioning (training) by teaching a subject animal to perform certain actions (like pressing a lever) in response to specific stimuli, such as a light or sound signal. When the subject correctly performs the behavior, the chamber mechanism delivers food or another reward. In some cases, the mechanism delivers a punishment for incorrect or missing responses.

For instance, to test how operant conditioning works in certain invertebrates, such as fruit flies, psychologists use a device known as a "heat box". It takes essentially the same form as the Skinner box, but the box has two sides: one that can undergo a temperature change and one that cannot. As soon as the invertebrate crosses over to the side that can change temperature, that area is heated. Eventually, the invertebrate is conditioned to stay on the side that does not change temperature. The conditioning is strong enough that even when the temperature is turned to its lowest point, the fruit fly still refrains from approaching that area of the heat box.[4] These types of apparatus allow experimenters to study conditioning and training through reward and punishment mechanisms.
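The heat-box contingency described above can be illustrated with a minimal sketch. It assumes the animal's position is sampled along one axis and that positions below a boundary lie on the side that can be heated; the function name, the boundary value, and the sample positions are hypothetical and not taken from the cited study.

```python
from typing import List, Tuple


def run_heat_box(positions: List[float], boundary: float = 0.0) -> Tuple[List[bool], float]:
    """Return the heater state for each position sample and the fraction
    of samples spent on the side that is never heated.

    Assumption (illustrative only): positions below `boundary` are on the
    side that can be heated; all other positions are on the unheated side.
    """
    # The heater is switched on whenever the animal is on the heatable side.
    heater_on = [x < boundary for x in positions]
    if not positions:
        return heater_on, 0.0
    # Fraction of samples on the unheated side, a simple avoidance measure.
    safe_fraction = heater_on.count(False) / len(positions)
    return heater_on, safe_fraction


# Hypothetical position samples for one session.
positions = [-2.0, -1.0, 0.5, 1.5, -0.5, 2.0, 3.0, 2.5]
heater, safe_fraction = run_heat_box(positions)
print(heater, safe_fraction)
```

A rising fraction of samples on the unheated side across sessions would be one simple way to summarize the avoidance described above, although real experiments may use other measures.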

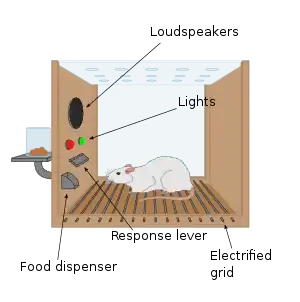

Structure

The structure forming the shell of a chamber is a box large enough to easily accommodate the animal being used as a subject. Commonly used model animals include rodents (usually lab rats), pigeons, and primates. The chamber is often sound-proof and light-proof to avoid distracting stimuli.

Operant chambers have at least one operandum (or "manipulandum"), and often two or more, that can automatically detect the occurrence of a behavioral response or action. Typical operanda for primates and rats are response levers; if the subject presses the lever, the opposite end moves and closes a switch that is monitored by a computer or other programmed device. Typical operanda for pigeons and other birds are response keys with a switch that closes if the bird pecks at the key with sufficient force. The other minimal requirement of a conditioning chamber is a means of delivering a primary reinforcer or unconditioned stimulus, such as food (usually pellets) or water. The chamber can also register the delivery of a conditioned reinforcer, such as an LED signal used as a "token" (see, for example, Jackson & Hackenberg 1996 in the Journal of the Experimental Analysis of Behavior).
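The minimal configuration of one monitored switch and one feeder can be sketched as follows. The sketch assumes the controlling program samples the lever switch periodically and delivers one pellet for every detected press; the class name, its fields, and the one-pellet-per-press rule are illustrative assumptions, not a description of any particular commercial chamber.

```python
from dataclasses import dataclass


@dataclass
class LeverChamber:
    """Hypothetical controller for one response lever and one feeder."""

    responses: int = 0          # number of lever presses detected
    pellets_delivered: int = 0  # number of reinforcers delivered
    _was_closed: bool = False   # switch state at the previous sample

    def sample_switch(self, closed: bool) -> bool:
        """Process one switch sample; return True if a pellet was delivered."""
        # A press is counted only on the open-to-closed transition, so
        # holding the lever down does not register repeated responses.
        pressed = closed and not self._was_closed
        self._was_closed = closed
        if pressed:
            self.responses += 1
            self.pellets_delivered += 1  # assumed rule: every press is reinforced
        return pressed


chamber = LeverChamber()
# Hypothetical stream of switch samples (True means the switch is closed).
samples = [False, True, True, False, True, False, False, True]
deliveries = [chamber.sample_switch(s) for s in samples]
print(chamber.responses, chamber.pellets_delivered)
```

Counting only open-to-closed transitions is a design choice made here so that a single sustained press counts as one response.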

Even with such a simple configuration (one operandum and one feeder), it is possible to investigate a variety of psychological phenomena. Modern operant conditioning chambers typically have multiple operanda, such as several response levers, two or more feeders, and a variety of devices capable of generating different stimuli including lights, sounds, music, figures, and drawings. Some configurations use an LCD panel for the computer generation of a variety of visual stimuli.

Some operant chambers also have electrified nets or floors so that shocks can be given to the animals, or lights of different colors that signal when food is available. Although the use of shock is not unheard of, approval may be needed in countries that regulate experimentation on animals.

Research impact

Operant conditioning chambers have become common in a variety of research disciplines, especially animal learning. Operant conditioning has a variety of applications. For instance, a child's behavior is shaped by the compliments, comments, approval, and disapproval it receives. An important feature of operant conditioning is its ability to explain learning in real-life situations. From an early age, parents nurture their children’s behavior by using rewards and by showing praise following an achievement (crawling or taking a first step), which reinforces such behavior. When a child misbehaves, punishments in the form of verbal discouragement or the removal of privileges are used to discourage the child from repeating the behavior. Another example of behavior shaping is seen in military students: they are exposed to strict punishments, and this continuous routine influences their behavior and shapes them into disciplined individuals. Skinner’s theory of operant conditioning played a key role in helping psychologists understand how behavior is learned. It explains why reinforcement can be used so effectively in the learning process, and how schedules of reinforcement can affect the outcome of conditioning.
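To make the idea of a schedule of reinforcement concrete, the following minimal sketch contrasts two commonly described schedules: a fixed-ratio schedule, which reinforces every n-th response, and a variable-ratio schedule, which reinforces after an unpredictable number of responses. The text above does not name specific schedules, so the class names, the uniform draw of the response requirement, and the seed are all illustrative assumptions.

```python
import random


class FixedRatio:
    """Reinforce every `ratio`-th response (illustrative sketch)."""

    def __init__(self, ratio: int) -> None:
        self.ratio = ratio
        self.count = 0

    def respond(self) -> bool:
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.ratio:
            self.count = 0
            return True
        return False


class VariableRatio:
    """Reinforce after a randomly drawn number of responses.

    Assumption: the requirement is drawn uniformly from
    1 .. 2 * mean_ratio - 1, so that its average equals mean_ratio.
    """

    def __init__(self, mean_ratio: int, seed: int = 0) -> None:
        self.mean_ratio = mean_ratio
        self.rng = random.Random(seed)  # seeded for repeatable runs
        self.count = 0
        self.required = self._draw()

    def _draw(self) -> int:
        return self.rng.randint(1, 2 * self.mean_ratio - 1)

    def respond(self) -> bool:
        """Register one response; return True if it is reinforced."""
        self.count += 1
        if self.count >= self.required:
            self.count = 0
            self.required = self._draw()
            return True
        return False


fr = FixedRatio(3)
fr_rewards = [fr.respond() for _ in range(9)]

vr = VariableRatio(3, seed=1)
vr_rewards = [vr.respond() for _ in range(300)]
print(sum(fr_rewards), sum(vr_rewards))
```

Under the fixed-ratio schedule the subject can predict which response will be reinforced, whereas under the variable-ratio schedule it cannot, which is the kind of difference between schedules that experimenters compare.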

Commercial applications

Slot machines and online games are sometimes cited[5] as examples of human devices that use sophisticated operant schedules of reinforcement to reward repetitive actions.[6]

Social networking services such as Google, Facebook and Twitter have been identified as using these techniques. Critics use terms such as Skinnerian marketing[7] for the way the companies apply these ideas to keep users engaged with the service.

Gamification, the technique of using game design elements in non-game contexts, has also been described as using operant conditioning and other behaviorist techniques to encourage desired user behaviors.[8]

Skinner box

Skinner is noted to have said that he did not want to be an eponym.[9] Further, he believed that Clark Hull and his Yale students coined the expression. Skinner stated that he did not use the term himself, and went so far as to ask Howard Hunt to use "lever box" instead of "Skinner box" in a published document.[10]

References

- Carlson, Neil R. (2009). Psychology: The Science of Behavior (4th ed.). U.S.: Pearson Education Canada. p. 207. ISBN 978-0-205-64524-4.

- Krebs, John R. (1983). "Animal behaviour: From Skinner box to the field". Nature. 304 (5922): 117. Bibcode:1983Natur.304..117K. doi:10.1038/304117a0. PMID 6866102. S2CID 5360836.

- Schacter, Daniel L.; Gilbert, Daniel T.; Wegner, Daniel M.; Nock, Matthew K. (2014). "B. F. Skinner: The Role of Reinforcement and Punishment". Psychology (3rd ed.). Macmillan. pp. 278–80. ISBN 978-1-4641-5528-4.

- Brembs, Björn (2003). "Operant conditioning in invertebrates" (PDF). Current Opinion in Neurobiology. 13 (6): 710–717. doi:10.1016/j.conb.2003.10.002. PMID 14662373. S2CID 2385291.

- Hopson, J. (April 2001). "Behavioral game design". Gamasutra. Retrieved 27 April 2019.

- Coon, Dennis (2005). Psychology: A modular approach to mind and behavior. Thomson Wadsworth. pp. 278–279. ISBN 0-534-60593-1.

- Davidow, Bill. "Skinner Marketing: We're the Rats, and Facebook Likes Are the Reward". The Atlantic. Retrieved 1 May 2015.

- Thompson, Andrew (6 May 2015). "Slot machines perfected addictive gaming. Now, tech wants their tricks". The Verge.

- Skinner, B. F. (1959). Cumulative record (1999 definitive ed.). Cambridge, MA: B.F. Skinner Foundation. p. 620.

- Skinner, B. F. (1983). A Matter of Consequences. New York, NY: Alfred A. Knopf, Inc. pp. 116, 164.

External links

- From Pavlov to Skinner Box - background and experiment