Probabilistic metric space

In mathematics, a probabilistic metric space is a generalization of a metric space in which the distance no longer takes values in the non-negative real numbers R≥0, but in distribution functions.

Let D+ be the set of all probability distribution functions F such that F(0) = 0, where F is a nondecreasing, left-continuous mapping from R into [0, 1] with sup F = 1.

Then, given a non-empty set S and a function F: S × S → D+, where we denote F(p, q) by F_{p,q} for every (p, q) ∈ S × S, the ordered pair (S, F) is said to be a probabilistic metric space if:

- For all u and v in S, u = v if and only if F_{u,v}(x) = 1 for all x > 0.

- For all u and v in S, F_{u,v} = F_{v,u}.

- For all u, v and w in S, F_{u,v}(x) = 1 and F_{v,w}(y) = 1 ⇒ F_{u,w}(x + y) = 1 for all x, y > 0.
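As a minimal sketch of these three conditions, any ordinary metric d on S induces a probabilistic metric by taking F_{u,v} to be the left-continuous unit step located at d(u, v). The names `d`, `step_pm`, `check_axioms`, `points` and `xs` below are illustrative assumptions, not part of the definition, and the check only samples finitely many arguments x rather than proving the conditions.

```python
from itertools import product

def d(u: float, v: float) -> float:
    """Ordinary metric on the real line (chosen only for illustration)."""
    return abs(u - v)

def step_pm(u: float, v: float):
    """Return F_{u,v}: 0 for x <= d(u, v), 1 for x > d(u, v) (left-continuous)."""
    dist = d(u, v)
    return lambda x: 1.0 if x > dist else 0.0

def check_axioms(points, xs) -> bool:
    """Spot-check the three conditions on the sampled positive arguments xs."""
    for u, v in product(points, repeat=2):
        f_uv, f_vu = step_pm(u, v), step_pm(v, u)
        # Condition 1: u = v exactly when F_{u,v}(x) = 1 for every sampled x > 0.
        if (u == v) != all(f_uv(x) == 1.0 for x in xs):
            return False
        # Condition 2: symmetry, F_{u,v} = F_{v,u}.
        if any(f_uv(x) != f_vu(x) for x in xs):
            return False
    for u, v, w in product(points, repeat=3):
        f_uv, f_vw, f_uw = step_pm(u, v), step_pm(v, w), step_pm(u, w)
        # Condition 3: F_{u,v}(x) = 1 and F_{v,w}(y) = 1 imply F_{u,w}(x + y) = 1.
        for x, y in product(xs, repeat=2):
            if f_uv(x) == 1.0 and f_vw(y) == 1.0 and f_uw(x + y) != 1.0:
                return False
    return True

points = [0.0, 1.0, 2.5]
xs = [0.25, 0.5, 1.0, 1.5, 2.0, 3.0]
axioms_hold = check_axioms(points, xs)
```

For this step-function choice the third condition is just the ordinary triangle inequality: d(u, v) < x and d(v, w) < y force d(u, w) < x + y.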

Probability metric of random variables

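A probability metric D between two random variables X and Y may be defined, for example, as

$$D(X, Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} |x - y| \, F(x, y) \, dx \, dy$$

where F(x, y) denotes the joint probability density function of the random variables X and Y. If X and Y are independent of each other, then the equation above becomes

$$D(X, Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} |x - y| \, f(x) \, g(y) \, dx \, dy$$

where f(x) and g(y) are the probability density functions of X and Y respectively.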
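Taking D(X, Y) to be the expected absolute difference E|X − Y|, the independent case can be estimated by drawing X and Y separately and averaging |x − y|. The sketch below uses only the Python standard library; the names `estimate_d`, `uniform01` and the sample size are illustrative assumptions.

```python
import random
from typing import Callable

Sampler = Callable[[random.Random], float]

def estimate_d(sample_x: Sampler, sample_y: Sampler,
               n: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of D(X, Y) = E|X - Y| for independent X and Y."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        # Independent draws: one from the law of X, one from the law of Y.
        total += abs(sample_x(rng) - sample_y(rng))
    return total / n

def uniform01(rng: random.Random) -> float:
    """Sample from the uniform distribution on [0, 1)."""
    return rng.random()

# X and Y independent and both uniform on [0, 1); the exact value is 1/3.
d_uniform = estimate_d(uniform01, uniform01)
```

Here X and Y have the same distribution, yet the estimate is close to 1/3 rather than 0, since two independent draws rarely coincide.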

One may easily show that such probability metrics satisfy the first metric axiom if and only if both arguments X and Y are certain events described by Dirac delta probability density functions. In this case:

$$D(X, Y) = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} |x - y| \, \delta(x - \mu_x) \, \delta(y - \mu_y) \, dx \, dy = |\mu_x - \mu_y|$$

the probability metric simply reduces to the metric between the expected values μ_x, μ_y of the variables X and Y.

For all other random variables X, Y the probability metric does not satisfy the identity of indiscernibles condition required of the metric of a metric space, that is:

$$D(X, X) > 0.$$

Example

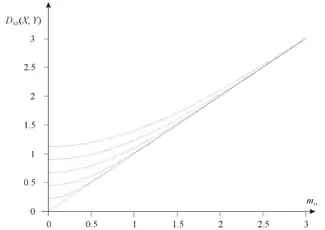

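For example, if the probability distribution functions of both random variables X and Y are normal distributions (N) with the same standard deviation σ, and X and Y are independent, integrating D(X, Y) yields:

$$D_{NN}(X, Y) = \mu_{xy} + \frac{2\sigma}{\sqrt{\pi}} \exp\left(-\frac{\mu_{xy}^2}{4\sigma^2}\right) - \mu_{xy} \operatorname{erfc}\left(\frac{\mu_{xy}}{2\sigma}\right)$$

where

- $\mu_{xy} = |\mu_x - \mu_y|$,

and erfc is the complementary error function.

In this case:

$$\lim_{\mu_{xy} \to 0} D_{NN}(X, Y) = D_{NN}(X, X) = \frac{2\sigma}{\sqrt{\pi}}.$$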
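The normal case can be checked numerically. The sketch below assumes X and Y are independent with common standard deviation σ, so that X − Y is normal with mean μ_x − μ_y and variance 2σ², and evaluates the mean of |X − Y| (the mean of a folded normal distribution) in closed form; the function names `d_nn` and `d_nn_monte_carlo` are illustrative, and the sampling routine is only a cross-check.

```python
import math
import random

def d_nn(mu_x: float, mu_y: float, sigma: float) -> float:
    """Closed-form E|X - Y| for independent N(mu_x, sigma^2), N(mu_y, sigma^2)."""
    mu_xy = abs(mu_x - mu_y)
    return (mu_xy
            + 2.0 * sigma / math.sqrt(math.pi)
            * math.exp(-mu_xy ** 2 / (4.0 * sigma ** 2))
            - mu_xy * math.erfc(mu_xy / (2.0 * sigma)))

def d_nn_monte_carlo(mu_x: float, mu_y: float, sigma: float,
                     n: int = 200_000, seed: int = 0) -> float:
    """Sampling estimate of the same quantity, used only as a sanity check."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        total += abs(rng.gauss(mu_x, sigma) - rng.gauss(mu_y, sigma))
    return total / n

# Equal means: the value is 2 * sigma / sqrt(pi), not zero.
self_distance = d_nn(0.0, 0.0, 1.0)

# Closed form against sampling for means one standard deviation apart.
closed = d_nn(1.0, 0.0, 1.0)
sampled = d_nn_monte_carlo(1.0, 0.0, 1.0)
```

When the means are many standard deviations apart, the exponential and erfc terms vanish and the value approaches |μ_x − μ_y|, matching the Dirac delta case.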

Probability metric of random vectors

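The probability metric of random variables may be extended to a metric D(X, Y) of random vectors X, Y by replacing |x − y| with any metric operator d(x, y):

$$D(\mathbf{X}, \mathbf{Y}) = \int_{\Omega} \int_{\Omega} d(\mathbf{x}, \mathbf{y}) \, F(\mathbf{x}, \mathbf{y}) \, d\Omega_x \, d\Omega_y$$

where F(x, y) is the joint probability density function of the random vectors X and Y. For example, substituting the Euclidean metric for d(x, y), and provided that the vectors X and Y are mutually independent, yields:

$$D(\mathbf{X}, \mathbf{Y}) = \int_{\Omega} \int_{\Omega} \sqrt{\sum_i |x_i - y_i|^2} \, F(\mathbf{x}) \, G(\mathbf{y}) \, d\Omega_x \, d\Omega_y$$

where F(x) and G(y) are the probability density functions of X and Y respectively.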
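For independent random vectors, the same quantity can be sketched as the sample average of d(x, y) over independent draws. The code below assumes Python 3.8 or later for `math.dist` (the Euclidean metric); the names `estimate_vector_d` and `std_normal_2d` are hypothetical helpers, and any other metric can be passed as `metric`.

```python
import math
import random
from typing import Callable, Sequence

Vector = Sequence[float]
VectorSampler = Callable[[random.Random], Vector]
Metric = Callable[[Vector, Vector], float]

def estimate_vector_d(sample_x: VectorSampler, sample_y: VectorSampler,
                      metric: Metric = math.dist,
                      n: int = 100_000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[d(X, Y)] for independent random vectors."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        # Draw X and Y independently, then apply the chosen metric.
        total += metric(sample_x(rng), sample_y(rng))
    return total / n

def std_normal_2d(rng: random.Random) -> Vector:
    """Two independent standard normal components."""
    return (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))

# Two independent standard normal vectors in the plane: X - Y has
# independent N(0, 2) components, so |X - Y| is Rayleigh with mean sqrt(pi).
d_gauss = estimate_vector_d(std_normal_2d, std_normal_2d)

# Deterministic vectors (point masses): the ordinary Euclidean distance.
d_points = estimate_vector_d(lambda rng: (0.0, 0.0),
                             lambda rng: (3.0, 4.0), n=10)
```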