Wikipedia and fact-checking

The topic of Wikipedia and fact-checking covers the process by which Wikipedia editors fact-check Wikipedia, the reuse of Wikipedia to fact-check other publications, and the cultural discussion of Wikipedia's place in fact-checking.

Wikipedia's volunteer editor community is responsible for fact-checking Wikipedia's content.[1] Fact-checking is one aspect of the broader reliability of Wikipedia. Various academic studies about Wikipedia, along with the body of criticism of Wikipedia, seek to describe the limits of Wikipedia's reliability, document who uses Wikipedia for fact-checking and how, and identify the consequences of using Wikipedia as a fact-checking resource.

Major platforms including YouTube[2] and Facebook[3] use Wikipedia's content to confirm the accuracy of information in their own media collections.

Seeking public trust is a major part of Wikipedia's publication philosophy.[4] Various reader polls and studies have reported public trust in Wikipedia's process for quality control.[4][5]

Use for fact-checking

Public trust and counter to fake news

Wikipedia served as a public resource for access to information about COVID-19.[6] In general, the public uses Wikipedia to counter fake news.[7]

Other platforms fact-check with Wikipedia

At the 2018 South by Southwest conference, YouTube CEO Susan Wojcicki announced that YouTube was using Wikipedia to fact-check videos that it hosts.[2][8][9][10] No one at YouTube had consulted anyone at Wikipedia about this development, and the news came as a surprise at the time.[8] YouTube's intent was to use Wikipedia to counter the spread of conspiracy theories.[8]

Facebook uses Wikipedia in various ways. Following criticism of Facebook in the context of fake news around the 2016 United States presidential election, Facebook recognized that Wikipedia already had an established process for fact-checking.[3] Facebook's subsequent strategy for countering fake news included using content from Wikipedia for fact-checking.[3][11] In 2020 Facebook began to incorporate information from Wikipedia's infoboxes into its own general reference knowledge panels to provide objective information.[12]

Fact-checking process

Fact-checking is one aspect of the general editing process in Wikipedia. The volunteer community develops processes for reference checking and fact-checking through community groups such as WikiProject Reliability.[7]

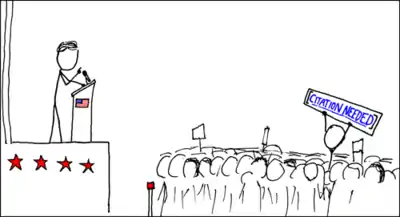

Wikipedia has a reputation for cultivating a culture of fact-checking among its editors.[13] Wikipedia's fact-checking process depends on the activity of its volunteer community of contributors, who numbered 200,000 as of 2018.[1]

The development of fact-checking practices is ongoing in the Wikipedia editing community.[4] One development that took years was the 2017 community decision to declare a particular news source, the Daily Mail, generally unreliable as a citation for verifying claims.[4][14]

Limitations

When Wikipedia experiences vandalism, platforms that reuse Wikipedia's content may republish the vandalized content.[15] In 2018 Facebook and YouTube were major users of Wikipedia for fact-checking, but those commercial platforms were not contributing to Wikipedia's free nonprofit operations in any way.[15]

In 2016 journalists described how vandalism in Wikipedia undermines its use as a credible source.[16]

References

- Timmons, Heather; Kozlowska, Hanna (27 April 2018). "200,000 volunteers have become the fact checkers of the internet". Quartz.

- Glaser, April (14 August 2018). "YouTube Is Adding Fact-Check Links for Videos on Topics That Inspire Conspiracy Theories". Slate Magazine.

- Flynn, Kerry (5 October 2017). "Facebook outsources its fake news problem to Wikipedia—and an army of human moderators". Mashable.

- Iannucci, Rebecca (6 July 2017). "What can fact-checkers learn from Wikipedia? We asked the boss of its nonprofit owner". Poynter Institute.

- Cox, Joseph (11 August 2014). "Why People Trust Wikipedia More Than the News". Vice.

- Benjakob, Omer (4 August 2020). "Why Wikipedia is immune to coronavirus". Haaretz.

- McDowell, Zachary J.; Vetter, Matthew A. (July 2020). "It Takes a Village to Combat a Fake News Army: Wikipedia's Community and Policies for Information Literacy". Social Media + Society. 6 (3): 205630512093730. doi:10.1177/2056305120937309. ISSN 2056-3051. Wikidata Q105083357.

- Montgomery, Blake; Mac, Ryan; Warzel, Charlie (13 March 2018). "YouTube Said It Will Link To Wikipedia Excerpts On Conspiracy Videos — But It Didn't Tell Wikipedia". BuzzFeed News.

- Feldman, Brian (16 March 2018). "Why Wikipedia Works". Intelligencer. New York.

- Feldman, Brian (14 March 2018). "Wikipedia Is Not Going to Save YouTube From Misinformation". Intelligencer. New York.

- Locker, Melissa (5 October 2017). "Facebook thinks the answer to its fake news problems is Wikipedia". Fast Company.

- Perez, Sarah (11 June 2020). "Facebook tests Wikipedia-powered information panels, similar to Google, in its search results". TechCrunch.

- Keller, Jared (14 June 2017). "How Wikipedia Is Cultivating an Army of Fact Checkers to Battle Fake News". Pacific Standard.

- Rodriguez, Ashley (10 February 2017). "In a first, Wikipedia has deemed the Daily Mail too "unreliable" to be used as a citation". Quartz.

- Funke, Daniel (18 June 2018). "Wikipedia vandalism could thwart hoax-busting on Google, YouTube and Facebook". Poynter. Poynter Institute.

- A.E.S. (15 January 2016). "Wikipedia celebrates its first 15 years". The Economist.

Further consideration

- Anker, Andrew; Su, Sara; Smith, Jeff (5 October 2017). "New Test to Provide Context About Articles". About Facebook. Facebook, Inc.

- Mohan, Neal; Kyncl, Robert (9 July 2018). "Building a better news experience on YouTube, together". blog.youtube. YouTube.

External links

- Wikipedia:WikiProject Reliability, the English Wikipedia community project which self-organizes fact-checking