Misinformation

Misinformation is false or inaccurate information that is communicated regardless of an intention to deceive.[1][2] Examples of misinformation include false rumors, insults, and pranks. Disinformation is a species of misinformation that is deliberately deceptive, e.g. malicious hoaxes, spearphishing, and computational propaganda.[3] The principal effect of misinformation is to elicit fear and suspicion among a population.[4] News parody or satire can become misinformation if unwary readers judge it to be credible and pass it on as if it were true. The words "misinformation" and "disinformation" have often been associated with the neologism "fake news", which some scholars define as "fabricated information that mimics news media content in form but not in organizational process or intent".[5]

History

The history of misinformation, along with that of disinformation and propaganda, is part of the history of mass communication.[6] Early examples cited in a 2017 article by Robert Darnton[7] are the insults and smears spread among political rivals in Imperial and Renaissance Italy in the form of "pasquinades", anonymous and witty verses named for the Pasquino piazza and "talking statue" in Rome, and in pre-revolutionary France in the form of "canards", printed broadsides that sometimes included an engraving to help convince readers to take their wild tales seriously.

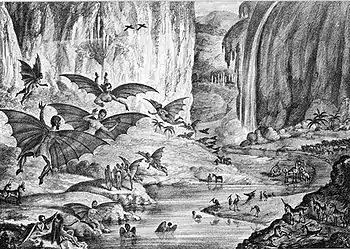

The spread of Johannes Gutenberg's mechanized printing press through Europe and North America increased the opportunities to disseminate English-language misinformation. In 1835, the New York Sun published the first large-scale news hoax, known as the "Great Moon Hoax", a series of six articles claiming to describe life on the Moon, "complete with illustrations of humanoid bat-creatures and bearded blue unicorns".[6] The fast pace and sometimes strife-filled work of mass-producing news broadsheets also led to copies rife with careless factual errors, such as the Chicago Tribune's infamous 1948 headline "Dewey Defeats Truman".

In the so-called Information Age, social networking sites have become a notable vector for the spread of misinformation, "fake news", and propaganda.[8][5][9][10][11] Misinformation spreads more quickly on social media than through traditional media because posts are not subject to regulation or review before publication.[4] These sites let users spread information quickly to other users without the permission of a gatekeeper such as an editor, who might otherwise require confirmation of its truth before allowing its publication. Journalists today are criticized for helping to spread false information on these platforms, but research such as that by Starbird et al.[12] and Arif et al.[13] shows that they also help curb the spread of misinformation on social media by debunking and denying false rumors.

Identification and correction

Information conveyed as credible but later amended can continue to affect people's memory and reasoning even after it has been retracted.[14] Misinformation differs from concepts like rumors because misinformation is inaccurate information that has previously been disproved.[4] According to Anne Mintz, editor of Web of Deception: Misinformation on the Internet, the best way to determine whether information is factual is to use common sense.[15] Mintz advises readers to check whether the information makes sense and whether the founders or reporters of the websites spreading it are biased or have an agenda. Professional journalists and researchers look for the same information on other sites (particularly verified sources such as news channels[16]), since it is more likely to have been reviewed by multiple people and thoroughly researched, providing more concrete details.

Martin Libicki, author of Conquest in Cyberspace: National Security and Information Warfare,[17] noted that the key to dealing with misinformation is for readers to strike a balance in judging what is correct or incorrect. Readers should not be gullible, but neither should they be paranoid that all information is incorrect. Even readers who strike this balance may still believe an error to be true or dismiss factual information as incorrect. According to Libicki, readers' prior beliefs or opinions also affect how they interpret new information. When readers believe something to be true before researching it, they are more likely to believe information that supports these prior beliefs or opinions. This phenomenon may lead readers who are otherwise skilled at evaluating sources and facts to believe misinformation.

According to research, the factors that help people recognize misinformation are their level of education and their information literacy.[18] A person with more knowledge of the subject being investigated, or with more familiarity with how information is researched and presented, is more likely to identify misinformation. Further research reveals that content descriptors have varying effects on people's ability to detect misinformation.[19]

Prior research suggests that it can be very difficult to undo the effects of misinformation once individuals believe it to be true, and that fact-checking can even backfire.[20] Correcting a wrongly held belief is difficult because the misinformation may serve motivational or cognitive needs. Motivational reasons include the desire to arrive at a foregone conclusion, and hence to accept information that supports that conclusion. Cognitively, the misinformation may provide scaffolding for understanding an incident or phenomenon, and thus form part of a person's mental model of it. In this case, the misinformation must be corrected not only by refuting it, but also by providing accurate information that can take its place in the mental model.[21] One proposed approach to the primary prevention of misinformation is to use a distributed consensus mechanism to validate the accuracy of claims, with appropriate flagging or removal of content that is determined to be false or misleading.[22]

Another approach to correcting misinformation is to "inoculate" people against it by delivering it in a weakened form, together with a warning of its dangers and counterarguments that expose the misleading techniques at work. One way to apply this approach is parallel argumentation, in which the flawed logic is transferred to a parallel, if extreme or absurd, situation. This exposes the bad logic without the need for complicated explanations.[23]

Flagging or removing news content that contains false statements by means of algorithmic fact-checkers is becoming the front line in the battle against the spread of misinformation. Computer programs that automatically detect misinformation are only beginning to emerge, but similar algorithms are already in use at Facebook and Google. Algorithms detect such content and alert Facebook users that what they are about to share is likely false, in the hope of reducing the chance that they share it.[24] Likewise, Google displays supplemental information pointing to fact-checking websites when users search for controversial terms.

Causes

Historically, people have relied on journalists and other information professionals to relay facts and truths.[25] Many factors contribute to misinformation, but an underlying one is information literacy. Because information is distributed by many different means, it is often hard for users to question the credibility of what they are seeing. Many online sources of misinformation use techniques to fool users into thinking that their sites are legitimate and that the information they generate is factual. Misinformation is often politically motivated: websites such as USConservativeToday.com have posted false information for political and monetary gain.[26] Misinformation can also distract the public from negative information about a given person or from bigger policy issues, which can then go unremarked while the public is preoccupied with fake news.[24] Besides being shared deliberately for political and monetary gain, misinformation is also spread unintentionally. Advances in digital media have made it easier to share information, although that information is not always accurate. The next sections discuss the role of social media in distributing misinformation, the lack of Internet gatekeepers, the implications of censorship in combating misinformation, inaccurate information from media sources, and competition in news and media.

Social media

Contemporary social media platforms offer fertile ground for the spread of misinformation. The exact mechanisms and motivations behind why misinformation spreads so easily through social media remain unknown.[4] A 2018 study of Twitter determined that, compared to accurate information, false information spread significantly faster, further, deeper, and more broadly.[27] Combating its spread is difficult for two reasons: the profusion of information sources, and the formation of "echo chambers". The profusion of information sources makes the reader's task of weighing the reliability of information more challenging, a difficulty heightened by the untrustworthy social signals that accompany such information.[28] The inclination of people to follow or support like-minded individuals leads to the formation of echo chambers and filter bubbles. With no differing information to counter the untruths or the general agreement within isolated social clusters, some writers argue, the outcome is a dearth, or worse, an absence, of a collective reality.[29] Although social media sites have changed their algorithms to prevent the spread of fake news, the problem persists.[30] Furthermore, research has shown that people may know what the scientific community has established as fact and still refuse to accept it.[31]

Misinformation thrives on social media, which college students use frequently and through which they help spread it.[4] Scholars such as Ghosh and Scott (2018) support this view, indicating that misinformation is "becoming unstoppable".[32] It has also been observed that misinformation and disinformation resurface multiple times on social media sites. One study tracked thirteen rumors on Twitter and found that eleven of them resurfaced multiple times, long after they had first appeared.[33]

Another reason misinformation spreads on social media lies with the users themselves. One study showed that Facebook users most commonly shared misinformation for socially motivated reasons rather than because they took the information seriously.[34] Although users may not be spreading false information maliciously, the misinformation still spreads across the Internet. Research also shows that misinformation introduced in a social setting influences individuals far more than misinformation delivered non-socially.[35]

Twitter is one of the most concentrated platforms for engagement with political fake news. On the platform, 80% of fake news sources are shared by just 0.1% of users, known as "super-sharers". Older, more conservative social media users are also more likely to interact with fake news. On Facebook, adults older than 65 were seven times more likely to share fake news than adults aged 18–29.[27]

Lack of Internet gatekeepers

Because of the decentralized nature and structure of the Internet, writers can easily publish content without being required to subject it to peer review, prove their qualifications, or provide backup documentation. Whereas a book found in a library generally has been reviewed and edited by a second person, Internet sources cannot be assumed to be vetted by anyone other than their authors. They may be produced and posted as soon as the writing is finished.[36] In addition, the presence of trolls and bots used to spread willful misinformation has been a problem for social media platforms.[37] As many as 60 million trolls could be actively spreading misinformation on Facebook.[38]

Censorship

Social media sites such as Facebook and Twitter have had to defend themselves against accusations of censorship for taking down misinformation. In July 2020, a video of Dr. Stella Immanuel claiming that hydroxychloroquine was an effective cure for coronavirus went viral. In the video, Immanuel suggests that there is no need for masks, school closures, or any kind of economic shutdown, asserting that the drug is highly effective in treating those infected with the virus. The video was shared 600,000 times and received nearly 20 million views on Facebook before it was taken down for violating community guidelines on spreading misinformation.[39] The video was also taken down on Twitter overnight, but not before President Donald Trump shared it on his account, which then had over 85 million followers.[39] NIAID director Dr. Anthony Fauci and members of the World Health Organization (WHO) quickly discredited the video, citing larger-scale studies showing that hydroxychloroquine is not an effective treatment for COVID-19, and the FDA cautioned against using it to treat COVID-19 patients following evidence of serious heart problems in patients who had taken the drug.[39] Facebook and Twitter both have policies in place to combat misinformation about COVID-19, and both platforms acted swiftly to enforce them.

Inaccurate information from media sources

A Gallup poll made public in 2016 found that only 32% of Americans trust the mass media "to report the news fully, accurately and fairly", the lowest figure in the history of that poll.[40] An example of bad information from media sources leading to the spread of misinformation occurred in November 2005, when Chris Hansen on Dateline NBC claimed that law enforcement officials estimated 50,000 predators were online at any given moment. The U.S. attorney general at the time, Alberto Gonzales, later repeated the claim. However, the number Hansen used had no backing. Hansen said he received the information from Dateline expert Ken Lanning, but Lanning admitted that he had made up the number 50,000 because there was no solid data. According to Lanning, he used 50,000 because it sounds like a real number, not too big and not too small, and referred to it as a "Goldilocks number". Reporter Carl Bialik says that the number 50,000 is often used in the media to estimate figures when reporters are unsure of the exact data.[41]

Competition in news and media

Because news organizations and websites compete intensely for viewers, there is pressure to release stories to the public quickly. The news media landscape of the 1970s offered American consumers access to a limited but consistent and trusted selection of news offerings, whereas today consumers are confronted with an overabundance of voices online.[24] This explosion of consumer choice allows consumers to pick a news source that matches their preferred agenda, which in turn increases the likelihood that they are misinformed.[24] News media companies broadcast stories 24 hours a day and break the latest news in the hope of taking audience share from their competitors. News is also produced at a pace that does not always allow for fact-checking, or for all of the facts to be collected or released to the media at once, leaving readers or viewers to fill the gaps with their own opinions and possibly leading to the spread of misinformation.[42]

Impact

Misinformation can affect all aspects of life. Allcott, Gentzkow and Yu (2019:6) argue that the diffusion of misinformation through social media is a potential threat to democracy and broader society. Misinformation can also reduce the accuracy of people's information about an event and of their memory of its details.[43] When eavesdropping on conversations, a listener may gather "facts" that are not true, or may hear the message incorrectly and pass the error on to others. On the Internet, one can read content that is presented as factual but that may not have been checked or may be erroneous. In the news, companies may emphasize the speed at which they receive and send information but may not always get the facts right. These developments mean that misinformation will continue to complicate the public's understanding of issues and to serve as a source for belief and attitude formation.[44]

In politics, some view being a misinformed citizen as worse than being an uninformed citizen. Misinformed citizens can state their beliefs and opinions with confidence and in turn affect elections and policies. This type of misinformation arises when speakers are not upfront and straightforward, yet appear both "authoritative and legitimate" on the surface.[8] When information is presented as vague, ambiguous, sarcastic, or partial, receivers are forced to piece it together and make assumptions about what is correct.[45] Aside from political propaganda, misinformation can also be employed in industrial propaganda. Using tools such as advertising, a company can undermine reliable evidence or influence belief through a concerted misinformation campaign. For instance, tobacco companies employed misinformation in the second half of the twentieth century to diminish the reliability of studies that demonstrated the link between smoking and lung cancer.[46] In the medical field, misinformation can directly endanger lives, as seen in negative public perceptions of vaccines or the use of herbs instead of medicines to treat diseases.[8][47] During the COVID-19 pandemic, the spread of misinformation has been shown to cause confusion as well as negative emotions such as anxiety and fear.[48] Misinformation about proper safety measures for preventing infection that contradicts guidance from legitimate institutions such as the World Health Organization can also lead to inadequate protection and may put individuals at risk of exposure.[48][49]

Misinformation has the power to sway public elections and referendums if it gains enough momentum in public discourse. In the lead-up to the 2016 United Kingdom European Union membership referendum, for example, a figure widely circulated by the Vote Leave campaign claimed that the UK would save £350 million a week by leaving the EU, and that the money would be redistributed to the British National Health Service.[50] The UK Statistics Authority later deemed this a "clear misuse of official statistics".[50] The advert, infamously displayed on the side of the Vote Leave campaign bus, did not take into account the UK's budget rebate, and the idea that 100% of the money saved would go to the NHS was unrealistic. A poll published in 2016 by Ipsos MORI found that nearly half of the British public believed this misinformation to be true.[50] Even when information has been shown to be misinformation, it may continue to shape people's attitudes towards a given topic,[40] meaning that misinformation can swing political decisions if it gains enough traction in public discussion.

Websites have been created to help people discern fact from fiction. For example, FactCheck.org has a mission to fact-check the media, especially politicians' speeches and stories going viral on the Internet. The site also includes a forum where people can openly ask about information, whether in the media or on the Internet, that they are unsure is true.[51] Similar sites let users copy and paste a questionable claim into a search engine, after which the site investigates its truthfulness.[52] Major online platforms such as Facebook and Google have attempted to add automatic fact-checking programs to their sites, and have given users the option to flag information that they think is false.[52] One way fact-checking programs find misinformation is by analyzing the language and syntax of news stories. Another is to search for existing information on the subject and compare it with new broadcasts being put online.[53] Other sites, such as Wikipedia and Snopes, are also widely used resources for verifying information.

Some scholars and activists are pioneering a movement to eliminate misinformation, disinformation, and information pollution in the digital world. The theory they are developing, "information environmentalism", has become part of the curriculum at some universities and colleges.[54][55]

See also

- List of common misconceptions

- List of fake news websites

- List of satirical news websites

- Character assassination

- Defamation (including slander)

- Counter Misinformation Team

- Disinformation

- Factoid

- Fallacy

- Gossip

- Junk science

- Flat earth

- Euromyth

- Quotation

- Propaganda

- Pseudoscience

- Rumor

- Social engineering (in political science and cybercrime)

- Persuasion

References

- Merriam-Webster Dictionary (19 August 2020). "Misinformation". Retrieved 19 August 2020.

- Merriam-Webster Dictionary (19 August 2020). "Disinformation". Retrieved 19 August 2020.

- Woolley, Samuel C.; Howard, Philip N. (2016). "Political Communication, Computational Propaganda, and Autonomous Agents". International Journal of Communication. 10: 4882–4890. Archived from the original on 2019-10-22. Retrieved 2019-10-22.

- Chen, Xinran; Sin, Sei-Ching Joanna; Theng, Yin-Leng; Lee, Chei Sian (September 2015). "Why Students Share Misinformation on Social Media: Motivation, Gender, and Study-level Differences". The Journal of Academic Librarianship. 41 (5): 583–592. doi:10.1016/j.acalib.2015.07.003.

- Lazer, David M. J.; Baum, Matthew A.; Benkler, Yochai; Berinsky, Adam J.; Greenhill, Kelly M.; Menczer, Filippo; Metzger, Miriam J.; Nyhan, Brendan; Pennycook, Gordon; Rothschild, David; Schudson, Michael; Sloman, Steven A.; Sunstein, Cass R.; Thorson, Emily A.; Watts, Duncan J.; Zittrain, Jonathan L. (2018). "The science of fake news". Science. 359 (6380): 1094–1096. Bibcode:2018Sci...359.1094L. doi:10.1126/science.aao2998. PMID 29590025. S2CID 4410672.

- "A short guide to the history of 'fake news' and disinformation". International Center for Journalists. Archived from the original on 2019-02-25. Retrieved 2019-02-24.

- "The True History of Fake News". The New York Review of Books. 2017-02-13. Archived from the original on 2019-02-05. Retrieved 2019-02-24.

- Stawicki, Stanislaw; Firstenberg, Michael; Papadimos, Thomas. "The Growing Role of Social Media in International Health Security: The Good, the Bad, and the Ugly". Global Health Security. 1 (1): 341–357.

- Vosoughi, Soroush; Roy, Deb; Aral, Sinan (2018-03-09). "The spread of true and false news online" (PDF). Science. 359 (6380): 1146–1151. Bibcode:2018Sci...359.1146V. doi:10.1126/science.aap9559. PMID 29590045. S2CID 4549072. Archived from the original (PDF) on 2019-04-29. Retrieved 2019-08-21.

- Tucker, Joshua A.; Guess, Andrew; Barbera, Pablo; Vaccari, Cristian; Siegel, Alexandra; Sanovich, Sergey; Stukal, Denis; Nyhan, Brendan. "Social Media, Political Polarization, and Political Disinformation: A Review of the Scientific Literature". Hewlett Foundation White Paper. Archived from the original on 2019-03-06. Retrieved 2019-03-05.

- Machado, Caio; Kira, Beatriz; Narayanan, Vidya; Kollanyi, Bence; Howard, Philip (2019). "A Study of Misinformation in WhatsApp groups with a focus on the Brazilian Presidential Elections". Companion Proceedings of the 2019 World Wide Web Conference on – WWW '19. New York: ACM Press: 1013–1019. doi:10.1145/3308560.3316738. ISBN 978-1450366755. S2CID 153314118.

- Starbird, Kate; Dailey, Dharma; Mohamed, Owla; Lee, Gina; Spiro, Emma (2018). "Engage Early, Correct More: How Journalists Participate in False Rumors Online during Crisis Events". Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI '18). doi:10.1145/3173574.3173679. S2CID 5046314. Retrieved 2019-02-24.

- Arif, Ahmer; Robinson, John; Stanck, Stephanie; Fichet, Elodie; Townsend, Paul; Worku, Zena; Starbird, Kate (2017). "A Closer Look at the Self-Correcting Crowd: Examining Corrections in Online Rumors" (PDF). Proceedings of the 2017 ACM Conference on Computer Supported Cooperative Work and Social Computing (CSCW '17): 155–169. doi:10.1145/2998181.2998294. ISBN 978-1450343350. S2CID 15167363. Archived (PDF) from the original on 26 February 2019. Retrieved 25 February 2019.

- Ecker, Ullrich K.H.; Lewandowsky, Stephan; Cheung, Candy S.C.; Maybery, Murray T. (November 2015). "He did it! She did it! No, she did not! Multiple causal explanations and the continued influence of misinformation" (PDF). Journal of Memory and Language. 85: 101–115. doi:10.1016/j.jml.2015.09.002.

- Mintz, Anne. "The Misinformation Superhighway?". PBS. Archived from the original on 2 April 2013. Retrieved 26 February 2013.

- Jain, Suchita; Sharma, Vanya; Kaushal, Rishabh (September 2016). "Towards automated real-time detection of misinformation on Twitter". 2016 International Conference on Advances in Computing, Communications and Informatics (ICACCI). IEEE Conference Publication. pp. 2015–2020. doi:10.1109/ICACCI.2016.7732347. ISBN 978-1-5090-2029-4. S2CID 17767475.

- Libicki, Martin (2007). Conquest in Cyberspace: National Security and Information Warfare. New York: Cambridge University Press. pp. 51–55. ISBN 978-0521871600.

- Khan, M. Laeeq; Idris, Ika Karlina (2019-02-11). "Recognise misinformation and verify before sharing: a reasoned action and information literacy perspective". Behaviour & Information Technology. 38 (12): 1194–1212. doi:10.1080/0144929x.2019.1578828. ISSN 0144-929X. S2CID 86681742.

- Caramancion, Kevin Matthe (September 2020). "Understanding the Impact of Contextual Clues in Misinformation Detection". 2020 IEEE International IOT, Electronics and Mechatronics Conference (IEMTRONICS): 1–6. doi:10.1109/IEMTRONICS51293.2020.9216394. ISBN 978-1-7281-9615-2. S2CID 222297695.

- Ecker, Ullrich K. H.; Lewandowsky, Stephan; Chadwick, Matthew (2020-04-22). "Can Corrections Spread Misinformation to New Audiences? Testing for the Elusive Familiarity Backfire Effect". Cognitive Research: Principles and Implications. 5 (1): 41. doi:10.31219/osf.io/et4p3. PMC 7447737. PMID 32844338.

- Jerit, Jennifer; Zhao, Yangzi (2020). "Political Misinformation". Annual Review of Political Science. 23: 77–94. doi:10.1146/annurev-polisci-050718-032814.

- Plaza, Mateusz; Paladino, Lorenzo (2019). "The use of distributed consensus algorithms to curtail the spread of medical misinformation". International Journal of Academic Medicine. 5 (2): 93–96. doi:10.4103/IJAM.IJAM_47_19. S2CID 201803407.

- Cook, John (May–June 2020). "Using Humor And Games To Counter Science Misinformation". Skeptical Inquirer. Vol. 44 no. 3. Amherst, New York: Center for Inquiry. pp. 38–41. Archived from the original on 31 December 2020. Retrieved 31 December 2020.

- Lewandowsky, Stephan; Ecker, Ullrich K.H.; Cook, John (December 2017). "Beyond Misinformation: Understanding and Coping with the "Post-Truth" Era". Journal of Applied Research in Memory and Cognition. 6 (4): 353–369. doi:10.1016/j.jarmac.2017.07.008. ISSN 2211-3681.

- Calvert, Philip (December 2002). "Web of Deception: Misinformation on the Internet". The Electronic Library. 20 (6): 521. doi:10.1108/el.2002.20.6.521.7. ISSN 0264-0473.

- Marwick, Alice E. (2013-01-31). A Companion to New Media Dynamics. Wiley-Blackwell. pp. 355–364. doi:10.1002/9781118321607.ch23. ISBN 978-1-118-32160-7.

- Swire-Thompson, Briony; Lazer, David (2020). "Public Health and Online Misinformation: Challenges and Recommendations". Annual Review of Public Health. 41: 433–451. doi:10.1146/annurev-publhealth-040119-094127. PMID 31874069.

- Messerole, Chris (2018-05-09). "How misinformation spreads on social media – And what to do about it". Brookings Institution. Archived from the original on 25 February 2019. Retrieved 24 February 2019.

- Benkler, Y. (2017). "Study: Breitbart-led rightwing media ecosystem altered broader media agenda". Archived from the original on 4 June 2018. Retrieved 8 June 2018.

- Allcott, Hunt (October 2018). "Trends in the Diffusion of Misinformation on Social Media" (PDF). Stanford Education. arXiv:1809.05901. Bibcode:2018arXiv180905901A. Archived (PDF) from the original on 2019-07-28. Retrieved 2019-05-10.

- Krause, Nicole M.; Scheufele, Dietram A. (2019-04-16). "Science audiences, misinformation, and fake news". Proceedings of the National Academy of Sciences. 116 (16): 7662–7669. doi:10.1073/pnas.1805871115. ISSN 0027-8424. PMC 6475373. PMID 30642953.

- Allcott, Hunt; Gentzkow, Matthew; Yu, Chuan (2019-04-01). "Trends in the diffusion of misinformation on social media". Research & Politics. 6 (2): 2053168019848554. doi:10.1177/2053168019848554. ISSN 2053-1680. S2CID 52291737.

- Shin, Jieun; Jian, Lian; Driscoll, Kevin; Bar, François (June 2018). "The diffusion of misinformation on social media: Temporal pattern, message, and source". Computers in Human Behavior. 83: 278–287. doi:10.1016/j.chb.2018.02.008. ISSN 0747-5632.

- Chen, Xinran; Sin, Sei-Ching Joanna; Theng, Yin-Leng; Lee, Chei Sian (2015). "Why Do Social Media Users Share Misinformation?". Proceedings of the 15th ACM/IEEE-CE on Joint Conference on Digital Libraries – JCDL '15. New York: ACM Press: 111–114. doi:10.1145/2756406.2756941. ISBN 978-1-4503-3594-2. S2CID 15983217.

- Gabbert, Fiona; Memon, Amina; Allan, Kevin; Wright, Daniel B. (September 2004). "Say it to my face: Examining the effects of socially encountered misinformation" (PDF). Legal and Criminological Psychology. 9 (2): 215–227. doi:10.1348/1355325041719428. ISSN 1355-3259.

- Stapleton, Paul (2003). "Assessing the quality and bias of web-based sources: implications for academic writing". Journal of English for Academic Purposes. 2 (3): 229–245. doi:10.1016/S1475-1585(03)00026-2.

- Milman, Oliver (2020-02-21). "Revealed: quarter of all tweets about climate crisis produced by bots". The Guardian. ISSN 0261-3077. Archived from the original on 2020-02-22. Retrieved 2020-02-23.

- Massey, Douglas S.; Iyengar, Shanto (2019-04-16). "Scientific communication in a post-truth society". Proceedings of the National Academy of Sciences. 116 (16): 7656–7661. doi:10.1073/pnas.1805868115. ISSN 0027-8424. PMC 6475392. PMID 30478050.

- "Stella Immanuel - the doctor behind unproven coronavirus cure claim". BBC News. 2020-07-29. Retrieved 2020-11-23.

- Marwick, Alice; Lewis, Rebecca (2017). Media Manipulation and Disinformation Online. New York: Data & Society Research Institute. pp. 40–45.

- Gladstone, Brooke (2012). The Influencing Machine. New York: W. W. Norton & Company. pp. 49–51. ISBN 978-0393342468.

- Croteau; et al. "Media Technology" (PDF): 285–321. Archived (PDF) from the original on January 2, 2013. Retrieved March 21, 2013.

- Bodner, Glen E.; Musch, Elisabeth; Azad, Tanjeem (2009). "Reevaluating the potency of the memory conformity effect". Memory & Cognition. 37 (8): 1069–1076. doi:10.3758/mc.37.8.1069. ISSN 0090-502X. PMID 19933452.

- Southwell, Brian G.; Thorson, Emily A.; Sheble, Laura (2018). Misinformation and Mass Audiences. University of Texas Press. ISBN 978-1477314586.

- Barker, David (2002). Rushed to Judgement: Talk Radio, Persuasion, and American Political Behavior. New York: Columbia University Press. pp. 106–109.

- O'Connor, Cailin; Weatherall, James Owen (2019). The Misinformation Age: How False Beliefs Spread. New Haven: Yale University Press. pp. 10. ISBN 978-0300234015.

- Sinha, P.; Shaikh, S.; Sidharth, A. (2019). India Misinformed: The True Story. Harper Collins. ISBN 978-9353028381.

- Bratu, Sofia (May 24, 2020). "The Fake News Sociology of COVID-19 Pandemic Fear: Dangerously Inaccurate Beliefs, Emotional Contagion, and Conspiracy Ideation". Linguistic and Philosophical Investigations. 19: 128–134. doi:10.22381/LPI19202010. Retrieved 7 November 2020.

- "Misinformation on coronavirus is proving highly contagious". AP NEWS. 2020-07-29. Retrieved 2020-11-23.

- "The misinformation that was told about Brexit during and after the referendum". The Independent. 2018-07-27. Retrieved 2020-11-23.

- "Ask FactCheck". www.factcheck.org. Archived from the original on 2016-03-31. Retrieved 2016-03-31.

- Fernandez, Miriam; Alani, Harith (2018). "Online Misinformation" (PDF). Companion of the Web Conference 2018 on the Web Conference 2018 – WWW '18. New York: ACM Press: 595–602. doi:10.1145/3184558.3188730. ISBN 978-1-4503-5640-4. S2CID 13799324. Archived (PDF) from the original on 2019-04-11. Retrieved 2020-02-13.

- Zhang, Chaowei; Gupta, Ashish; Kauten, Christian; Deokar, Amit V.; Qin, Xiao (December 2019). "Detecting fake news for reducing misinformation risks using analytics approaches". European Journal of Operational Research. 279 (3): 1036–1052. doi:10.1016/j.ejor.2019.06.022. ISSN 0377-2217.

- "Info-Environmentalism: An Introduction". Archived from the original on 2018-07-03. Retrieved 2018-09-28.

- "Information Environmentalism". Digital Learning and Inquiry (DLINQ). 2017-12-21. Archived from the original on 2018-09-28. Retrieved 2018-09-28.

Further reading


- Machado, Caio; Kira, Beatriz; Narayanan, Vidya; Kollanyi, Bence; Howard, Philip (2019). "A Study of Misinformation in WhatsApp groups with a focus on the Brazilian Presidential Elections". Companion Proceedings of the 2019 World Wide Web Conference on – WWW '19. New York: ACM Press: 1013–1019. doi:10.1145/3308560.3316738. ISBN 978-1450366755. S2CID 153314118.

- Allcott, H.; Gentzkow, M. (2017). "Social Media and Fake News in the 2016 Election". Journal of Economic Perspectives. 31 (2): 211–236. doi:10.1257/jep.31.2.211. S2CID 32730475.

- Baillargeon, Normand (4 January 2008). A short course in intellectual self-defense. Seven Stories Press. ISBN 978-1-58322-765-7. Retrieved 22 June 2011.

- Bakir, V.; McStay, A. (2017). "Fake News and The Economy of Emotions: Problems, causes, solutions". Digital Journalism. 6: 154–175. doi:10.1080/21670811.2017.1345645. S2CID 157153522.

- Cerf, Christopher; Navasky, Victor (1984). The Experts Speak: The Definitive Compendium of Authoritative Misinformation. Pantheon Books.

- Cook, John; Lewandowsky, Stephan; Ecker, Ullrich K. H. (2017-05-05). "Neutralizing misinformation through inoculation: Exposing misleading argumentation techniques reduces their influence". PLOS One. 12 (5): e0175799. Bibcode:2017PLoSO..1275799C. doi:10.1371/journal.pone.0175799. PMC 5419564. PMID 28475576.

- Murphy, Christopher (2005). Competitive Intelligence: Gathering, Analysing And Putting It to Work. Gower Publishing, Ltd. pp. 186–189. ISBN 0-566-08537-2. A case study of misinformation arising from simple error.

- O'Connor, Cailin; Weatherall, James Owen (September 2019). "Why We Trust Lies: The most effective misinformation starts with seeds of truth". Scientific American. 321 (3): 54–61.

- Strässler, Jürg (1982). Idioms in English: A Pragmatic Analysis. Gunter Narr Verlag. pp. 43–44. ISBN 3-87808-971-6.

External links

- Comic: Fake News Can Be Deadly. Here's How To Spot It (audio tutorial, graphic tutorial)