Recursive Internetwork Architecture

The Recursive InterNetwork Architecture (RINA) is a new computer network architecture proposed as an alternative to the architecture of the currently mainstream Internet protocol suite. RINA's fundamental principles are that computer networking is just Inter-Process Communication or IPC, and that layering should be done based on scope/scale, with a single recurring set of protocols, rather than based on function, with specialized protocols. The protocol instances in one layer interface with the protocol instances on higher and lower layers via new concepts and entities that effectively reify networking functions currently specific to protocols like BGP, OSPF and ARP. In this way, RINA claims to support features like mobility, multihoming and Quality of Service without the need for additional specialized protocols like RTP and UDP, as well as to allow simplified network administration without the need for concepts like autonomous systems and NAT.

Background

The principles behind RINA were first presented by John Day in his 2008 book Patterns in Network Architecture: A Return to Fundamentals.[1] The work is a fresh start that takes into account the lessons learned in the 35 years of TCP/IP’s existence, the lessons of OSI’s failure, and the lessons of other network technologies of the past few decades, such as CYCLADES, DECnet, and Xerox Network Systems.

RINA starts from the following problems, which do not appear to have practical or compromise-free solutions in current network architectures, especially the Internet protocol suite and its functional layering as depicted in Figure 1:

- Transmission complexity: the separation of IP and TCP results in inefficiency, with the MTU discovery performed to prevent IP fragmentation being the clearest symptom.

- Performance: TCP itself carries rather high overhead with its handshake, which also causes vulnerabilities such as SYN floods. Also, TCP relies on packet dropping to throttle itself and avoid congestion, meaning its congestion control is purely reactive, not proactive or preventive. This interacts badly with large buffers, leading to bufferbloat.[2]

- Multihoming: the IP address and port number are too low-level to identify an application in two different networks. DNS doesn't solve this because hostnames must resolve to a single IP address and port number combination, making them aliases instead of identities. Neither does LISP, because i) it still uses the locator, which is an IP address, for routing, and ii) it is based on a false distinction, in that all entities in a scope are located by their identifiers to begin with;[3] in addition, it also introduces scalability problems of its own.[4]

- Mobility: the IP address and port number are also too low-level to identify an application as it moves between networks, resulting in complications for mobile devices such as smartphones. Mobile IP is offered as a solution, but in practice it shifts the problem entirely to the care-of address and introduces an IP tunnel, with attendant complexity.

- Management: the same low-level nature of the IP address encourages multiple addresses or even address ranges to be allocated to single hosts,[5] putting pressure on allocation and accelerating exhaustion. NAT only delays address exhaustion and potentially introduces even more problems. At the same time, functional layering of the Internet protocol suite's architecture leaves room for only two scopes, complicating subdivision of administration of the Internet and requiring the artificial notion of autonomous systems. OSPF and IS-IS have relatively few problems, but do not scale well, forcing usage of BGP for larger networks and inter-domain routing.

- Security: the nature of the IP address space itself results in frail security, since there is no true configurable policy for adding or removing IP addresses other than physically preventing attachment. TLS and IPsec provide solutions, but with accompanying complexity. Firewalls and blacklists can be overwhelmed and therefore do not scale. "[...] experience has shown that it is difficult to add security to a protocol suite unless it is built into the architecture from the beginning."[6]

Though these problems are far more acutely visible today, there have been precedents and cases almost right from the beginning of the ARPANET, the environment in which the Internet protocol suite was designed:

1972: Multihoming not supported by the ARPANET

In 1972, Tinker Air Force Base[7] wanted connections to two different IMPs for redundancy. ARPANET designers realized that they couldn't support this feature because host addresses were the addresses of the IMP port number the host was connected to (borrowing from telephony). To the ARPANET, two interfaces of the same host had different addresses; in other words, the address was too low-level to identify a host.

1978: TCP split from IP

Initial TCP versions performed the error and flow control (current TCP) and relaying and multiplexing (IP) functions in the same protocol. In 1978 TCP was split from IP even though the two layers had the same scope. By 1987, the networking community was well aware of IP fragmentation's problems, to the point of considering it harmful.[8] However, it was not understood as a symptom that TCP and IP were interdependent.

1981: Watson's fundamental results ignored

Richard Watson in 1981 provided a fundamental theory of reliable transport[9] whereby connection management requires only timers bounded by a small factor of the Maximum Packet Lifetime (MPL). Based on this theory, Watson et al. developed the Delta-t protocol,[10] which allows a connection's state to be determined simply by bounding three timers, with no handshaking. TCP, by contrast, uses both explicit handshaking and more limited timer-based management of the connection's state.

1983: Internetwork layer lost

Early in 1972 the International Networking Working Group (INWG) was created to bring together the nascent network research community. One of its early tasks was to vote on an international network transport protocol, which was approved in 1976.[11] Remarkably, the selected option, as well as all the other candidates, had an architecture composed of three layers of increasing scope: data link (to handle different types of physical media), network (to handle different types of networks) and internetwork (to handle a network of networks), each layer with its own address space. When TCP/IP was introduced it ran at the internetwork layer on top of NCP. But when NCP was shut down, TCP/IP took the network role and the internetwork layer was lost.[12] This explains the need for autonomous systems and NAT today, to partition and reuse ranges of the IP address space to facilitate administration.

1983: First opportunity to fix addressing missed

The need for an address at a higher level than the IP address had been well understood since the mid-1970s. However, application names were not introduced; instead, DNS was designed and deployed, and well-known ports continued to be used to identify applications. The advent of the web and HTTP created a need for application names, leading to URLs. URLs, however, tie each application instance to a physical interface of a computer and a specific transport connection, since the URL contains the DNS name of an IP interface and a TCP port number, pushing the multihoming and mobility problems onto applications.

1986: Congestion collapse takes the Internet by surprise

Though the problem of congestion control in datagram networks had been known since the 1970s and early 1980s,[13][14] the congestion collapse in 1986 caught the Internet by surprise. What is worse, the adopted congestion control (the Ethernet congestion avoidance scheme, with a few modifications) was put in TCP.

1988: Network management takes a step backward

In 1988 the IAB recommended using SNMP as the initial network management protocol for the Internet, with a later transition to the object-oriented approach of CMIP.[15] SNMP was a step backwards in network management, justified as a temporary measure while the required more sophisticated approaches were implemented, but the transition never happened.

1992: Second opportunity to fix addressing missed

In 1992 the IAB produced a series of recommendations to resolve the scaling problems of the IPv4-based Internet: address space consumption and routing information explosion. Three options were proposed: introduce CIDR to mitigate the problem; design the next version of IP (IPv7) based on CLNP; or continue the research into naming, addressing and routing.[16] CLNP was an OSI-based protocol that addressed nodes instead of interfaces, solving the old multihoming problem dating back to the ARPANET, and allowing for better routing information aggregation. CIDR was introduced, but the IETF did not accept an IPv7 based on CLNP. The IAB reconsidered its decision and the IPng process started, culminating in IPv6. One of the rules for IPng was not to change the semantics of the IP address, which continues to name the interface, perpetuating the multihoming problem.[5]

Overview

RINA is the result of an effort to work out general principles in computer networking that apply in all situations. RINA is the specific architecture, implementation, testing platform and ultimately deployment of the model informally known as the IPC model,[17] although it also deals with concepts and results that apply to any distributed application, not just to networking.

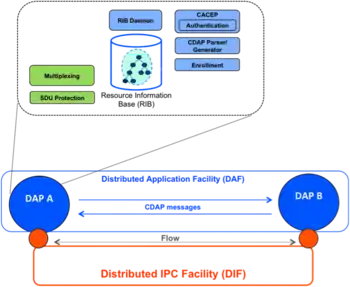

The basic entity of RINA is the Distributed Application Process or DAP, which frequently corresponds to a process on a host. Two or more DAPs constitute a Distributed Application Facility or DAF, as illustrated in Figure 3. These DAPs communicate using the Common Distributed Application Protocol or CDAP, exchanging structured data in the form of objects. These objects are structured in a Resource Information Base or RIB, which provides a naming schema and a logical organization to them. CDAP provides six basic operations on a remote DAP's objects: create, delete, read, write, start and stop.
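The six operations can be pictured with a small in-memory model. The following is a minimal sketch, not CDAP itself: it applies the operations to a local object store rather than sending messages to a remote DAP, and the class names, the `running` flag and the object name used in the example are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Any, Dict


@dataclass
class RibObject:
    """One named object in a Resource Information Base (illustrative)."""
    value: Any = None
    running: bool = False


@dataclass
class Rib:
    """Toy RIB: a flat mapping from object names to objects."""
    objects: Dict[str, RibObject] = field(default_factory=dict)

    # The six operations named in the text, applied locally.
    def create(self, name: str, value: Any = None) -> None:
        if name in self.objects:
            raise KeyError(f"object already exists: {name}")
        self.objects[name] = RibObject(value)

    def delete(self, name: str) -> None:
        del self.objects[name]

    def read(self, name: str) -> Any:
        return self.objects[name].value

    def write(self, name: str, value: Any) -> None:
        self.objects[name].value = value

    def start(self, name: str) -> None:
        self.objects[name].running = True

    def stop(self, name: str) -> None:
        self.objects[name].running = False


rib = Rib()
rib.create("/dif/neighbors/ipcp-b", {"address": 17})
rib.write("/dif/neighbors/ipcp-b", {"address": 18})
rib.start("/dif/neighbors/ipcp-b")
print(rib.read("/dif/neighbors/ipcp-b"))  # {'address': 18}
```

In a real DAF each call would travel as a CDAP message and be applied to the peer's RIB; the sketch only shows that one small, fixed set of operations over named objects is the whole interface.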

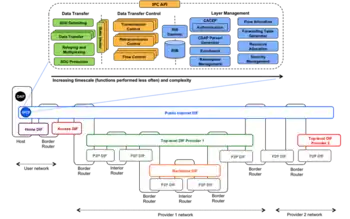

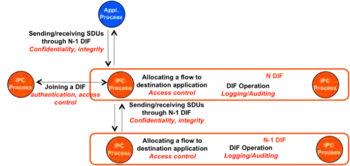

In order to exchange information, DAPs need an underlying facility that provides communication services to them. This facility is another DAF, called a Distributed IPC Facility or DIF, whose task is to provide and manage IPC services over a certain scope. The DAPs of a DIF are called IPC Processes or IPCPs. They have the same generic DAP structure shown in Figure 3, plus some specific tasks to provide and manage IPC. These tasks, as shown in Figure 4, can be divided into three categories: data transfer, data transfer control and layer management. The categories are ordered by increasing complexity and decreasing frequency, with data transfer being the simplest and most frequent, layer management being the most complex and least frequent, and data transfer control in between.

DIFs, being DAFs, in turn use other underlying DIFs themselves, going all the way down to the physical layer DIF controlling the wires and jacks. This is where the recursion of RINA comes from. As shown in Figure 4, RINA networks are usually structured in DIFs of increasing scope. Figure 5 shows an example of how the Web could be structured with RINA: the highest layer is the one closest to applications, corresponding to email or websites; the lowest layers aggregate and multiplex the traffic of the higher layers, corresponding to ISP backbones. Multi-provider DIFs (such as the public Internet or others) float on top of the ISP layers. In this model, three types of systems are distinguished: hosts, which contain DAPs; interior routers, internal to a layer; and border routers, at the edges of a layer, where packets go up or down one layer.
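The recursion can be illustrated with a toy model in which every layer runs the same code and differs only in its name and in which layer sits below it. This is a sketch under simplifying assumptions: the `Layer` class, the bracketed text headers and the three layer names are invented for illustration and do not correspond to any RINA PDU format.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Layer:
    """A layer of some scope; `lower` is the layer it relies on, if any."""
    name: str
    lower: Optional["Layer"] = None

    def send(self, sdu: bytes) -> bytes:
        # Every layer does the same thing: wrap the SDU in its own header,
        # then hand the resulting PDU to the layer below as that layer's SDU.
        pdu = f"[{self.name}]".encode() + sdu
        if self.lower is None:
            return pdu  # bottom of the recursion: this goes onto the medium
        return self.lower.send(pdu)


link = Layer("link")
isp = Layer("isp", lower=link)
internet = Layer("internet", lower=isp)
print(internet.send(b"hello"))  # b'[link][isp][internet]hello'
```

Adding or removing a layer changes only how the objects are wired together, not the code that each layer runs.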

A DIF enables a DAP to allocate flows to one or more DAPs, by just providing the names of the targeted DAPs and the desired QoS parameters such as bounds on data loss and latency, ordered or out-of-order delivery, reliability, and so forth. DAPs may not trust the DIF they are using and may therefore protect their data before writing it to the flow via an SDU protection module, for example by encrypting it. All RINA layers have the same structure and components and provide the same functions; they differ only in their configurations or policies.[18] This mirrors the separation of mechanism and policy in operating systems.
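Flow allocation by name and QoS can be sketched as follows. This is an illustrative model only: `QosRequest`, `ServiceClass`, `FlowAllocator`, the field names and the first-match selection rule are assumptions made for the example, not part of any RINA specification.

```python
from dataclasses import dataclass
from typing import List, Optional, Set, Tuple


@dataclass(frozen=True)
class QosRequest:
    """What the requesting DAP asks for (field names are illustrative)."""
    max_loss: float         # tolerated fraction of lost SDUs
    max_latency_ms: float   # upper bound on delay
    in_order: bool          # whether ordered delivery is required


@dataclass(frozen=True)
class ServiceClass:
    """One class of service that a DIF is configured to offer."""
    name: str
    loss: float
    latency_ms: float
    in_order: bool

    def satisfies(self, request: QosRequest) -> bool:
        return (self.loss <= request.max_loss
                and self.latency_ms <= request.max_latency_ms
                and (self.in_order or not request.in_order))


class FlowAllocator:
    """Toy stand-in for the part of a DIF that hands out flows."""

    def __init__(self, classes: List[ServiceClass]) -> None:
        self.classes = classes
        self.registered: Set[str] = set()
        self._next_port = 1

    def register(self, app_name: str) -> None:
        self.registered.add(app_name)

    def allocate_flow(self, destination: str,
                      request: QosRequest) -> Optional[Tuple[int, str]]:
        """Return (port id, chosen class name), or None if the request fails."""
        if destination not in self.registered:
            return None
        for service_class in self.classes:
            if service_class.satisfies(request):
                port = self._next_port
                self._next_port += 1
                return port, service_class.name
        return None


dif = FlowAllocator([
    ServiceClass("best-effort", loss=0.05, latency_ms=200.0, in_order=False),
    ServiceClass("reliable", loss=0.0, latency_ms=80.0, in_order=True),
])
dif.register("video-server")
print(dif.allocate_flow("video-server", QosRequest(0.01, 100.0, True)))  # (1, 'reliable')
```

The caller names the destination application and states its requirements; it never sees an address, only a local port identifier for the flow.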

In short, RINA keeps the concepts of PDU and SDU, but instead of layering by function, it layers by scope. Instead of considering that different scales have different characteristics and attributes, it considers that all communication has fundamentally the same behavior, just with different parameters. Thus, RINA is an attempt to conceptualize and parameterize all aspects of communication, thereby eliminating the need for specific protocols and concepts and reusing as much theory as possible.

Naming, addressing, routing, mobility and multihoming

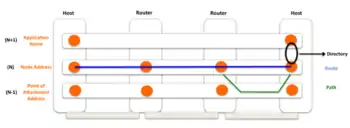

As explained above, the IP address is too low-level an identifier on which to base multihoming and mobility efficiently, and it requires routing tables to be bigger than necessary. RINA literature follows the general theory of Jerry Saltzer on addressing and naming. According to Saltzer, four elements need to be identified: applications, nodes, attachment points and paths.[19] An application can run in one or more nodes and should be able to move from one node to another without losing its identity in the network. A node can be connected to a pair of attachment points and should be able to move between them without losing its identity in the network. A directory maps an application name to a node address, and routes are sequences of node addresses and attachment points. These points are illustrated in Figure 6.

Saltzer took his model from operating systems, but the RINA authors concluded it could not be applied cleanly to internetworks, which can have more than one path between the same pair of nodes (let alone whole networks). Their solution is to model routes as sequences of nodes: at each hop, the respective node chooses the most appropriate attachment point to forward the packet to the next node. Therefore, RINA routes in a two-step process: first, the route as a sequence of node addresses is calculated, and then, for each hop, an appropriate attachment point is selected. These are the steps to generate the forwarding table: forwarding is still performed with a single lookup. Moreover, the last step can be performed more frequently to exploit multihoming for load balancing.
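The two steps can be sketched in a few lines. The example below is a simplified illustration, not RINA's routing algorithm: it assumes unweighted shortest paths found by breadth-first search for step one and a "least loaded" rule for step two, and the node names, interface names and load figures are invented.

```python
from collections import deque
from typing import Dict, List


def next_hops(adjacency: Dict[str, List[str]], source: str) -> Dict[str, str]:
    """Step 1: route over node addresses; returns destination -> next-hop node."""
    first_hop: Dict[str, str] = {}
    visited = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adjacency[node]:
            if neighbor in visited:
                continue
            visited.add(neighbor)
            # A direct neighbor is its own first hop; anything farther away
            # inherits the first hop of the node it was reached through.
            first_hop[neighbor] = neighbor if node == source else first_hop[node]
            queue.append(neighbor)
    return first_hop


def forwarding_table(hops: Dict[str, str],
                     attachments: Dict[str, List[str]],
                     load: Dict[str, float]) -> Dict[str, str]:
    """Step 2: for each next-hop node, pick one attachment point (least loaded)."""
    return {dest: min(attachments[hop], key=lambda ap: load[ap])
            for dest, hop in hops.items()}


adjacency = {"A": ["B", "C"], "B": ["A", "D"], "C": ["A", "D"], "D": ["B", "C"]}
# Node A reaches neighbor B through two attachment points (it is multihomed).
attachments = {"B": ["eth0", "wlan0"], "C": ["eth1"]}

hops = next_hops(adjacency, "A")
print(forwarding_table(hops, attachments, {"eth0": 0.9, "wlan0": 0.2, "eth1": 0.1}))
# {'B': 'wlan0', 'C': 'eth1', 'D': 'wlan0'}
print(forwarding_table(hops, attachments, {"eth0": 0.1, "wlan0": 0.2, "eth1": 0.1}))
# {'B': 'eth0', 'C': 'eth1', 'D': 'eth0'}
```

The second call reruns only step two with new load figures: the node-level routes in `hops` are untouched, while traffic towards B and D shifts to the other attachment point. The result is still a flat table, so forwarding remains a single lookup.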

With this naming structure, mobility and multihoming are inherently supported[20] if the names have carefully chosen properties:

- application names are location-independent to allow an application to move around;

- node addresses are location-dependent but route-independent; and

- attachment points are by nature route-dependent.

Applying this naming scheme to RINA with its recursive layers allows the conclusion that mapping application names to node addresses is analogous to mapping node addresses to attachment points. Put simply, at any layer, nodes in the layer above can be seen as applications while nodes in the layer below can be seen as attachment points.
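One way to picture this analogy is as the same directory lookup repeated once per layer. The sketch below is purely illustrative: the `resolve` function, the use of one dictionary per layer and all the names in the example are assumptions made for the sake of the illustration.

```python
from typing import Dict, List


def resolve(name: str, directories: List[Dict[str, str]]) -> List[str]:
    """Walk down the layers, applying the same name -> address mapping at each.

    `directories` is ordered from the highest layer to the lowest; the
    address found in one layer is the name looked up in the layer below.
    """
    chain = [name]
    for directory in directories:
        name = directory[name]
        chain.append(name)
    return chain


directories = [
    {"web-server": "node-7"},  # application name -> node address
    {"node-7": "poa-3"},       # node address -> attachment point
]
print(resolve("web-server", directories))  # ['web-server', 'node-7', 'poa-3']

# The node moves to another attachment point: only the lowest mapping changes.
directories[1]["node-7"] = "poa-9"
print(resolve("web-server", directories))  # ['web-server', 'node-7', 'poa-9']
```

After the move, the application name and the node address are unchanged, which is the property the three naming rules above are meant to secure.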

Protocol design

The Internet protocol suite also generally dictates that protocols be designed in isolation, without regard to whether aspects have been duplicated in other protocols and, therefore, whether these can be made into a policy. RINA tries to avoid this by applying the separation of mechanism and policy in operating systems to protocol design.[21] Each DIF uses different policies to provide different classes of quality of service and adapt to the characteristics of either the physical media, if the DIF is low-level, or the applications, if the DIF is high-level.
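The separation can be sketched with a single mechanism that is parameterized by a policy function. This is a deliberately small illustration: the window-adjustment mechanism, the two policies and their names are assumptions chosen for the example and are not taken from a RINA specification.

```python
from typing import Callable, List

# A policy maps (current window, loss observed?) to the next window size.
WindowPolicy = Callable[[int, bool], int]


def additive_increase_halve_on_loss(window: int, loss: bool) -> int:
    """Policy A: grow by one per round, halve on loss, never below one."""
    return max(1, window // 2) if loss else window + 1


def fixed_window(size: int) -> WindowPolicy:
    """Policy B: ignore feedback and always use the configured size."""
    def policy(window: int, loss: bool) -> int:
        return size
    return policy


def run_window_mechanism(policy: WindowPolicy, loss_events: List[bool],
                         initial_window: int = 1) -> List[int]:
    """The mechanism: keep a window and consult the policy after each round."""
    window = initial_window
    history = []
    for loss in loss_events:
        window = policy(window, loss)
        history.append(window)
    return history


events = [False, False, False, True, False]
print(run_window_mechanism(additive_increase_halve_on_loss, events))  # [2, 3, 4, 2, 3]
print(run_window_mechanism(fixed_window(8), events))                  # [8, 8, 8, 8, 8]
```

The loop in `run_window_mechanism` never changes; a layer tuned for a lossy radio link and one tuned for a data-centre fabric would differ only in the policy passed in.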

RINA uses the theory of the Delta-t protocol[10] developed by Richard Watson in 1981. Watson's research suggests that bounding three timers is a sufficient condition for reliable transfer. Delta-t is an example of how this should work: it does not have a connection setup or tear-down. The same research also notes that TCP already uses these timers in its operation, making Delta-t comparatively simpler. Watson's research also suggests that synchronization and port allocation should be distinct functions, port allocation being part of layer management, and synchronization being part of data transfer.
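The idea of timer-only connection management can be sketched as state that exists only while recent traffic keeps it alive. The text above names only the Maximum Packet Lifetime; the other two bounds used here (a maximum retransmission time `r` and a maximum acknowledgement delay `a`), their sum as the basic interval, and the factor of two for the hold time are assumptions taken from common summaries of Watson's work and should be checked against the cited papers. The numeric values are arbitrary.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Timers:
    mpl: float  # maximum packet lifetime, in seconds
    r: float    # assumed: maximum time spent retransmitting a packet
    a: float    # assumed: maximum delay before an acknowledgement is sent

    @property
    def delta_t(self) -> float:
        # Assumed combination of the three bounds.
        return self.mpl + self.r + self.a


class PeerState:
    """Connection state created by traffic and discarded by a timer."""

    def __init__(self, hold_time: float) -> None:
        self.hold_time = hold_time
        self.last_activity: Optional[float] = None

    def on_packet(self, now: float) -> None:
        # No handshake: the first packet creates the state, later ones refresh it.
        self.last_activity = now

    def active(self, now: float) -> bool:
        # State counts as present only within hold_time of the last packet.
        return (self.last_activity is not None
                and now - self.last_activity <= self.hold_time)


timers = Timers(mpl=30.0, r=10.0, a=1.0)
receiver = PeerState(hold_time=2 * timers.delta_t)  # multiplier is an assumption
receiver.on_packet(now=0.0)
print(timers.delta_t, receiver.active(now=50.0), receiver.active(now=100.0))
# 41.0 True False
```

There is no open or close message anywhere in the sketch; whether a connection "exists" is decided purely by comparing the clock with the last time a packet was seen.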

Security

To accommodate security, RINA requires each DIF/DAF to specify a security policy, whose functions are shown in Figure 7. This allows securing not just applications, but backbones and switching fabrics themselves. A public network is simply a special case where the security policy does nothing. This may introduce overhead for smaller networks, but it scales better with larger networks because layers do not need to coordinate their security mechanisms: the current Internet is estimated as requiring around 5 times more distinct security entities than RINA.[22] Among other things, the security policy can also specify an authentication mechanism; this obsoletes firewalls and blacklists because a DAP or IPCP that can't join a DAF or DIF can't transmit or receive. DIFs also do not expose their IPCP addresses to higher layers, preventing a wide class of man-in-the-middle attacks.
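The role of a per-layer security policy can be illustrated with a toy enrollment check. The sketch below is an assumption-laden illustration: the `SecuredDif` class, the shared-secret policy and the member names are invented, and a real policy would cover far more than a single credential comparison.

```python
import hmac
from typing import Callable, Set

# A policy decides whether a named process may join, given its credential.
AuthPolicy = Callable[[str, bytes], bool]


def allow_all(name: str, credential: bytes) -> bool:
    """Null policy: the 'public network' special case."""
    return True


def shared_secret(secret: bytes) -> AuthPolicy:
    """Admit only processes that present the expected secret."""
    def policy(name: str, credential: bytes) -> bool:
        return hmac.compare_digest(credential, secret)
    return policy


class SecuredDif:
    def __init__(self, auth: AuthPolicy) -> None:
        self.auth = auth
        self.members: Set[str] = set()

    def enroll(self, name: str, credential: bytes) -> bool:
        if self.auth(name, credential):
            self.members.add(name)
            return True
        return False

    def can_communicate(self, source: str, destination: str) -> bool:
        # Only enrolled members can send or receive inside this layer.
        return source in self.members and destination in self.members


private = SecuredDif(shared_secret(b"s3cret"))
private.enroll("ipcp-a", b"s3cret")
private.enroll("ipcp-b", b"s3cret")
private.enroll("intruder", b"guess")
print(private.can_communicate("ipcp-a", "ipcp-b"))    # True
print(private.can_communicate("intruder", "ipcp-a"))  # False
```

Swapping `shared_secret(...)` for `allow_all` turns the same layer into an open one without touching the rest of the code, which is the sense in which a public network is a special case.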

The design of the Delta-t protocol itself, with its emphasis on simplicity, is also a factor. For example, since the protocol has no handshake, it has no corresponding control messages that can be forged or state that can be misused, as happens in a SYN flood. The synchronization mechanism also makes aberrant behavior more correlated with intrusion attempts, making attacks far easier to detect.[23]

Research projects

From the publication of the PNA book in 2008 through 2014, several groups carried out RINA research and development. An informal group known as the Pouzin Society, named after Louis Pouzin,[24] coordinates several international efforts.

BU Research Team

The RINA research team at Boston University is led by Professors Abraham Matta, John Day and Lou Chitkushev, and has been awarded a number of grants from the National Science Foundation and the EC to continue investigating the fundamentals of RINA, develop an open source prototype implementation over UDP/IP for Java,[25][26] and experiment with it on top of the GENI infrastructure.[27][28] BU is also a member of the Pouzin Society and an active contributor to the FP7 IRATI and PRISTINE projects. In addition, BU has incorporated RINA concepts and theory into its computer networking courses.

FP7 IRATI

IRATI is an FP7-funded project with 5 partners: i2CAT, Nextworks, iMinds, Interoute and Boston University. It has produced an open source RINA implementation for the Linux OS on top of Ethernet.[29][30]

FP7 PRISTINE

PRISTINE is an FP7-funded project with 15 partners: WIT-TSSG, i2CAT, Nextworks, Telefónica I+D, Thales, Nexedi, B-ISDN, Atos, University of Oslo, Juniper Networks, Brno University, IMT-TSP, CREATE-NET, iMinds and UPC. Its main goal is to explore the programmability aspects of RINA to implement innovative policies for congestion control, resource allocation, routing, security and network management.

GÉANT3+ Open Call winner IRINA

IRINA was funded by the GÉANT3+ open call, and is a project with four partners: iMinds, WIT-TSSG, i2CAT and Nextworks. The main goal of IRINA is to study the use of the Recursive InterNetwork Architecture (RINA) as the foundation of the next generation NREN and GÉANT network architectures. IRINA builds on the IRATI prototype and will compare RINA against current networking state of the art and relevant clean-slate architecture under research; perform a use-case study of how RINA could be better used in the NREN scenarios; and showcase a laboratory trial of the study.

References

- Patterns in Network Architecture: A Return to Fundamentals, John Day (2008), Prentice Hall, ISBN 978-0132252423

- L. Pouzin. Methods, tools and observations on flow control in packet-switched data networks. IEEE Transactions on Communications, 29(4): 413–426, 1981

- J. Day. Why loc/id split isn’t the answer, 2008. Available online at http://rina.tssg.org/docs/LocIDSplit090309.pdf

- D. Meyer and D. Lewis. Architectural Implications of Locator/ID separation. https://tools.ietf.org/html/draft-meyer-loc-id-implications-01

- R. Hinden and S. Deering. "IP Version 6 Addressing Architecture". RFC 4291 (Draft Standard), February 2006. Updated by RFCs 5952, 6052

- D. Clark, L. Chapin, V. Cerf, R. Braden and R. Hobby. Towards the Future Internet Architecture. RFC 1287 (Informational), December 1991

- Fritz E. Froehlich; Allen Kent (1992). "ARPANET, the Defense Data Network, and Internet". The Froehlich/Kent Encyclopedia of Telecommunications. 5. CRC Press. p. 82. ISBN 9780824729035.

- C.A. Kent and J.C. Mogul. Fragmentation considered harmful. Proceedings of Frontiers in Computer Communications Technologies, ACM SIGCOMM, 1987

- R. Watson. Timer-based mechanism in reliable transport protocol connection management. Computer Networks, 5:47–56, 1981

- R. Watson. Delta-t protocol specification. Technical Report UCID-19293, Lawrence Livermore National Laboratory, December 1981

- McKenzie, Alexander (2011). "INWG and the Conception of the Internet: An Eyewitness Account". IEEE Annals of the History of Computing. 33 (1): 66–71. doi:10.1109/MAHC.2011.9. ISSN 1934-1547. S2CID 206443072.

- J. Day. How in the Heck Do You Lose a Layer!? 2nd IFIP International Conference of the Network of the Future, Paris, France, 2011

- D. Davies. The control of congestion in packet-switching networks. IEEE Transactions on Communications, 20(3): 546–550, 1972

- S. S. Lam and Y.C. Luke Lien. Congestion control of packet communication networks by input buffer limits - a simulation study. IEEE Transactions on Computers, 30(10), 1981.

- Internet Architecture Board. IAB Recommendations for the Development of Internet Network Management Standards. RFC 1052, April 1988

- Internet Architecture Board. IP Version 7 ** DRAFT 8 **. Draft IAB IPversion7, July 1992

- John Day, Ibrahim Matta and Karim Mattar. Networking is IPC: A guiding principle to a better Internet. In Proceedings of the 2008 ACM CoNEXT Conference. ACM, 2008

- I. Matta, J. Day, V. Ishakian, K. Mattar and G. Gursun. Declarative transport: No more transport protocols to design, only policies to specify. Technical Report BUCS-TR-2008-014, CS Dept, Boston U., July 2008

- J. Saltzer. On the Naming and Binding of Network Destinations. RFC 1498 (Informational), August 1993

- V. Ishakian, J. Akinwumi, F. Esposito, I. Matta, "On supporting mobility and multihoming in recursive internet architectures". Computer Communications, Volume 35, Issue 13, July 2012, pages 1561–1573

- P. Brinch Hansen. The nucleus of a multiprogramming system. Communications of the ACM, 13(4): 238-241, 1970

- J. Small (2012), Patterns in Network Security: An analysis of architectural complexity in securing Recursive Inter-Network Architecture Networks

- G. Boddapati; J. Day; I. Matta; L. Chitkushev (June 2009), Assessing the security of a clean-slate Internet architecture (PDF)

- A. L. Russell, V. Schaffer. “In the shadow of ARPAnet and Internet: Louis Pouzin and the Cyclades network in the 1970s”. Available online at http://muse.jhu.edu/journals/technology_and_culture/v055/55.4.russell.html

- Flavio Esposito, Yuefeng Wang, Ibrahim Matta and John Day. Dynamic Layer Instantiation as a Service. Demo at USENIX Symposium on Networked Systems Design and Implementation (NSDI ’13), Lombard, IL, 2–5 April 2013.

- Yuefeng Wang, Ibrahim Matta, Flavio Esposito and John Day. Introducing ProtoRINA: A Prototype for Programming Recursive-Networking Policies. ACM SIGCOMM Computer Communication Review. Volume 44 Issue 3, July 2014.

- Yuefeng Wang, Flavio Esposito, and Ibrahim Matta. "Demonstrating RINA using the GENI Testbed". The Second GENI Research and Educational Experiment Workshop (GREE 2013), Salt Lake City, UT, March 2013.

- Yuefeng Wang, Ibrahim Matta and Nabeel Akhtar. "Experimenting with Routing Policies Using ProtoRINA over GENI". The Third GENI Research and Educational Experiment Workshop (GREE2014), March 19–20, 2014, Atlanta, Georgia

- S. Vrijders, D. Staessens, D. Colle, F. Salvestrini, E. Grasa, M. Tarzan and L. Bergesio. “Prototyping the Recursive Internetwork Architecture: The IRATI Project Approach”, IEEE Network, Vol. 28, no. 2, March 2014

- S. Vrijders, D. Staessens, D. Colle, F. Salvestrini, V. Maffione, L. Bergesio, M. Tarzan, B. Gaston, E. Grasa. “Experimental evaluation of a Recursive InterNetwork Architecture prototype”, IEEE Globecom 2014, Austin, Texas

External links

- The Pouzin Society website: http://www.pouzinsociety.org

- RINA Education page at the IRATI website, available online at http://irati.eu/education/

- RINA document repository run by the TSSG, available online at http://rina.tssg.org

- RINA tutorial at the IEEE Globecom 2014 conference, available online at http://www.slideshare.net/irati-project/rina-tutorial-ieee-globecom-2014