Row and column vectors

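In linear algebra, a column vector or column matrix is an m × 1 matrix, that is, a matrix consisting of a single column of m elements,

$$\mathbf{x} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_m \end{bmatrix}.$$

Similarly, a row vector or row matrix is a 1 × m matrix, that is, a matrix consisting of a single row of m elements,[1]

$$\begin{bmatrix} x_1 & x_2 & \dots & x_m \end{bmatrix}.$$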

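Throughout, boldface is used for row and column vectors. The transpose (indicated by a superscript T) of a row vector is a column vector,

$$\begin{bmatrix} x_1 & x_2 & \dots & x_m \end{bmatrix}^{\mathsf T} = \begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_m \end{bmatrix},$$

and the transpose of a column vector is a row vector,

$$\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_m \end{bmatrix}^{\mathsf T} = \begin{bmatrix} x_1 & x_2 & \dots & x_m \end{bmatrix}.$$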

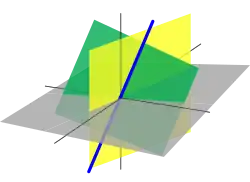
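The transpose relationship can be checked with a small sketch. It assumes, purely for illustration, that a matrix is stored as a Python list of rows, so a row vector is a list holding one row and a column vector is a list of one-element rows; the helper name `transpose` is hypothetical.

```python
def transpose(matrix):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*matrix)]

row_vector = [[1, 2, 3]]          # 1 x 3 matrix: a single row
column_vector = [[1], [2], [3]]   # 3 x 1 matrix: a single column

# Transposing a row vector gives a column vector, and vice versa.
assert transpose(row_vector) == column_vector
assert transpose(column_vector) == row_vector
```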

The set of all row vectors with m entries forms a vector space called the row space; similarly, the set of all column vectors with m entries forms a vector space called the column space. The dimension of each of these spaces equals the number of entries in its vectors, m.

The row space can be viewed as the dual space of the column space, since any linear functional on the space of column vectors can be represented uniquely as left multiplication by a specific row vector.
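A minimal sketch of this representation follows. The functional `functional` and the helper names are hypothetical, and vectors are stored as flat Python lists. The entries of the representing row vector are obtained by applying the functional to each standard basis column vector, which is also why the row vector is unique.

```python
def functional(x):
    """A hypothetical linear functional on 3-entry column vectors."""
    return 2 * x[0] - x[1] + 3 * x[2]

def representing_row(f, n):
    """Row vector whose i-th entry is f applied to the i-th standard basis vector."""
    basis = [[1 if i == j else 0 for j in range(n)] for i in range(n)]
    return [f(e) for e in basis]

def apply_row(row, x):
    """Multiply a row vector by a column vector (both stored as flat lists)."""
    return sum(r * xi for r, xi in zip(row, x))

row = representing_row(functional, 3)   # [2, -1, 3]
x = [4, 5, 6]

# Applying the functional agrees with multiplying by the row vector.
assert apply_row(row, x) == functional(x)
```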

Notation

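To simplify writing column vectors in-line with other text, they are sometimes written as row vectors with the transpose operation applied to them,

$$\mathbf{x} = \begin{bmatrix} x_1 \; x_2 \; \dots \; x_m \end{bmatrix}^{\mathsf T}$$

or

$$\mathbf{x} = \begin{bmatrix} x_1, x_2, \dots, x_m \end{bmatrix}^{\mathsf T}.$$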

Some authors also use the convention of writing both column vectors and row vectors as rows, but separating row vector elements with commas and column vector elements with semicolons (see alternative notation 2 in the table below).

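| | Row vector | Column vector |
|---|---|---|
| Standard matrix notation (array spaces, no commas, transpose signs) | $\begin{bmatrix} x_1 \; x_2 \; \dots \; x_m \end{bmatrix}$ | $\begin{bmatrix} x_1 \\ x_2 \\ \vdots \\ x_m \end{bmatrix}$ or $\begin{bmatrix} x_1 \; x_2 \; \dots \; x_m \end{bmatrix}^{\mathsf T}$ |
| Alternative notation 1 (commas, transpose signs) | $\begin{bmatrix} x_1, x_2, \dots, x_m \end{bmatrix}$ | $\begin{bmatrix} x_1, x_2, \dots, x_m \end{bmatrix}^{\mathsf T}$ |
| Alternative notation 2 (commas and semicolons, no transpose signs) | $\begin{bmatrix} x_1, x_2, \dots, x_m \end{bmatrix}$ | $\begin{bmatrix} x_1; x_2; \dots; x_m \end{bmatrix}$ |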

Operations

Matrix multiplication involves the action of multiplying each row vector of one matrix by each column vector of another matrix.
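As an illustration, the row-times-column description of matrix multiplication can be sketched in plain Python. Matrices are assumed to be stored as lists of rows, and the function name `matmul` is hypothetical. Each entry of the product is the sum of products of one row of the first matrix with one column of the second.

```python
def matmul(A, B):
    """Multiply matrices stored as lists of rows, one row-by-column product per entry."""
    if len(A[0]) != len(B):
        raise ValueError("columns of A must match rows of B")
    columns_of_B = list(zip(*B))  # each tuple is one column of B
    return [
        [sum(a * b for a, b in zip(row, column)) for column in columns_of_B]
        for row in A
    ]

A = [[1, 2],
     [3, 4]]
B = [[5, 6],
     [7, 8]]

product = matmul(A, B)  # [[19, 22], [43, 50]]
```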

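The dot product of two vectors a and b, each with n entries, is equivalent to the matrix product of the row vector representation of a and the column vector representation of b,

$$\mathbf{a} \cdot \mathbf{b} = \mathbf{a}^{\mathsf T} \mathbf{b} = \begin{bmatrix} a_1 & \cdots & a_n \end{bmatrix} \begin{bmatrix} b_1 \\ \vdots \\ b_n \end{bmatrix} = a_1 b_1 + \cdots + a_n b_n,$$

which is also equivalent to the matrix product of the row vector representation of b and the column vector representation of a,

$$\mathbf{b} \cdot \mathbf{a} = \mathbf{b}^{\mathsf T} \mathbf{a} = \begin{bmatrix} b_1 & \cdots & b_n \end{bmatrix} \begin{bmatrix} a_1 \\ \vdots \\ a_n \end{bmatrix} = b_1 a_1 + \cdots + b_n a_n.$$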
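The equivalence of the two orderings can be checked with a short sketch, assuming column vectors are stored as lists of one-element rows; the helper names `matmul` and `transpose` are hypothetical. Both products come out as the same 1 × 1 matrix.

```python
def transpose(M):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*M)]

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, column)) for column in zip(*B)]
            for row in A]

a = [[1], [2], [3]]   # column vector representation of a
b = [[4], [5], [6]]   # column vector representation of b

dot_ab = matmul(transpose(a), b)   # row of a times column of b
dot_ba = matmul(transpose(b), a)   # row of b times column of a

# Both are the 1 x 1 matrix holding 1*4 + 2*5 + 3*6 = 32.
assert dot_ab == dot_ba == [[32]]
```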

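The matrix product of a column vector and a row vector gives the outer product of two vectors a and b, an example of the more general tensor product. The matrix product of the column vector representation of a and the row vector representation of b gives the components of their dyadic product, shown here for vectors with three entries,

$$\mathbf{a} \otimes \mathbf{b} = \mathbf{a} \mathbf{b}^{\mathsf T} = \begin{bmatrix} a_1 \\ a_2 \\ a_3 \end{bmatrix} \begin{bmatrix} b_1 & b_2 & b_3 \end{bmatrix} = \begin{bmatrix} a_1 b_1 & a_1 b_2 & a_1 b_3 \\ a_2 b_1 & a_2 b_2 & a_2 b_3 \\ a_3 b_1 & a_3 b_2 & a_3 b_3 \end{bmatrix},$$

which is the transpose of the matrix product of the column vector representation of b and the row vector representation of a,

$$\mathbf{b} \otimes \mathbf{a} = \mathbf{b} \mathbf{a}^{\mathsf T} = \begin{bmatrix} b_1 \\ b_2 \\ b_3 \end{bmatrix} \begin{bmatrix} a_1 & a_2 & a_3 \end{bmatrix} = \begin{bmatrix} b_1 a_1 & b_1 a_2 & b_1 a_3 \\ b_2 a_1 & b_2 a_2 & b_2 a_3 \\ b_3 a_1 & b_3 a_2 & b_3 a_3 \end{bmatrix}.$$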
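A small sketch of the outer product and its transpose relationship follows. For brevity it assumes vectors are stored as flat lists of entries; the function names `outer` and `transpose` are hypothetical.

```python
def outer(a, b):
    """Column vector a times row vector b: entry (i, j) is a[i] * b[j]."""
    return [[ai * bj for bj in b] for ai in a]

def transpose(M):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*M)]

a = [1, 2, 3]
b = [4, 5, 6]

ab = outer(a, b)   # [[4, 5, 6], [8, 10, 12], [12, 15, 18]]
ba = outer(b, a)   # [[4, 8, 12], [5, 10, 15], [6, 12, 18]]

# Swapping the roles of a and b transposes the result.
assert ab == transpose(ba)
```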

Preferred input vectors for matrix transformations

Frequently a row vector presents itself for an operation within n-space expressed by an n × n matrix M,

$$\mathbf{v} M = \mathbf{p}.$$

Then p is also a row vector and may be presented to another n × n matrix Q,

$$\mathbf{p} Q = \mathbf{t}.$$

Conveniently, one can write $\mathbf{t} = \mathbf{p} Q = \mathbf{v} M Q$, which shows that the matrix product MQ takes v directly to t. Continuing with row vectors, matrix transformations that further reconfigure n-space can be applied to the right of previous outputs.
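The left-to-right composition can be illustrated with a short sketch. The matrices `M` and `Q` and the vector `v` are arbitrary example values, not taken from any source, and `matmul` is a hypothetical helper for matrices stored as lists of rows.

```python
def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, column)) for column in zip(*B)]
            for row in A]

v = [[1, 2]]            # row vector (1 x 2)
M = [[0, 1],
     [-1, 0]]           # first transformation
Q = [[2, 0],
     [0, 3]]            # second transformation

p = matmul(v, M)                    # [[-2, 1]]
t = matmul(p, Q)                    # [[-4, 3]]
t_direct = matmul(v, matmul(M, Q))  # apply the combined matrix MQ in one step

# Applying M then Q agrees with applying the single product MQ.
assert t == t_direct
```

Each further transformation is appended on the right, so the written order of the matrices matches the order in which they act.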

In contrast, when a column vector is transformed into another column vector under an n × n matrix action, the operation occurs to the left,

$$\mathbf{p}^{\mathsf T} = M \mathbf{v}^{\mathsf T}, \quad \mathbf{t}^{\mathsf T} = Q \mathbf{p}^{\mathsf T},$$

leading to the algebraic expression $QM\mathbf{v}^{\mathsf T}$ for the composed output from the input $\mathbf{v}^{\mathsf T}$. In this use of a column vector as input, successive matrix transformations accumulate to the left.
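The column convention can be sketched in the same way. The values of `M`, `Q`, and `v` are arbitrary examples and the helper names are hypothetical. The last check also illustrates how the two conventions correspond: a row vector result v MQ, once transposed, equals the column form computed with the transposed matrices in reverse order.

```python
def transpose(A):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(column) for column in zip(*A)]

def matmul(A, B):
    """Multiply matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, column)) for column in zip(*B)]
            for row in A]

v = [[1, 2]]            # row vector; transpose(v) is the column input
M = [[0, 1],
     [-1, 0]]
Q = [[2, 0],
     [0, 3]]

# Column convention: matrices accumulate on the left.
p_col = matmul(M, transpose(v))                     # [[2], [-1]]
t_col = matmul(Q, p_col)                            # [[4], [-3]]
assert t_col == matmul(matmul(Q, M), transpose(v))

# Relation to the row convention: (v M Q)^T = Q^T M^T v^T.
row_result = matmul(matmul(v, M), Q)                # [[-4, 3]]
col_result = matmul(transpose(Q), matmul(transpose(M), transpose(v)))
assert transpose(row_result) == col_result
```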

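The column vector approach to matrix transformation leads to a right-to-left orientation for successive transformations. In geometric transformations described by matrices, the two approaches are related by the transpose operator: transposing the row vector form gives $(\mathbf{v} M Q)^{\mathsf T} = Q^{\mathsf T} M^{\mathsf T} \mathbf{v}^{\mathsf T}$, so the same transformation is expressed in the column convention by the transposed matrices in reverse order. Though the two conventions are equivalent, the left-to-right directionality of English text has led some authors writing in English to prefer row vector input to matrix transformations.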

For instance, this row vector input convention has been used to good effect by Riaz Usmani,[2] where on page 106 the convention allows the statement "The product mapping ST of U into W [is given] by:

$$\alpha (ST) = (\alpha S) T = \beta T = \gamma.$$"

(The Greek letters represent row vectors.)

Ludwik Silberstein used row vectors for spacetime events; he applied Lorentz transformation matrices on the right in his Theory of Relativity in 1914 (see page 143). In Differential Geometry, published by McGraw-Hill in 1963, Heinrich Guggenheimer of the University of Minnesota used the row vector convention in chapter 5, "Introduction to transformation groups" (eqs. 7a, 9b and 12 to 15). When H. S. M. Coxeter reviewed[3] Linear Geometry by Rafael Artzy, he wrote, "[Artzy] is to be congratulated on his choice of the 'left-to-right' convention, which enables him to regard a point as a row matrix instead of the clumsy column that many authors prefer." J. W. P. Hirschfeld used right multiplication of row vectors by matrices in his description of projectivities on the Galois geometry PG(1,q).[4]

In the study of stochastic processes with a stochastic matrix, it is conventional to use a row vector as the stochastic vector.[5]
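A minimal sketch of this convention follows. The two-state transition matrix `P` and the starting distribution `pi0` are made-up example values, and the function name `step` is hypothetical. The distribution is a row vector, and advancing the chain by one step multiplies it on the right by the stochastic matrix. Exact fractions are used to avoid rounding.

```python
from fractions import Fraction

def step(distribution, transition):
    """Right-multiply a row vector by a square matrix (one step of the chain)."""
    n = len(transition)
    return [sum(distribution[i] * transition[i][j] for i in range(n))
            for j in range(n)]

# Each row of P sums to 1: row i holds the probabilities of moving from state i.
P = [[Fraction(1, 2), Fraction(1, 2)],
     [Fraction(1, 4), Fraction(3, 4)]]

pi0 = [Fraction(1), Fraction(0)]   # start in state 0 with certainty
pi1 = step(pi0, P)                 # [1/2, 1/2]
pi2 = step(pi1, P)                 # [3/8, 5/8]

# The result of each step is again a probability distribution.
assert sum(pi1) == 1 and sum(pi2) == 1
```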

Notes

1. Meyer (2000), p. 8

2. Riaz A. Usmani (1987) Applied Linear Algebra, Marcel Dekker, ISBN 0824776224. See Chapter 4: "Linear Transformations"

3. H. S. M. Coxeter, review of Linear Geometry, from Mathematical Reviews

4. J. W. P. Hirschfeld (1979) Projective Geometry over Finite Fields, page 119, Clarendon Press, ISBN 0-19-853526-0

5. John G. Kemeny & J. Laurie Snell (1960) Finite Markov Chains, page 33, D. Van Nostrand Company

References

- Axler, Sheldon Jay (1997), Linear Algebra Done Right (2nd ed.), Springer-Verlag, ISBN 0-387-98259-0

- Lay, David C. (August 22, 2005), Linear Algebra and Its Applications (3rd ed.), Addison Wesley, ISBN 978-0-321-28713-7

- Meyer, Carl D. (February 15, 2001), Matrix Analysis and Applied Linear Algebra, Society for Industrial and Applied Mathematics (SIAM), ISBN 978-0-89871-454-8, archived from the original on March 1, 2001

- Poole, David (2006), Linear Algebra: A Modern Introduction (2nd ed.), Brooks/Cole, ISBN 0-534-99845-3

- Anton, Howard (2005), Elementary Linear Algebra (Applications Version) (9th ed.), Wiley International

- Leon, Steven J. (2006), Linear Algebra With Applications (7th ed.), Pearson Prentice Hall