Autonomic computing

Autonomic computing (AC) refers to the self-managing characteristics of distributed computing resources, which adapt to unpredictable changes while hiding intrinsic complexity from operators and users. Initiated by IBM in 2001, the initiative ultimately aimed to develop computer systems capable of self-management, to overcome the rapidly growing complexity of computing systems management, and to reduce the barrier that complexity poses to further growth.[1]

A related concept in the area of autonomic computing is the concept of self-aware computing.

Description

The AC system concept is designed to make adaptive decisions, using high-level policies. It will constantly check and optimize its status and automatically adapt itself to changing conditions. An autonomic computing framework is composed of autonomic components (AC) interacting with each other. An AC can be modeled in terms of two main control schemes (local and global) with sensors (for self-monitoring), effectors (for self-adjustment), knowledge and planner/adapter for exploiting policies based on self- and environment awareness. This architecture is sometimes referred to as Monitor-Analyze-Plan-Execute (MAPE).
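As an illustration of the scheme described above, the following is a minimal sketch of one pass through a Monitor-Analyze-Plan-Execute cycle. The names `Knowledge`, `ManagedResource`, `mape_step` and the load policy are hypothetical and chosen only for this example; they do not come from any IBM specification.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Knowledge:
    """Shared knowledge: a high-level policy plus recorded observations."""
    max_load: float = 0.75                      # policy (assumed): load per worker limit
    history: list = field(default_factory=list)


class ManagedResource:
    """Hypothetical managed element exposing one sensor and one effector."""

    def __init__(self, workers: int, demand: float):
        self.workers = workers
        self.demand = demand                    # total work, in "worker units"

    def sense_load(self) -> float:              # sensor (self-monitoring)
        return self.demand / self.workers

    def set_workers(self, n: int) -> None:      # effector (self-adjustment)
        self.workers = max(1, n)


def mape_step(resource: ManagedResource, k: Knowledge) -> int:
    # Monitor: read the sensor and record the observation.
    load = resource.sense_load()
    k.history.append(load)
    # Analyze: compare the observation with the policy.
    violated = load > k.max_load
    # Plan: pick the smallest worker count that satisfies the policy.
    target = resource.workers
    if violated:
        target = math.ceil(resource.demand / k.max_load)
    # Execute: apply the plan through the effector.
    resource.set_workers(target)
    return target
```

With `ManagedResource(workers=2, demand=3.0)` the first call raises the worker count so that the load per worker no longer exceeds the policy limit, and a second call leaves the configuration unchanged.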

Driven by such vision, a variety of architectural frameworks based on "self-regulating" autonomic components has been recently proposed. A very similar trend has recently characterized significant research in the area of multi-agent systems. However, most of these approaches are typically conceived with centralized or cluster-based server architectures in mind and mostly address the need of reducing management costs rather than the need of enabling complex software systems or providing innovative services. Some autonomic systems involve mobile agents interacting via loosely coupled communication mechanisms.[2]

Autonomy-oriented computation is a paradigm proposed by Jiming Liu in 2001 that uses artificial systems imitating social animals' collective behaviours to solve difficult computational problems. For example, ant colony optimization could be studied in this paradigm.[3]

Problem of growing complexity

Forecasts suggest that the number of computing devices in use will grow at 38% per year[4] and that the average complexity of each device is increasing.[4] Currently, this volume and complexity are managed by highly skilled humans, but the demand for skilled IT personnel is already outstripping supply, with labour costs exceeding equipment costs by a ratio of up to 18:1.[5] Computing systems have brought great benefits of speed and automation, but there is now an overwhelming economic need to automate their maintenance.

In a 2003 IEEE Computer article, Kephart and Chess[1] warn that the dream of interconnectivity of computing systems and devices could become the "nightmare of pervasive computing" in which architects are unable to anticipate, design and maintain the complexity of interactions. They state the essence of autonomic computing is system self-management, freeing administrators from low-level task management while delivering better system behavior.

A general problem of modern distributed computing systems is that their complexity, and in particular the complexity of their management, is becoming a significant limiting factor in their further development. Large companies and institutions are employing large-scale computer networks for communication and computation. The distributed applications running on these computer networks are diverse and deal with many tasks, ranging from internal control processes to presenting web content to customer support.

Additionally, mobile computing is pervading these networks at an increasing speed: employees need to communicate with their companies while they are not in their office. They do so by using laptops, personal digital assistants, or mobile phones with diverse forms of wireless technologies to access their companies' data.

This creates an enormous complexity in the overall computer network which is hard to control manually by human operators. Manual control is time-consuming, expensive, and error-prone. The manual effort needed to control a growing networked computer-system tends to increase very quickly.

80% of such problems in infrastructure happen at the client specific application and database layer. Most 'autonomic' service providers guarantee only up to the basic plumbing layer (power, hardware, operating system, network and basic database parameters).

Characteristics of autonomic systems

A possible solution could be to enable modern, networked computing systems to manage themselves without direct human intervention. The Autonomic Computing Initiative (ACI) aims at providing the foundation for autonomic systems. It is inspired by the autonomic nervous system of the human body.[6] This nervous system controls important bodily functions (e.g. respiration, heart rate, and blood pressure) without any conscious intervention.

In a self-managing autonomic system, the human operator takes on a new role: instead of controlling the system directly, the operator defines general policies and rules that guide the self-management process. For this process, IBM defined the following four types of property, referred to as self-star (also called self-*, self-x, or auto-*) properties.[7]

- Self-configuration: Automatic configuration of components;

- Self-healing: Automatic discovery and correction of faults;[8]

- Self-optimization: Automatic monitoring and control of resources to ensure the optimal functioning with respect to the defined requirements;

- Self-protection: Proactive identification and protection from arbitrary attacks.

Others such as Poslad[7] and Nami and Bertels[9] have expanded on the set of self-star as follows:

- Self-regulation: A system that operates to maintain some parameter, e.g., quality of service, within a preset range without external control;

- Self-learning: Systems use machine learning techniques such as unsupervised learning which does not require external control;

- Self-awareness (also called Self-inspection and Self-decision): System must know itself. It must know the extent of its own resources and the resources it links to. A system must be aware of its internal components and external links in order to control and manage them;

- Self-organization: System structure driven by physics-type models without explicit pressure or involvement from outside the system;

- Self-creation (also called Self-assembly, Self-replication): System driven by ecological and social type models without explicit pressure or involvement from outside the system. A system’s members are self-motivated and self-driven, generating complexity and order in a creative response to a continuously changing strategic demand;

- Self-management (also called self-governance): A system that manages itself without external intervention. What is being managed can vary depending on the system and application. Self-management also refers to a set of self-star processes such as autonomic computing rather than a single self-star process;

- Self-description (also called self-explanation or Self-representation): A system explains itself. It is capable of being understood (by humans) without further explanation.

IBM has set forth eight conditions that define an autonomic system:[10]

The system must

- know itself in terms of what resources it has access to, what its capabilities and limitations are and how and why it is connected to other systems;

- be able to automatically configure and reconfigure itself depending on the changing computing environment;

- be able to optimize its performance to ensure the most efficient computing process;

- be able to work around encountered problems by either repairing itself or routing functions away from the trouble;

- detect, identify and protect itself against various types of attacks to maintain overall system security and integrity;

- adapt to its environment as it changes, interacting with neighboring systems and establishing communication protocols;

- rely on open standards, since it cannot exist in a proprietary environment;

- anticipate the demand on its resources while staying transparent to users.

Even though the purpose and thus the behaviour of autonomic systems vary from system to system, every autonomic system should be able to exhibit a minimum set of properties to achieve its purpose:

- Automatic: This essentially means being able to self-control its internal functions and operations. As such, an autonomic system must be self-contained and able to start-up and operate without any manual intervention or external help. Again, the knowledge required to bootstrap the system (Know-how) must be inherent to the system.

- Adaptive: An autonomic system must be able to change its operation (i.e., its configuration, state and functions). This will allow the system to cope with temporal and spatial changes in its operational context either long term (environment customisation/optimisation) or short term (exceptional conditions such as malicious attacks, faults, etc.).

- Aware: An autonomic system must be able to monitor (sense) its operational context as well as its internal state in order to be able to assess if its current operation serves its purpose. Awareness will control adaptation of its operational behaviour in response to context or state changes.

Evolutionary levels

IBM defined five evolutionary levels, or the autonomic deployment model, for the deployment of autonomic systems:

- Level 1 is the basic level that presents the current situation where systems are essentially managed manually.

- Levels 2–4 introduce increasingly automated management functions.

- Level 5 represents the ultimate goal of autonomic, self-managing systems.[11]

Design patterns

The design complexity of Autonomic Systems can be simplified by utilizing design patterns such as the model-view-controller (MVC) pattern to improve concern separation by encapsulating functional concerns.[12]

Control loops

A basic concept applied in autonomic systems is the closed control loop. This well-known concept stems from process control theory. Essentially, a closed control loop in a self-managing system monitors some resource (software or hardware component) and autonomously tries to keep its parameters within a desired range.

According to IBM, hundreds or even thousands of these control loops are expected to work in a large-scale self-managing computer system.
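A single closed control loop of this kind can be sketched in a few lines. The example below is only an illustration under simple assumptions: the monitored parameter is a latency that falls as capacity is added (`work / setting`), and the function names `control_step` and `run_loop` are hypothetical.

```python
def control_step(measured: float, low: float, high: float, setting: int) -> int:
    """Return the new actuator setting for one loop iteration."""
    if measured > high:
        return setting + 1          # above the range: add capacity
    if measured < low and setting > 1:
        return setting - 1          # below the range: release capacity
    return setting                  # inside the desired range: leave as is


def run_loop(work: float, setting: int, low: float, high: float, steps: int) -> list:
    """Run the loop against a toy resource and return (setting, measured) pairs."""
    trace = []
    for _ in range(steps):
        measured = work / setting   # assumed plant model, for illustration only
        trace.append((setting, measured))
        setting = control_step(measured, low, high, setting)
    return trace
```

Calling `run_loop(120.0, 1, 20.0, 40.0, 6)` shows the loop adding capacity step by step until the measured value enters the desired range, after which the setting stays constant.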

Conceptual model

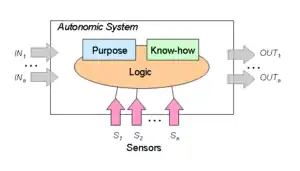

A fundamental building block of an autonomic system is the sensing capability (Sensors Si), which enables the system to observe its external operational context. Inherent to an autonomic system is the knowledge of the Purpose (intention) and the Know-how to operate itself (e.g., bootstrapping, configuration knowledge, interpretation of sensory data, etc.) without external intervention. The actual operation of the autonomic system is dictated by the Logic, which is responsible for making the right decisions to serve its Purpose and is influenced by the observation of the operational context (based on the sensor input).

This model highlights the fact that the operation of an autonomic system is purpose-driven. This includes its mission (e.g., the service it is supposed to offer), the policies (e.g., that define the basic behaviour), and the "survival instinct". If seen as a control system, this would be encoded as a feedback error function or, in a heuristically assisted system, as an algorithm combined with a set of heuristics bounding its operational space.

Self-aware computing

A related concept in the area of autonomic computing is the concept of self-aware computing. The notion of self-awareness in computing has been studied in a number of research projects and activities over the past decades, including the SElf-awarE Computing (SEEC) project at the University of Chicago,[13] the FOCAS FET Coordination Action,[14] the EPiCS and ASCENS EU Projects,[15][16] the SEAMS Dagstuhl Seminars,[17] and the Self-Aware Computing (SeAC) Workshop Series.[18]

In an effort to encourage collaborations and cross-fertilization between related research activities, a Dagstuhl seminar was organized in January 2015 bringing together 45 international experts from the respective communities.[19] As part of this effort, the seminar participants formulated a definition of the term “self-aware computing system”,[20] capturing the common aspects of such systems as used by researchers in the interdisciplinary research community. The consensus at the seminar was that self-aware computing systems have two main properties. They:

- learn models, capturing knowledge about themselves and their environment (such as their structure, design, state, possible actions, and runtime behavior) on an ongoing basis; and

- reason using the models (to predict, analyze, consider, or plan), which enables them to act based on their knowledge and reasoning (for example, to explore, explain, report, suggest, self-adapt, or impact their environment).

The learning and reasoning in self-aware computing systems is done in accordance with high-level goals, which can change. This broader notion of self-aware computing is intended to provide a common terminology highlighting the synergies between related research activities. Several books on the topic review much of the latest relevant research across a wide array of disciplines and discuss open research challenges.[21][22]

Models and learning

The term “model” in the definition of a self-aware computing system refers to any abstraction that captures relevant knowledge about the system and its environment. There are different types of models, such as descriptive models, which describe a given aspect of the system, or prescriptive models, which describe how the system should behave in a given situation. In the context of self-aware computing, often predictive models are further distinguished, which support more complex reasoning, such as predicting how the system would behave under different conditions or predicting how a given action will impact the system and its environment.

The “learning” in self-aware computing is based both on static information built into the system design (e.g., integrated skeleton models combined with machine learning algorithms) as well as information collected at run-time (e.g., model training data and parameters estimated based on monitoring data). The learning is assumed to take place on an ongoing basis during system run time; that is, models are refined, calibrated, and validated continuously during system operation. The model-based learning and reasoning processes are assumed to go beyond evaluating predefined event-condition-action rules or heuristics. An example of a complex learning and reasoning process often applied in the context of self-aware computing systems is time series forecasting.[23]
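To make the idea of continuous refinement and validation concrete, the sketch below updates a forecasting model with every new monitoring sample and scores its previous forecast at the same time. Simple exponential smoothing is used here purely as a deliberately basic stand-in; it is not the method of the cited work, and the class name `SmoothedLoadModel` and the parameter `alpha` are assumptions of this example.

```python
class SmoothedLoadModel:
    """Toy predictive model refined on every new observation."""

    def __init__(self, alpha: float = 0.5):
        self.alpha = alpha        # smoothing factor (assumed value)
        self.level = None         # current one-step-ahead forecast
        self.abs_error = 0.0      # accumulated absolute forecast error
        self.n = 0                # number of forecasts that were scored

    def observe(self, value: float) -> None:
        if self.level is None:
            self.level = value    # first sample initialises the model
            return
        # Validate: score the forecast made before this sample arrived.
        self.abs_error += abs(value - self.level)
        self.n += 1
        # Refine: move the level towards the new observation.
        self.level = self.alpha * value + (1 - self.alpha) * self.level

    def forecast(self) -> float:
        if self.level is None:
            raise ValueError("no observations yet")
        return self.level

    def mean_abs_error(self) -> float:
        return self.abs_error / self.n if self.n else 0.0
```

A system could track `mean_abs_error()` at run time and recalibrate or replace the model when the error grows, which is one simple way to read "validated continuously".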

LRA-M loop

In the area of autonomic computing, systems often implement a Monitor-Analyze-Plan-Execute architecture, commonly referred to as MAPE-K loop, where K stands for "Knowledge".[1] In contrast to this, self-aware computing systems implement a Learn-Reason-Act loop, referred to as LRA-M loop, relying on Models as a knowledge base. The LRA-M loop is a widely accepted reference architecture that explicitly emphasizes the use of models to capture self-awareness concerns and provides an architectural perspective on a system’s (possible) self-awareness capabilities.[24][25][26][27][28]

A self-aware computing system collects empirical observations from the environment based on sensors and monitoring data about itself (self-monitoring). These empirical observations are the basis for an ongoing learning process (the "Learn" process) that captures potentially relevant information about the system and the environment (in the form of descriptive or predictive models). A continuous reasoning process (the "Reason" process) uses these models to determine appropriate actions required to meet the high-level system goals. The reasoning process can trigger system actions or self-adaptation processes (the optional "Act" process); it can also trigger changes affecting the learning and reasoning processes themselves, for example, switching from one type of prediction model to another.

While the "Monitor" and "Analyze" phases of MAPE-K also imply that information is gathered and analyzed during operation, in LRA-M it is explicitly assumed that the acquired information is abstracted and used to learn models on an ongoing basis. Similarly, while the "Analyze" and "Plan" phases in MAPE-K can be compared to the reasoning processes in LRA-M, LRA-M's model-based reasoning is assumed to go beyond applying simple rules or heuristics explicitly programmed at system design time. Finally, while self-adaptation (also referred to as self-expression[29]) is a central part of MAPE-K (the Execute phase), in LRA-M self-adaptation is not strictly required, given that the corresponding "Act" process is defined as optional. Indeed, a self-aware computing system may provide recommendations on how to act, leaving the final decision on what action to take to a human operator, a common scenario in many cognitive computing applications.
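The structure of the loop, including the optional "Act" step, might be sketched as follows. This is an illustrative outline only: the moving-average model, the `goal_max_load` goal and the action names are placeholders invented for the example, and a real system would use richer models than this.

```python
from statistics import mean
from typing import Callable, Optional


class Models:
    """Knowledge base of the loop (the "M" in LRA-M)."""

    def __init__(self):
        self.observations = []        # empirical observations collected so far
        self.predicted_load = None    # output of a placeholder predictive model


def learn(models: Models, observation: float) -> None:
    # Learn: abstract the observations into a (very simple) predictive model.
    models.observations.append(observation)
    models.predicted_load = mean(models.observations[-3:])


def reason(models: Models, goal_max_load: float) -> str:
    # Reason: use the model, not the raw sample, to decide what is advisable.
    if models.predicted_load is None:
        return "no-op"
    return "scale-out" if models.predicted_load > goal_max_load else "no-op"


def lra_m_step(models: Models, observation: float, goal_max_load: float,
               act: Optional[Callable[[str], None]] = None) -> str:
    learn(models, observation)
    recommendation = reason(models, goal_max_load)
    # Act is optional: without an actuator the result is only a recommendation.
    if act is not None and recommendation != "no-op":
        act(recommendation)
    return recommendation
```

Passing `act=None` corresponds to the recommendation-only scenario, where a human operator decides whether to apply the suggested action.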

Example

Several reference scenarios for self-aware computing systems have been defined.[30] As an example, consider a distributed enterprise messaging system designed to guarantee certain performance and quality-of-service requirements. In this context, descriptive models can be used to describe the system’s service-level objectives, the software architecture, the underlying hardware infrastructure, and the system’s run-time reconfiguration options. Predictive models could be statistical regression models used to estimate the influence of user behavior on the system resource demands, queueing network models used to evaluate the system performance for a given resource allocation, or neural network models used to forecast the system’s load intensity. Prescriptive models could describe how the system should react to specific events at run time (e.g., a server crash) or be sophisticated control theory models used to guide the system’s resource management.

Reasoning in the context of the above system can include the forecasting of the system load intensity (e.g., number of messages sent per minute) for a given time period,[23] the prediction of the application performance (e.g., message delivery time) for a given resource allocation (e.g., reserved network bandwidth and server capacity), or the prediction of the impact of a reconfiguration (e.g., adding additional network bandwidth or server capacity) on the messaging performance.[31] Based on these predictions, the system can apply higher-level reasoning and, for example, determine how it should adapt to changing workloads to continuously meet its performance and quality-of-service requirements.
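As a rough illustration of such reasoning, the sketch below uses the textbook M/M/1 queueing formula (mean response time 1/(μ − λ) for arrival rate λ below service rate μ) to predict message delivery time and to search for the smallest capacity that meets a target. Treating added capacity as a single faster server, and the names `predicted_delivery_time`, `min_capacity_units` and `rate_per_unit`, are simplifying assumptions of this example rather than part of the referenced scenario.

```python
import math
from typing import Optional


def predicted_delivery_time(arrival_rate: float, service_rate: float) -> float:
    """Mean response time of an M/M/1 queue, in the time unit of the rates."""
    if arrival_rate >= service_rate:
        return math.inf               # overloaded: the queue grows without bound
    return 1.0 / (service_rate - arrival_rate)


def min_capacity_units(arrival_rate: float, rate_per_unit: float,
                       target_time: float, max_units: int = 64) -> Optional[int]:
    """Smallest number of capacity units whose predicted time meets the target."""
    for units in range(1, max_units + 1):
        service_rate = units * rate_per_unit   # assumption: capacity scales linearly
        if predicted_delivery_time(arrival_rate, service_rate) <= target_time:
            return units
    return None                       # target not reachable within max_units
```

Combined with a load forecast, a call such as `min_capacity_units(90.0, 50.0, 0.05)` would let the system decide ahead of time how much capacity to reserve for the predicted message rate.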

Levels of self-awareness

Conceptually, there are several approaches to distinguish different levels of self-awareness.[29][32][33] At the highest level of abstraction, three generic levels can be defined that appear in different forms in existing classification schemes: pre-reflective, reflective, and meta-reflective self-awareness.

Applications

Applications for self-aware computing systems can be found in many domains, including software and system development,[28] information extraction,[34] data center performance and resource management,[35][36] cyber-physical systems,[25][26][37] embedded systems and systems-on-chip,[24][38] mobile-cloud hybrid robotics,[39] Internet of Things (IoT),[40] manufacturing systems and Industry 4.0,[41][42][43] traffic control,[44][45] healthcare,[46][47][48] and aerospace applications.[49][50]

In the domain of manufacturing systems and Industry 4.0, self-aware computing can be leveraged in the context of modular plants for process engineering and manufacturing, where production modules can be replaced on-the-fly during operation for online system maintenance or for optimizing production processes.[42] Facing this scenario requires research towards semantical self-description of production modules concerning their requirements and abilities as well as automated model-based analysis and reconfiguration of production processes. Efficient maintenance strategies are needed for improving reliability, preventing unexpected system failures, and reducing maintenance costs.[43] An example of such a strategy is predictive maintenance, where sensor data is used to continuously examine the health status of the equipment and prevent failures by proactively scheduling maintenance actions based on predicted future states.
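The predictive maintenance idea can be illustrated with a very small model: fit a straight line to a degradation signal and extrapolate to the level at which the equipment is considered failed. This is a sketch under strong assumptions (linear degradation, a known failure level, hypothetical function names) and is not the sensor-based degradation model of the cited work.

```python
from typing import Optional, Sequence, Tuple


def fit_line(times: Sequence[float], values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(times)
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    return slope, mean_v - slope * mean_t


def predicted_failure_time(times: Sequence[float], values: Sequence[float],
                           failure_level: float) -> Optional[float]:
    """Time at which the fitted trend reaches failure_level, if it is rising."""
    slope, intercept = fit_line(times, values)
    if slope <= 0:
        return None                   # no upward degradation trend detected
    return (failure_level - intercept) / slope
```

Maintenance could then be scheduled some safety margin before the predicted time, and the fit repeated whenever new sensor readings arrive.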

In the domain of traffic control, self-aware computing can be used to avoid traffic jams and optimize routes and journey times.[45] Based on real-time traffic monitoring data, a recommendation for the best route for a given target destination can be computed. Further, leveraging big data analysis, an individual route can be generated for each road user, taking into account the routes others will take.[44]

In the medical context, self-aware computing systems can be leveraged to improve the classical implantology. Considering patients with cardiac arrhythmia, cardiac medical devices can be used to monitor patients permanently. For example, a pacemaker device can monitor a patient's heart rate, atrial-ventricle synchrony, and complex conditions like pacemaker-mediated tachycardia in order to maintain an adequate heart rate.[46] Self-aware computing systems can also help to improve the quality of life of epilepsy patients. For example, the electroencephalogram (EEG), which monitors brain activity, can be used for diagnosing and follow-up examinations of the epilepsy disorder. Medical implants using a high-density electrode array can provide permanent monitoring based on which machine learning techniques can be used to learn patterns that indicate possible attacks.[47][48] Current research aims at detecting and providing early warnings for upcoming attacks.

References

- Kephart, J.O.; Chess, D.M. (2003), "The vision of autonomic computing", Computer, 36: 41–52, CiteSeerX 10.1.1.70.613, doi:10.1109/MC.2003.1160055

- Padovitz, Amir; Arkady Zaslavsky; Seng W. Loke (2003). Awareness and Agility for Autonomic Distributed Systems: Platform-Independent Publish-Subscribe Event-Based Communication for Mobile Agents. Proceedings of the 14th International Workshop on Database and Expert Systems Applications (DEXA'03). pp. 669–673. doi:10.1109/DEXA.2003.1232098. ISBN 978-0-7695-1993-7.

- Jin, Xiaolong; Liu, Jiming (2004), "From Individual Based Modeling to Autonomy Oriented Computation", Agents and Computational Autonomy, Lecture Notes in Computer Science, 2969, p. 151, doi:10.1007/978-3-540-25928-2_13, ISBN 978-3-540-22477-8

- Horn. "Autonomic Computing:IBM's Perspective on the State of Information Technology" (PDF). Archived from the original (PDF) on September 16, 2011.

- ‘Trends in technology’, survey, Berkeley University of California, USA, March 2002

- http://whatis.techtarget.com/definition/autonomic-computing

- Poslad, Stefan (2009). Autonomous systems and Artificial Life, In: Ubiquitous Computing Smart Devices, Smart Environments and Smart Interaction. Wiley. pp. 317–341. ISBN 978-0-470-03560-3. Archived from the original on 2014-12-10. Retrieved 2015-03-17.

- S-Cube Network. "Self-Healing System".

- Nami, M.R.; Bertels, K. (2007). "A survey of autonomic computing systems": 26–30.

- "What is Autonomic Computing? Webopedia Definition".

- "IBM Unveils New Autonomic Computing Deployment Model". 2002-10-21.

- Curry, Edward; Grace, Paul (2008), "Flexible Self-Management Using the Model-View-Controller Pattern", IEEE Software, 25 (3): 84, doi:10.1109/MS.2008.60

- "The Self-Aware Computing Project - University of Chicago". Retrieved 24 September 2020.

- "Results from FoCAS, the coordination action for research in Collective Adaptive Systems". Retrieved 24 September 2020.

- "ASCENS EU Project". Retrieved 24 September 2020.

- "EPICS EU Project - Engineering Proprioception in Computing Systems". EU CORDIS. Retrieved 24 September 2020.

- "Software Engineering for Self-Adaptive Systems". Retrieved 24 September 2020.

- "Workshop on Self-Aware Computing (SeAC)". Retrieved 24 September 2020.

- Kounev, Samuel; Zhu, Xiaoyun; Kephart, Jeffrey O.; Kwiatkowska, Marta (2015). "Model-driven Algorithms and Architectures for Self-Aware Computing Systems (Dagstuhl Seminar 15041)". Schloss Dagstuhl-Leibniz-Zentrum Fuer Informatik, Dagstuhl Reports. 5 (1): 164–196. doi:10.4230/DagRep.5.1.164. ISSN 2192-5283. Retrieved 24 September 2020.

- Kounev, Samuel; Lewis, Peter; Bellman, Kirstie L.; Bencomo, Nelly; Camara, Javier; Diaconescu, Ada; Esterle, Lukas; Geihs, Kurt; Giese, Holger; Götz, Sebastian; Inverardi, Paola; Kephart, Jeffrey O. & Zisman, Andrea (2017). "Chapter 1: The Notion of Self-aware Computing". In Kounev, Samuel; Kephart, Jeffrey O.; Milenkoski, Aleksandar & Zhu, Xiaoyun (eds.). Self-Aware Computing Systems (1st ed.). Springer International Publishing. pp. 3–16. doi:10.1007/978-3-319-47474-8_1. ISBN 978-3-319-47472-4.

- Kounev, Samuel; Kephart, Jeffrey O.; Milenkoski, Aleksandar; Zhu, Xiaoyun (2017). Self-Aware Computing Systems (1st ed.). Springer International Publishing. doi:10.1007/978-3-319-47474-8. ISBN 978-3-319-47472-4.

- Lewis, Peter R.; Platzner, Marco; Rinner, Bernhard; Tørresen, Jim; Yao, Xin (2016). Self-Aware Computing Systems (1st ed.). Springer International Publishing. doi:10.1007/978-3-319-39675-0. ISBN 978-3-319-39674-3. S2CID 38085111.

- Bauer, André; Züfle, Marwin; Herbst, Nikolas; Zehe, Albin; Hotho, Andreas; Kounev, Samuel (2020). "Time Series Forecasting for Self-Aware Systems". Proceedings of the IEEE. IEEE. 108 (7): 1068–1093. doi:10.1109/JPROC.2020.2983857. S2CID 218478964.

- Hoffmann, H.; Jantsch, A.; Dutt, N. D. (July 2020). "Embodied Self-Aware Computing Systems". Proceedings of the IEEE. IEEE. 108 (7): 1027–1046. doi:10.1109/JPROC.2020.2977054. S2CID 215837088.

- Bellman, K.; Landauer, C.; Dutt, N.; Esterle, L.; Herkersdorf, A.; Jantsch, A.; TaheriNejad, N.; Lewis, P. R.; Platzner, M.; Tammemäe, K. (June 2020). "Self-Aware Cyber-Physical Systems". ACM Transactions on Cyber-Physical Systems. ACM. 4 (4): 1–26. doi:10.1145/3375716. S2CID 218538677.

- Götzinger, Maximilian; Juhász, Dávid; Taherinejad, Nima; Willegger, Edwin; Tutzer, Benedikt; Liljeberg, Pasi; Jantsch, Axel; Rahmani, Amir M. (2020). "RoSA: A Framework for Modeling Self-Awareness in Cyber-Physical Systems". IEEE Access. IEEE. 8: 141373–141394. doi:10.1109/ACCESS.2020.3012824. S2CID 221120586.

- Jantsch, Axel (2019). "Towards a Formal Model of Recursive Self-Reflection". OpenAccess Series in Informatics (OASIcs), Vol. 68, Edited by Selma Saidi, Rolf Ernst and Dirk Ziegenbein. Workshop on Autonomous Systems Design (ASD 2019). Dagstuhl, Germany: Schloss Dagstuhl - Leibniz-Zentrum fuer Informatik. pp. 6:1–6:15. doi:10.4230/OASIcs.ASD.2019.6.

- Tinnes, C.; Biesdorf, A.; Hohenstein, U.; Matthes, F. (2019). Ideas on Improving Software Artifact Reuse via Traceability and Self-Awareness. 2019 IEEE/ACM 10th International Symposium on Software and Systems Traceability (SST). Montreal, Canada: IEEE. pp. 13–16. doi:10.1109/SST.2019.00013.

- Lewis, Peter R.; Chandra, Arjun; Faniyi, Funmilade; Glette, Kyrre; Chen, Tao; Bahsoon, Rami; Torresen, Jim; Yao, Xin (2015). "Architectural Aspects of Self-Aware and Self-Expressive Computing Systems: From Psychology to Engineering". Computer. IEEE. 48 (8): 62–70. doi:10.1109/MC.2015.235. S2CID 8384839. Retrieved 5 October 2020.

- Kephart, Jeffrey O.; Maggio, Martina; Diaconescu, Ada; Giese, Holger; Hoffmann, Henry; Kounev, Samuel; Koziolek, Anne; Lewis, Peter; Robertsson, Anders & Spinner, Simon (2017). "Chapter 4: Reference Scenarios for Self-Aware Computing". In Kounev, Samuel; Kephart, Jeffrey O.; Milenkoski, Aleksandar & Zhu, Xiaoyun (eds.). Self-Aware Computing Systems (1st ed.). Springer International Publishing. pp. 87–106. doi:10.1007/978-3-319-47474-8_1. ISBN 978-3-319-47472-4.

- Balsamo, Simonetta (May 2004). "Model-based performance prediction in software development: a survey". IEEE Transactions on Software Engineering. 30 (5): 295–310. doi:10.1109/TSE.2004.9. S2CID 18922186.

- Chen, Tao; Faniyi, Funmilade; Bahsoon, Rami; Lewis, Peter R.; Yao, Xin; Minku, Leandro L.; Esterle, Lukas (2014). The handbook of engineering self-aware and self-expressive systems (Report). EPiCS EU FP7 project consortium.

- Lewis, Peter; Bellman, Kirstie L.; Landauer, Christopher; Esterle, Lukas; Glette, Kyrre; Diaconescu, Ada & Giese, Holger (2017). "Chapter 3: Towards a Framework for the Levels and Aspects of Self-aware Computing Systems". In Kounev, Samuel; Kephart, Jeffrey O.; Milenkoski, Aleksandar & Zhu, Xiaoyun (eds.). Self-Aware Computing Systems (1st ed.). Springer International Publishing. pp. 51–85. doi:10.1007/978-3-319-47474-8_3. ISBN 978-3-319-47472-4.

- Alzuru, I.; Matsunaga, A.; Tsugawa, M.; Fortes, J. A. B. (2017). SELFIE: Self-Aware Information Extraction from Digitized Biocollections. 2017 IEEE 13th International Conference on e-Science (e-Science). Auckland, New Zealand: IEEE. pp. 69–78. doi:10.1109/eScience.2017.19.

- Kounev, S.; Huber, N.; Brosig, F.; Zhu, X. (2016). "A Model-Based Approach to Designing Self-Aware IT Systems and Infrastructures". Computer. IEEE. 49 (7): 53–61. doi:10.1109/MC.2016.198. S2CID 1683918.

- Huber, N.; Brosig, F.; Spinner, S.; Kounev, S.; Bähr, M. (2017). "Model-Based Self-Aware Performance and Resource Management Using the Descartes Modeling Language". IEEE Transactions on Software Engineering. IEEE. 43 (5): 432–452. doi:10.1109/TSE.2016.2613863. S2CID 206778721.

- Dutt, N.; TaheriNejad, N. (2016). Self-Awareness in Cyber-Physical Systems. 2016 29th International Conference on VLSI Design and 2016 15th International Conference on Embedded Systems (VLSID). Kolkata, India: IEEE. pp. 5–6. doi:10.1109/VLSID.2016.129.

- Dutt, N.; Jantsch, A.; Sarma, S. (2015). Self-aware Cyber-Physical Systems-on-Chip. 2015 IEEE/ACM International Conference on Computer-Aided Design (ICCAD). Austin, USA: IEEE. pp. 46–50. doi:10.1109/ICCAD.2015.7372548.

- Akbar, A.; Lewis, P. R. (2018). Self-Adaptive and Self-Aware Mobile-Cloud Hybrid Robotics. 2018 Fifth International Conference on Internet of Things: Systems, Management and Security. Valencia, Spain: IEEE. pp. 262–267. doi:10.1109/IoTSMS.2018.8554735.

- Esterle, L.; Rinner, B. (2018). An Architecture for Self-Aware IOT Applications. 2018 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). Calgary, Canada: IEEE. pp. 6588–6592. doi:10.1109/ICASSP.2018.8462005.

- Bagheri, Behrad; Yang, Shanhu; Kao, Hung-An; Lee, Jay (2015). "Cyber-physical Systems Architecture for Self-Aware Machines in Industry 4.0 Environment". IFAC-PapersOnLine. IFAC. 48 (3): 1622–1627. doi:10.1016/j.ifacol.2015.06.318.

- Kowalewski, S.; Braune, A.; Dernehl, C.; Greiner, T.; Koziolek, H.; Loskyll, M.; Niggemann, O.; Gatica, C. P.; Reißig, G. (2014). "Statusreport: Industrie 4.0 CPS-basierte Automation Forschungsbedarf anhand konkreter Fallbeispiele" (PDF). VDI/VDE-Gesellschaft für Mess- und Automatisierungstechnik (GMA).

- Kaiser, Kevin A.; Gebraeel, Z. (2009). "Predictive maintenance management using sensor-based degradation models". IEEE Transactions on Systems, Man, and Cybernetics-Part A: Systems and Humans. 39 (4): 840–849. doi:10.1109/TSMCA.2009.2016429. S2CID 5975976.

- Wilkie, David; van den Berg, Jur P.; Lin, Ming C.; Manocha, Dinesh (2011). "Self-Aware Traffic Route Planning". AAAI. 11: 1521–1527.

- Bacon, Jean; Bejan, Andrei Iu.; Beresford, Alastair R.; Evans, David; Gibbens, Richard J.; Moody, Ken (2011). "Using Real-Time Road Traffic Data to Evaluate Congestion". Dependable and Historic Computing 2011. Lecture Notes in Computer Science. 6875: 93–117. doi:10.1007/978-3-642-24541-1_9. ISBN 978-3-642-24540-4.

- Jiang, Zhihao; Pajic, Miroslav; Mangharam, Rahul (2012). "Cyber-physical modeling of implantable cardiac medical devices". Proceedings of the IEEE. 100 (1): 122–137. doi:10.1109/JPROC.2011.2161241. S2CID 7080536.

- Viventi, Jonathan; Kim, Dae-Hyeong; Vigeland, Leif; Frechette, Eric S.; Blanco, Justin A.; Kim, Yun-Suong; Avrin, Andrew E.; Tiruvadi, Vineet R.; Vanleer, Ann C. (2011). "Flexible, foldable, actively multiplexed, high-density electrode array for mapping brain activity in vivo". Nature Neuroscience. 14 (12): 1599–605. doi:10.1038/nn.2973. PMC 3235709. PMID 22081157.

- Tzallas, Alexandros T.; Tsipouras, Markos G.; Fotiadis, Dimitrios I. (2007). "Automatic seizure detection based on time-frequency analysis and artificial neural networks". Computational Intelligence and Neuroscience. 2007: 80510. doi:10.1155/2007/80510. PMC 2246039. PMID 18301712.

- Schilling, Klaus; Walter, Jürgen & Kounev, Samuel (2017). "Chapter 25: Spacecraft Autonomous Reaction Capabilities, Control Approaches and Self-Aware Computing". In Kounev, Samuel; Kephart, Jeffrey O.; Milenkoski, Aleksandar & Zhu, Xiaoyun (eds.). Self-Aware Computing Systems (1st ed.). Springer International Publishing. pp. 687–706. doi:10.1007/978-3-319-47474-8_1. ISBN 978-3-319-47472-4.

- Kaiser, Dennis; Lesch, Veronika; Rothe, Julian; Strohmeier, Michael; Spiess, Florian; Krupitzer, Christian; Montenegro, Sergio; Kounev, Samuel (2020). "Towards Self-Aware Multirotor Formations". Computers. Special Issue on Self-Aware Computing. MDPI. 9 (7): 1622–1627. doi:10.1016/j.ifacol.2015.06.318.

External links

- International Conference on Autonomic Computing (ICAC 2013)

- Autonomic Computing by Richard Murch published by IBM Press

- Autonomic Computing articles and tutorials

- Practical Autonomic Computing – Roadmap to Self Managing Technology

- Autonomic computing blog

- Whitestein Technologies – provider of development and integration environment for autonomic computing software

- Applied Autonomics provides Autonomic Web Services

- Enigmatec Website – providers of autonomic computing software

- Handsfree Networks – providers of autonomic computing software

- CASCADAS Project: Component-ware for Autonomic, Situation-aware Communications And Dynamically Adaptable, funded by the European Union

- CASCADAS Autonomic Tool-Kit in Open Source

- ANA Project: Autonomic Network Architecture Research Project, funded by the European Union

- JADE – A framework for developing autonomic administration software

- Barcelona Supercomputing Center – Autonomic Systems and eBusiness Platforms

- SOCRATES: Self-Optimization and Self-Configuration in Wireless Networks

- Dynamically Self Configuring Automotive Systems

- ASSL (Autonomic System Specification Language) : A Framework for Specification, Validation and Generation of Autonomic Systems

- Explanation of Autonomic Computing and its usage for business processes (IBM)- in German

- Autonomic Computing Architecture in the RKBExplorer

- International Journal of Autonomic Computing

- BiSNET/e: A Cognitive Sensor Networking Architecture with Evolutionary Multiobjective Optimization

- Licas: Open source framework for building service-based networks with integrated Autonomic Manager.