Nao (robot)

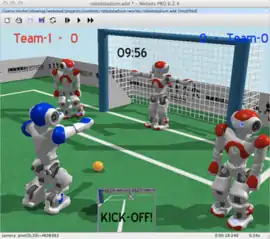

NAO (pronounced now) is an autonomous, programmable humanoid robot developed by Aldebaran Robotics, a French robotics company headquartered in Paris, which was acquired by SoftBank Group in 2015 and rebranded as SoftBank Robotics. The robot's development began with the launch of Project Nao in 2004. On 15 August 2007, Nao replaced Sony's robot dog Aibo as the robot used in the RoboCup Standard Platform League (SPL), an international robot soccer competition.[1] The Nao was used in RoboCup 2008 and 2009, and the NaoV3R was chosen as the platform for the SPL at RoboCup 2010.[2]

Figure: RoboCup, 2016

| Manufacturer | SoftBank Robotics (previously Aldebaran Robotics) |

|---|---|

| Country | France |

| Year of creation | 2008 (first public version) |

| Type | Humanoid robot |

| Purpose | Research, education and entertainment |

Several versions of the robot have been released since 2008. The Nao Academics Edition was developed for universities and laboratories for research and education purposes. It was released to institutions in 2008, and was made publicly available by 2011. Various upgrades to the Nao platform have since been released, including the 2011 Nao Next Gen and the 2014 Nao Evolution.[3][4]

Nao robots have been used for research and education purposes in numerous academic institutions worldwide. As of 2015, over 5,000 Nao units are in use in more than 50 countries.[4]

Development history

Aldebaran Robotics was established in 2005 by Bruno Maisonnier, who had previously begun developing the robot under "Project Nao" in 2004.[4] Six prototypes of Nao were designed between 2005 and 2007. In March 2008, the first production version of the robot, the Nao RoboCup Edition, was released to the contestants of that year's RoboCup.[5] The Nao Academics Edition was released to universities, educational institutions and research laboratories in late 2008.

In the summer of 2010, Nao made global headlines with a synchronized dance routine at the Shanghai Expo in China.[6] In October 2010, the University of Tokyo purchased 30 Nao robots for its Nakamura Lab, with hopes of developing the robots into active laboratory assistants.[7] In December 2010, a Nao robot was demonstrated doing a stand-up comedy routine,[8] and a new version of the robot was released, featuring sculpted arms and improved motors. In May 2011, Aldebaran announced that it would release Nao's controlling source code to the public as open-source software.[9] In June 2011, Aldebaran raised US$13 million in a round of venture funding led by Intel Capital.[10] In 2013, Aldebaran was acquired by Japan's SoftBank Mobile for US$100 million.[11]

In December 2011, Aldebaran released the Nao Next Gen, featuring hardware and software enhancements such as high density cameras, improved robustness, anti-collision systems and a faster walking speed.[3] The Nao Evolution, featuring enhanced durability, improved multilingual speech synthesis, improved shape and facial detection and recognition using new algorithms, and improved sound source location using four directional microphones, was released in June 2014.[4]

Aldebaran Robotics was acquired by SoftBank Group in 2015 and rebranded as SoftBank Robotics.

Academic and scientific usage

Since 2011, over 200 academic institutions worldwide have made use of the robot, including the University of Hertfordshire and their Bold Hearts RoboCup Team, the Indian Institute of Information Technology, Allahabad, the University of Tokyo,[7] the Indian Institute of Technology Kanpur,[12] Saudi Arabia's King Fahd University of Petroleum and Minerals, University of South Wales and Montana State University.[13][14] In 2012, donated Nao robots were used to teach autistic children in a UK school; some of the children found the childlike, expressive robots more relatable than human beings.[15][16] In a broader context, Nao robots have been used by numerous British schools to introduce children to robots and the robotics industry.[17]

By the end of 2014, over 5,000 Nao robots were in use with educational and research institutions in 70 countries.[4] In 2015, Mitsubishi UFJ Financial Group began trialling Nao robots for customer service use in its Japanese bank branches.[18] In July 2015, Nao robots were shown to demonstrate a basic form of self-awareness in a philosophical experiment at Rensselaer Polytechnic Institute in New York. Three robots were set up and two of them were muted; all three were then told that two of them had been given a "dumbing pill" and were asked to work out which of them had not. After initially replying that it did not know, the non-muted robot worked out that it had not been given the dumbing pill once it heard the sound of its own voice.[19] In September 2015, the French Institute of Health and Medical Research used Nao robots to test a system of robotic "autobiographical memory" designed to help train International Space Station crews and assist elderly patients.[20]

Nao is available as a research robot for schools, colleges and universities to teach programming and conduct research into human-robot interactions.[21]

In August 2018, RobotLAB released an online learning platform for schools that enhances the use of NAO for STEM, coding and engineering.[22]

Healthcare usage

Since its release in 2008, Nao has been tested and deployed in a number of healthcare scenarios, including use in care homes[23] and in schools.

Design

The various versions of the Nao robotics platform feature either 2, 14, 21 or 25 degrees of freedom (DoF). A specialised model with 21 DoF and no actuated hands was created for the RoboCup competition. All Nao Academics versions feature an inertial measurement unit with an accelerometer and a gyrometer, plus four ultrasonic sensors, which together provide Nao with stability and positioning within space. The legged versions include eight force-sensing resistors and two bumpers. The 2014 Nao Evolution featured stronger metallic joints, improved grip and an enhanced sound source location system that utilises four directional microphones.[4] The most recent version, dubbed NAO6, was introduced in June 2018.[24][25]

Software

The Nao robot is controlled by a specialised Linux-based operating system, dubbed NAOqi.[4] The OS powers the robot's multimedia system, which includes four microphones (for voice recognition and sound localization), two speakers (for multilingual text-to-speech synthesis) and two HD cameras (for computer vision, including facial and shape recognition). The robot also comes with a software suite that includes a graphical programming tool dubbed Choregraphe,[26] a simulation software package and a software developer's kit. Nao is furthermore compatible with the Microsoft Robotics Studio, Cyberbotics Webots, and the Gostai Studio (URBI).[27]
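As a rough illustration of what programming the robot through the software developer's kit can look like, the following Python sketch asks the text-to-speech module to speak a sentence. It assumes the NAOqi Python SDK is installed and exposes `ALProxy`, that the `ALTextToSpeech` module is reachable on port 9559, and that the robot answers at the placeholder address `192.0.2.10`; the helper names `say_text` and `main` are hypothetical and not part of the SDK.

```python
def say_text(proxy_factory, robot_ip, text, port=9559):
    """Ask the robot's text-to-speech module to speak `text`.

    `proxy_factory` is expected to behave like the SDK's ALProxy class:
    called with (module_name, ip, port), it returns a module proxy.
    """
    tts = proxy_factory("ALTextToSpeech", robot_ip, port)
    tts.say(text)


def main():
    # Imported lazily so this file still loads on machines without the SDK.
    from naoqi import ALProxy

    # 192.0.2.10 is a placeholder address (assumption), not a real robot.
    say_text(ALProxy, "192.0.2.10", "Hello, I am Nao")
```

Calling `main()` on a machine with the SDK and a reachable robot should make the robot speak. Passing the proxy factory as a parameter keeps the helper usable with a stand-in object when no robot is available.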

In August 2018, RobotLAB released Engage! K12, an online learning platform for schools that enhances the use of NAO for STEM, coding and engineering.[28] In February 2018, Finnish company Utelias Technologies released Elias Robot, a learning application that helps users learn languages with NAO.[29]

Specifications

| Robot Version | Nao V3+ (2008) | Nao V3.2 (2009) | Nao V3.3 (2010) | Nao Next Gen (V4) (2011)[30] | Nao Evolution (V5) (2014)[31] | Nao Power 6 (V6) (2018)[32] |

|---|---|---|---|---|---|---|

| Height | 573.2 millimetres (22.57 in) | 573 millimetres (22.6 in) | 574 millimetres (22.6 in) | |||

| Depth | 290 millimetres (11 in) | 311 millimetres (12.2 in) | ||||

| Width | 273.3 millimetres (10.76 in) | 275 millimetres (10.8 in) | ||||

| Weight | 4.83592 kilograms (10.6614 lb) | 4.996 kilograms (11.01 lb) | 5.182530 kilograms (11.42552 lb) | 5.305350006 kilograms (11.69629464 lb) | 5.48 kilograms (12.1 lb) | |

| Power supply | lithium battery providing 27.6 Wh at 21.6V | lithium battery providing 48.6 Wh at 21.6V | lithium battery providing 62.5 Wh at 21.6V | |||

| Autonomy | 60 minutes (active use) | 90 minutes (active use) | ||||

| Degrees of freedom | 25[33] | |||||

| CPU | x86 AMD GEODE 500MHz | Intel Atom Z530 @ 1.6 GHz | Intel Atom E3845 Quad Core @ 1.91 GHz | |||

| RAM | 256 MB | 1 GB | 4 GB DDR3 | |||

| Storage | 2 GB Flash memory | 2 GB Flash memory + 8 GB Micro SDHC | 32 GB SSD | |||

| Built-in OS | OpenNAO 1.6 (OpenEmbedded-based) | OpenNAO 1.8 (OpenEmbedded-based) | OpenNAO 1.10 (OpenEmbedded-based) | OpenNAO 1.12 (Gentoo-based) | NAOqi 2.1 (Gentoo-based) | NAOqi 2.8 (OpenEmbedded-based) |

| Compatible OS | Windows, Mac OS, Linux | |||||

| Programming languages | C++, Python, Java, MATLAB, Urbi, C, .Net | |||||

| Simulation environment | Webots | |||||

| Cameras | Two OV7670 58°DFOV cameras | Two MT9M114 72.6°DFOV cameras | Two HD OV5640 67.4°DFOV cameras | |||

| Sensors | 36 x MRE (Magnetic Rotary Encoders) using Hall-effect sensor technology, 12-bit precision, i.e. 4096 values per turn, corresponding to about 0.1° precision. 2 x 1-axis gyrometer. 1 x 3-axis accelerometer. 8 x FSR (Force Sensitive Resistors). 2 x bumpers located at the tip of each foot; these are simple ON/OFF switches, with no difference between a push on the left or right foot. Sonar: 2 emitters, 2 receivers; frequency 40 kHz; sensitivity -86 dB; resolution 10 mm; detection range 0.25-2.55 m; effective cone 60°. 2 x I/R: wavelength 940 nm; emission angle +/- 60°; power 8 mW/sr. 4 microphones: sensitivity -40 +/- 3 dB; frequency range 20 Hz-20 kHz; signal/noise ratio 58 dBA. 2 x camera: OV7670 VGA (640x480), 30 fps; focus range 30 cm to infinity; 58° diagonal field of view (47.8° horizontal FOV, 36.8° vertical FOV). Capacitive sensor. | 36 x MRE (Magnetic Rotary Encoders) using Hall-effect sensor technology, 12-bit precision, i.e. 4096 values per turn, corresponding to about 0.1° precision. 2 x 1-axis gyrometer. 1 x 3-axis accelerometer. 8 x FSR (Force Sensitive Resistors). 2 x bumpers located at the tip of each foot; these are simple ON/OFF switches, with no difference between a push on the left or right foot. Sonar: 2 emitters, 2 receivers; frequency 40 kHz; sensitivity -86 dB; resolution 10 mm; detection range 0.25-2.55 m; effective cone 60°. 2 x I/R: wavelength 940 nm; emission angle +/- 60°; power 8 mW/sr. 4 microphones: sensitivity -40 +/- 3 dB; frequency range 20 Hz-20 kHz; signal/noise ratio 58 dBA. 2 x camera: MT9M114 960p (1280x960), 30 fps; focus range 30 cm to infinity; 72.6° diagonal field of view (60.9° horizontal FOV, 47.6° vertical FOV). Capacitive sensor. | 36 x MRE (Magnetic Rotary Encoders) using Hall-effect sensor technology, 12-bit precision, i.e. 4096 values per turn, corresponding to about 0.1° precision. 3-axis gyrometer. 3-axis accelerometer. 8 x FSR (Force Sensitive Resistors). 2 x bumpers located at the tip of each foot; these are simple ON/OFF switches, with no difference between a push on the left or right foot. Sonar: 2 emitters, 2 receivers; frequency 40 kHz; resolution 1 cm-4 cm (depending on distance); detection range 0.20-3 m; effective cone 60°. 2 x I/R: wavelength 940 nm; emission angle +/- 60°; power 8 mW/sr. 4 microphones on the head: sensitivity 20 mV/Pa +/- 3 dB at 1 kHz; frequency range 150 Hz to 12 kHz. 2 x camera: MT9M114 960p (1280x960), 30 fps; focus range 30 cm to infinity; 72.6° diagonal field of view (60.9° horizontal FOV, 47.6° vertical FOV). Capacitive sensor. | 36 x MRE (Magnetic Rotary Encoders) using Hall-effect sensor technology, 12-bit precision, i.e. 4096 values per turn, corresponding to about 0.1° precision. 3-axis gyrometer. 3-axis accelerometer. 8 x FSR (Force Sensitive Resistors). 2 x bumpers located at the tip of each foot; these are simple ON/OFF switches, with no difference between a push on the left or right foot. Sonar: 2 emitters, 2 receivers; frequency 40 kHz; resolution 1 cm-4 cm (depending on distance); detection range 0.20-3 m; effective cone 60°. 2 x I/R: wavelength 940 nm; emission angle +/- 60°; power 8 mW/sr. 4 omnidirectional microphones: sensitivity 250 mV/Pa +/- 3 dB at 1 kHz; frequency range 100 Hz to 10 kHz (-10 dB relative to 1 kHz). 2 x camera: model OV5640; type system-on-a-chip (SoC) CMOS image sensor; resolution 5 Mp; optical format 1/4 inch; active pixels (HxV) 2592x1944; pixel size 1.4 µm x 1.4 µm; dynamic range 68 dB at 8x gain; signal/noise ratio 36 dB (maximum); responsivity 600 mV/Lux-sec; output 640x480 at 30 fps or 2560x1920 at 1 fps; data format YUV and RGB; rolling shutter; field of view 67.4° DFOV (56.3° HFOV, 43.7° VFOV); auto focus. Capacitive sensor. | ||

| Connectivity | Ethernet, Wi-Fi IEEE 802.11 a/b/g | Ethernet, Wi-Fi IEEE 802.11 a/b/g/n | ||||
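Two of the sensor figures in the table can be cross-checked with simple arithmetic. The sketch below derives the angular step of a 12-bit rotary encoder (360° divided by 4096 values per turn) and estimates a camera's diagonal field of view from its horizontal and vertical values. The second calculation assumes an ideal rectilinear (pinhole) lens, which is an assumption made here rather than something the datasheets state, and the function names are illustrative only.

```python
import math


def encoder_resolution_deg(bits):
    """Angular step of a rotary encoder with 2**bits values per turn."""
    return 360.0 / (2 ** bits)


def diagonal_fov_deg(h_fov_deg, v_fov_deg):
    """Diagonal field of view of an ideal rectilinear lens.

    For a pinhole model, the half-angle tangents combine like the sides
    of the sensor rectangle: tan(d/2)^2 = tan(h/2)^2 + tan(v/2)^2.
    """
    tan_h = math.tan(math.radians(h_fov_deg) / 2)
    tan_v = math.tan(math.radians(v_fov_deg) / 2)
    return 2 * math.degrees(math.atan(math.hypot(tan_h, tan_v)))


if __name__ == "__main__":
    # 12-bit encoders: 4096 values per turn, a step of just under 0.1 degrees.
    print(encoder_resolution_deg(12))
    # Horizontal and vertical FOV pairs quoted for the three camera models.
    for h, v in [(47.8, 36.8), (60.9, 47.6), (56.3, 43.7)]:
        print(h, v, round(diagonal_fov_deg(h, v), 1))
```

Under that lens assumption, the computed diagonals land within a few tenths of a degree of the 58°, 72.6° and 67.4° values quoted in the table, and the encoder step of roughly 0.088° is consistent with the stated "about 0.1°" precision.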


References

- "Nao robot replaces AIBO in RoboCup Standard Platform League". Engadget. 16 August 2007. Retrieved 4 October 2012.

- "UK robots prepare for world cup". BBC. 25 October 2010. Retrieved 4 October 2012.

- "Aldebaran Robotics announces Nao Next Gen humanoid robot". Engadget. 10 December 2011. Retrieved 15 April 2012.

- "Unveiling of NAO Evolution: a stronger robot and a more comprehensive operating system". Aldebaran Robotics. 2014. Archived from the original on 1 February 2015. Retrieved 1 February 2015.

- "RoboCup Standard Platform League". Tzi.de. Retrieved 4 October 2012.

- "Robotic mascot entertains at Shanghai Expo". ChannelNewsAsia.com. 21 June 2010. Retrieved 4 October 2012.

- "Le robot français Nao fait ses classes à l'Université de Tokyo" Archived 21 October 2010 at the Wayback Machine (in French). L'Express. Retrieved 4 October 2012.

- "Heather Knight: Silicon-based comedy". TED. December 2010. Retrieved 4 October 2012.

- "Aldebaran to Open Source NAO's code" Archived 24 July 2012 at the Wayback Machine. Nao Developer. 13 May 2011. Retrieved 4 October 2012.

- "Aldebaran raises $13 million in round led by Intel Capital". Aldebaran Robotics. 2011. Retrieved 4 October 2012.

- "The Sad Story of Softbank's Aldebaran Robotics and its Emotionally Intelligent Robot". RudeBaguette.com. 15 December 2012. Retrieved 4 February 2015.

- "Robot that walks, talks, emotes like humans...'Nao'". Times of India. 4 February 2013. Retrieved 8 February 2013.

- Nash, Audrow (23 January 2015). "Robots: Looney the Robot". RobotsPodcast.com. Retrieved 10 February 2015.

- "Nao, le robot que les universités s'arrachent" (in French). DigiSchool Média. 4 February 2013. Archived from the original on 1 February 2014. Retrieved 16 February 2013.

- "Robots in the classroom help autistic children learn". BBC. 8 November 2012. Retrieved 9 November 2012.

- "AskNAO". Aldebaran Robotics. Archived from the original on 5 February 2015. Retrieved 1 February 2015.

- "Robots found in the classroom". Active-Robots.com. 12 September 2014. Retrieved 1 February 2015.

- "Japanese bank introduces robot workers to deal with customers in branches". The Guardian. 4 February 2015. Retrieved 4 February 2015.

- "Polite robots show glimmer of self-awareness". Popular Science. 16 July 2015. Retrieved 23 December 2015.

- "'Autobiographical memory' lets robots act as knowledge go-betweens for ISS crews". Gizmag.com. 8 September 2015. Retrieved 16 September 2015.

- "For education & research". SoftBank Robotics. Retrieved 30 September 2016.

- "Launch of Engage! K12". Markets Insider. Retrieved 6 August 2018.

- https://www.express.co.uk/news/uk/958844/uk-care-homes-robots-elderly

- "NAO6 Press Kit .PDF". SoftBank Robotics. Announced June 21, 2018.

- "MODEL: H25600 Specifications .PDF". SoftBank Robotics. Announced June 21, 2018

- Choregraphe User Guide. Aldebaran Robotics. Retrieved 1 February 2015.

- "NAO NEXT Gen H25 Datasheet". Aldebaran Robotics. December 2011. Retrieved 4 October 2012.

- "Launch of Engage! K12". Markets Insider. Retrieved 6 August 2018.

- "Techno Teachers".

- "NAO Technical overview — NAO Software 1.14.5 documentation". doc.aldebaran.com. Retrieved 21 May 2019.

- "NAO - Construction — Aldebaran 2.1.4.13 documentation". doc.aldebaran.com. Retrieved 21 May 2019.

- "NAO Power V6 Standard Edition". RobotLAB. 2018. Retrieved 1 August 2018.

- "NAO degrees of freedom (3D animation)". Retrieved 18 May 2018.

External links


- Aldebaran Robotics official website and YouTube channel

- "My day with a robot". BBC News. 15 September 2015.

- Blanca Li dance performance with Nao robot (World Science Festival)

- Barboza Space Center (The Occupy Mars Learning Adventures Projects with Nao) www.BarbozaSpaceCenter.com