Neurorobotics

Neurorobotics, a combined study of neuroscience, robotics, and artificial intelligence, is the science and technology of embodied autonomous neural systems. Neural systems include brain-inspired algorithms (e.g. connectionist networks), computational models of biological neural networks (e.g. artificial spiking neural networks, large-scale simulations of neural microcircuits), and actual biological systems (e.g. in vivo and in vitro neural nets). Such neural systems can be embodied in machines with mechanical or any other form of physical actuation. These include robots and prosthetic or wearable systems, but also micro-machines at the smaller scale and furniture and infrastructure at the larger scale.

Neurorobotics is the branch of neuroscience, combined with robotics, that deals with the study and application of the science and technology of embodied autonomous neural systems, such as brain-inspired algorithms. At its core, neurorobotics is based on the idea that the brain is embodied and the body is embedded in the environment. Therefore, most neurorobots are required to function in the real world, as opposed to a simulated environment.[1]

Beyond brain-inspired algorithms for robots, neurorobotics may also involve the design of brain-controlled robot systems.[2][3][4]

Introduction

Neurorobotics represents a two-front approach to the study of intelligence. Neuroscience attempts to discern what intelligence consists of and how it works by investigating intelligent biological systems, while the study of artificial intelligence attempts to recreate intelligence through non-biological, or artificial, means. Neurorobotics is the overlap of the two: biologically inspired theories are tested in a grounded environment, with a physical implementation of the model. The successes and failures of a neurorobot and the model it is built from can provide evidence to support or refute the underlying theory, and give insight for future study.

Major classes of neurorobotic models

Neurorobots can be divided into various major classes based on the robot's purpose. Each class is designed to implement a specific mechanism of interest for study. Common types of neurorobots are those used to study motor control, memory, action selection, and perception.

Locomotion and motor control

Neurorobots are often used to study motor feedback and control systems, and have proved their merit in developing controllers for robots. Locomotion is modeled by a number of neurologically inspired theories on the action of motor systems. Locomotion control has been mimicked using models of central pattern generators, clusters of neurons capable of driving repetitive behavior, to make four-legged walking robots.[5] Other groups have expanded on the idea of combining rudimentary control systems into a hierarchical set of simple autonomous systems. These systems can formulate complex movements from a combination of these rudimentary subsets.[6] This theory of motor action is based on the organization of cortical columns, which progressively integrate simple sensory input into complex afferent signals, or decompose complex motor programs into simple controls for each muscle fiber in efferent signals, forming a similar hierarchical structure.
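
Central pattern generators are often modeled in software as a network of coupled phase oscillators, one per limb, whose coupling terms pull the oscillators into a fixed phase relationship. The sketch below is a minimal illustration under stated assumptions: Kuramoto-style sine coupling, identical intrinsic frequencies, a trot gait, and a hypothetical leg ordering. The function names and parameter values are illustrative and are not taken from the salamander study cited above.

```python
import math

# Hypothetical leg order: left-front, right-front, left-hind, right-hind.
# Trot gait (assumed): diagonal legs (LF+RH, RF+LH) move in phase,
# and the two diagonal pairs are half a cycle apart.
TROT_OFFSETS = [0.0, math.pi, math.pi, 0.0]


def wrap(angle):
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


def simulate_cpg(offsets, init_phases, freq_hz=1.0, coupling=2.0,
                 dt=0.001, steps=5000):
    """Euler-integrate coupled phase oscillators, one per leg.

    Every oscillator has the same intrinsic frequency and is pulled
    toward the relative phases given by `offsets`.
    """
    phases = list(init_phases)
    omega = 2 * math.pi * freq_hz
    n = len(phases)
    for _ in range(steps):
        rates = []
        for i in range(n):
            dphi = omega
            for j in range(n):
                if j != i:
                    # This term vanishes once phase_j - phase_i equals
                    # the desired offset difference.
                    dphi += coupling * math.sin(
                        phases[j] - phases[i] - (offsets[j] - offsets[i]))
            rates.append(dphi)
        phases = [p + dt * r for p, r in zip(phases, rates)]
    return phases


def joint_angles(phases, amplitude=0.4):
    """Rhythmic hip-joint command (radians) derived from each oscillator's phase."""
    return [amplitude * math.cos(p) for p in phases]


# Start from an uncoordinated state; the network settles into a trot.
final = simulate_cpg(TROT_OFFSETS, init_phases=[0.0, 2.5, 2.8, 0.4])
print([round(wrap(p - final[0]), 3) for p in final])
```

Because every oscillator is driven at the same intrinsic frequency, the coupling only has to fix the relative phases; under the same assumptions, swapping `TROT_OFFSETS` for a different set of offsets would select a different gait without changing the oscillators themselves.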

Another method for motor control uses learned error correction and predictive control to form a sort of simulated muscle memory. In this model, awkward, random, and error-prone movements are corrected using error feedback, producing smooth and accurate movements over time. The controller learns to create the correct control signal by predicting the error. Using these ideas, robots have been designed that can learn to produce adaptive arm movements[7] or to avoid obstacles along a course.
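
One standard way to formalize learning a control signal from errors is feedback-error learning, in which the command produced by a simple feedback controller serves as the teaching signal for a feedforward (predictive) controller. The sketch below assumes a static one-joint plant whose gain is unknown to the controller; `feedback_error_learning` and its parameters are hypothetical and are not the cerebellar model of the cited arm study.

```python
import random


def feedback_error_learning(plant_gain=0.5, kp=1.0, lr=0.1, trials=500, seed=0):
    """Train a feedforward gain using the feedback command as the error signal.

    Hypothetical one-joint plant: joint position = plant_gain * command.
    Returns the learned feedforward weight and |feedback command| per trial.
    """
    rng = random.Random(seed)
    w = 0.0  # feedforward weight; the controller starts with no internal model
    feedback_sizes = []
    for _ in range(trials):
        target = rng.uniform(-1.0, 1.0)  # desired joint position for this trial
        u_ff = w * target                # predictive (feedforward) command
        reached = plant_gain * u_ff      # where the feedforward command alone lands
        u_fb = kp * (target - reached)   # corrective command from the observed error
        # The feedback command is the teaching signal: shift its job onto w.
        w += lr * u_fb * target
        feedback_sizes.append(abs(u_fb))
    return w, feedback_sizes


w, feedback_sizes = feedback_error_learning()
```

As the feedforward weight `w` approaches `1 / plant_gain` (2.0 with the defaults), the feedback command shrinks toward zero, mirroring the transition from corrected, error-prone movements to accurate ones.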

Learning and memory systems

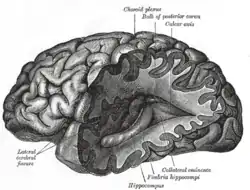

Neurorobots have also been designed to test theories of animal memory systems. Many studies currently examine the memory system of rats, particularly the rat hippocampus and its place cells, which fire when the animal is at a specific learned location.[8][9] Systems modeled after the rat hippocampus are generally able to learn mental maps of the environment, including recognizing landmarks and associating behaviors with them, allowing them to predict upcoming obstacles and landmarks.[9]
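
A common idealization treats each place cell's firing rate as a Gaussian function of the animal's distance from the cell's preferred location, and reads out position as the rate-weighted average of the field centers (a population-vector decoder). The sketch below assumes a 1 m square arena, an 11 x 11 grid of fields, and a 10 cm field width; these values and the function names are illustrative, not taken from the cited studies.

```python
import math

# Hypothetical arena: a 1 m x 1 m square with place-field centers on an
# 11 x 11 grid (one cell every 10 cm).
CENTERS = [(i / 10, j / 10) for i in range(11) for j in range(11)]


def place_cell_rates(pos, centers, width=0.1, max_rate=20.0):
    """Firing rate (Hz) of each place cell, modeled as a 2-D Gaussian bump."""
    rates = []
    for cx, cy in centers:
        d2 = (pos[0] - cx) ** 2 + (pos[1] - cy) ** 2
        rates.append(max_rate * math.exp(-d2 / (2 * width ** 2)))
    return rates


def decode_position(rates, centers):
    """Population-vector estimate: rate-weighted mean of the field centers."""
    total = sum(rates)
    x = sum(r * cx for r, (cx, _) in zip(rates, centers)) / total
    y = sum(r * cy for r, (_, cy) in zip(rates, centers)) / total
    return x, y


rates = place_cell_rates((0.43, 0.61), CENTERS)
most_active = CENTERS[rates.index(max(rates))]
estimate = decode_position(rates, CENTERS)
```

For the position (0.43, 0.61), the most active cell is the one centered at (0.4, 0.6), and the decoded estimate lies close to the true position because overlapping fields let neighboring cells interpolate between grid points.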

Another study produced a robot based on the proposed learning paradigm of barn owls for orientation and localization based primarily on auditory, but also on visual, stimuli. The hypothesized method involves synaptic plasticity and neuromodulation,[10] a mostly chemical effect in which reward neurotransmitters such as dopamine or serotonin sharpen the firing sensitivity of a neuron.[11] The robot used in the study adequately matched the behavior of barn owls.[12] Furthermore, the close interaction between motor output and auditory feedback proved to be vital in the learning process, supporting active sensing theories that are involved in many of the learning models.[10]
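
Neuromodulated plasticity is often idealized computationally as a three-factor learning rule: a synapse changes only when presynaptic and postsynaptic activity coincide, and the sign and size of the change are set by a global modulatory (reward) signal. The sketch below applies such a rule to a toy orienting task in which a sound arrives from one of several discrete direction bins and the robot must learn which head turn centers it. The binning, reward values, epsilon-greedy exploration, and all names are assumptions for illustration; they are not details of the barn owl robot described above.

```python
import random


def train_orienting_map(n_bins=5, trials=2000, lr=0.1, epsilon=0.2, seed=1):
    """Learn which head turn (motor bin) centers a sound from each direction bin."""
    rng = random.Random(seed)
    # w[m][c]: synaptic weight from auditory cue unit c to motor unit m.
    w = [[0.0] * n_bins for _ in range(n_bins)]
    for _ in range(trials):
        cue = rng.randrange(n_bins)  # direction bin of the sound source
        if rng.random() < epsilon:
            motor = rng.randrange(n_bins)  # exploratory head turn
        else:
            motor = max(range(n_bins), key=lambda m: w[m][cue])
        # Assumed toy world: turning to the bin matching the cue finds the target.
        reward = 1.0 if motor == cue else -0.1  # scalar neuromodulatory signal
        pre = [1.0 if c == cue else 0.0 for c in range(n_bins)]     # presynaptic activity
        post = [1.0 if m == motor else 0.0 for m in range(n_bins)]  # postsynaptic activity
        for m in range(n_bins):
            for c in range(n_bins):
                # Three-factor rule: modulator x presynaptic x postsynaptic.
                w[m][c] += lr * reward * pre[c] * post[m]
    return w


w = train_orienting_map()
learned = [max(range(5), key=lambda m: w[m][c]) for c in range(5)]
```

Without the reward factor, the same Hebbian update would strengthen whatever turn the robot happened to make; the modulatory signal is what makes coincident activity count only when the outcome is good.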

Neurorobots in these studies are presented with simple mazes or patterns to learn. Some of the problems presented to the neurorobot include recognizing symbols, colors, or other patterns and executing simple actions based on them. In the case of the barn owl simulation, the robot had to determine its location and direction to navigate in its environment.

Action selection and value systems

Action selection studies deal with assigning negative or positive weight to an action and its outcome. Neurorobots can be, and have been, used to study *simple* ethical interactions, such as the classical thought experiment in which there are more people than a life raft can hold and someone must leave the boat to save the rest. However, most neurorobots used in the study of action selection contend with much simpler drives, such as self-preservation or perpetuation of the population of robots in the study. These neurorobots are modeled after the neuromodulation of synapses to encourage circuits with positive results.[11][13] In biological systems, neurotransmitters such as dopamine or acetylcholine positively reinforce neural signals that are beneficial. One study of such interaction involved the robot Darwin VII, which used visual, auditory, and simulated taste input to "eat" conductive metal blocks. The arbitrarily chosen good blocks had a striped pattern on them, while the bad blocks had a circular shape on them. The taste sense was simulated by the conductivity of the blocks, and the robot received positive or negative feedback from taste depending on a block's level of conductivity. The researchers observed the robot to see how it learned its action selection behaviors based on its inputs.[14] Other studies have used herds of small robots that feed on batteries strewn about the room and communicate their findings to other robots.[15]
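
The value-based learning described for Darwin VII can be illustrated, in highly simplified form, with a delta-rule (Rescorla-Wagner-style) value update. This is a swapped-in textbook technique, not the neural model used in the Darwin VII study: the sketch assumes each visual pattern maps to a fixed conductivity, that taste gives a reward of +1 or -1, and that the robot tastes only the blocks it chooses to approach. All names are hypothetical.

```python
import random

# Hypothetical setup: each visual pattern is tied to a hidden conductivity.
CONDUCTIVE = {"striped": True, "circular": False}


def taste(conductive):
    """Simulated taste reward: +1 for conductive ('good') blocks, -1 otherwise."""
    return 1.0 if conductive else -1.0


def choose_action(value, pattern):
    """Approach blocks whose learned value is not negative; avoid the rest."""
    return "approach" if value[pattern] >= 0.0 else "avoid"


def learn_values(trials=200, lr=0.2, seed=2):
    rng = random.Random(seed)
    value = {p: 0.0 for p in CONDUCTIVE}  # learned value of each visual pattern
    for _ in range(trials):
        pattern = rng.choice(sorted(CONDUCTIVE))  # block the robot happens to see
        if choose_action(value, pattern) == "approach":
            # Only blocks that are picked up get tasted, so only they are updated.
            reward = taste(CONDUCTIVE[pattern])
            # Delta rule: move the predicted value toward the experienced reward.
            value[pattern] += lr * (reward - value[pattern])
    return value


value = learn_values()
```

Because the robot never tastes a block it avoids, one bad experience is enough to stop it approaching circular blocks; a fuller model would need some exploration to recover if the pattern-taste contingency changed.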

Sensory perception

Neurorobots have also been used to study sensory perception, particularly vision. These are primarily systems that result from embedding neural models of sensory pathways in automata. This approach exposes the models to the sensory signals that occur during behavior and also enables a more realistic assessment of the model's robustness. It is well known that changes in the sensory signals produced by motor activity provide useful perceptual cues that organisms use extensively. For example, researchers have used the depth information that emerges during replication of human head and eye movements to establish robust representations of the visual scene.[16][17]
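
The depth cue mentioned above can be illustrated with a simplified pinhole-camera model. One way such a cue can arise is that when a robotic eye rotates about a center offset from the camera's optical nodal point, the nodal point is displaced, and nearby objects shift in the image more than distant ones. The sketch below assumes that displacement can be treated as a pure lateral translation (ignoring the accompanying rotation, which a real system must account for) and a focal length of 500 pixels; it illustrates motion parallax in general and is not the estimation method of the cited studies.

```python
def project(x, z, cam_x, focal_px=500.0):
    """Pinhole projection: image coordinate (pixels) of a point at lateral
    position x and depth z, seen from a camera whose nodal point is at cam_x."""
    return focal_px * (x - cam_x) / z


def depth_from_parallax(u_before, u_after, baseline, focal_px=500.0):
    """Invert the projection: a lateral shift of `baseline` meters moves the
    image of a point at depth z by focal_px * baseline / z pixels."""
    shift = u_before - u_after
    return focal_px * baseline / shift


baseline = 0.01  # assumed 1 cm lateral displacement of the nodal point
near = (project(0.1, 0.5, 0.0), project(0.1, 0.5, baseline))
far = (project(0.1, 2.0, 0.0), project(0.1, 2.0, baseline))
print(depth_from_parallax(*near, baseline), depth_from_parallax(*far, baseline))
```

With the assumed 1 cm displacement, the point 0.5 m away shifts by 10 pixels while the point 2 m away shifts by only 2.5 pixels, and inverting the projection recovers both depths.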

Biological robots

Biological robots are not officially neurorobots, in that they are not neurologically inspired AI systems but actual neural tissue wired to a robot. This approach uses cultured neural networks to study brain development or neural interactions. These typically consist of a neural culture raised on a multielectrode array (MEA), which is capable of both recording the neural activity and stimulating the tissue. In some cases, the MEA is connected to a computer that presents a simulated environment to the brain tissue, translates brain activity into actions in the simulation, and provides sensory feedback.[18] The ability to record neural activity gives researchers a window into a brain, albeit a simple one, which they can use to study many of the same issues that neurorobots are used for.
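
The closed loop described above (record activity, translate it into actions in a simulated body, and return sensory feedback as stimulation) can be sketched as three small functions. Everything here is hypothetical: the rate-based motor decoder, the distance-to-frequency sensory encoder, the differential-drive body, and all gains and ranges are assumptions for illustration and are not the scheme used in the cited animat study. Recorded rates are replaced by made-up numbers, since no MEA interface is modeled.

```python
import math


def encode_distance(distance, max_distance=1.0, min_hz=1.0, max_hz=20.0):
    """Hypothetical sensory encoding: nearer obstacles -> faster stimulation (Hz)."""
    d = min(max(distance, 0.0), max_distance)
    return max_hz - (max_hz - min_hz) * d / max_distance


def decode_wheels(rates_left, rates_right, gain=0.01):
    """Hypothetical motor decoding: mean firing rate (Hz) of two electrode
    groups -> left/right wheel speeds (m/s)."""
    return (gain * sum(rates_left) / len(rates_left),
            gain * sum(rates_right) / len(rates_right))


def step(pose, wheels, axle=0.1, dt=0.1):
    """Differential-drive kinematics for the simulated body."""
    x, y, heading = pose
    v_left, v_right = wheels
    v = (v_left + v_right) / 2.0
    omega = (v_right - v_left) / axle
    return (x + v * math.cos(heading) * dt,
            y + v * math.sin(heading) * dt,
            heading + omega * dt)


# One iteration of the loop, with made-up recorded rates standing in for the MEA.
stim_hz = encode_distance(0.25)  # would be sent back to the culture
wheels = decode_wheels([10.0, 12.0], [10.0, 12.0])
pose = step((0.0, 0.0, 0.0), wheels)
```

In a running system these three calls would repeat every cycle, with `stim_hz` driving the stimulation electrodes and fresh recordings replacing the fixed rates.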

An area of concern with biological robots is ethics. Many questions are raised about how such experiments should be treated. Perhaps the most important question is that of consciousness, and whether or not the cultured rat brain tissue experiences it. The answer depends on which of the many theories of consciousness one adopts.[19][20]

See also: Hybrot, consciousness.

Implications for neuroscience

Neuroscientists benefit from neurorobotics because it provides a blank slate on which to test various possible methods of brain function in a controlled and testable environment. Furthermore, while the robots are simplified versions of the systems they emulate, they are more specific, allowing more direct testing of the issue at hand.[10] They also have the benefit of being accessible at all times, whereas it is much more difficult to monitor even large portions of a brain while the animal is active, let alone individual neurons.

As the field of neuroscience has grown, numerous neural treatments have emerged, from pharmaceuticals to neural rehabilitation.[21] Progress depends on a detailed understanding of the brain and how exactly it functions. The brain is very difficult to study, especially in humans, because of the danger associated with cranial surgery. Therefore, the use of technology to fill the void of testable subjects is vital. Neurorobots accomplish exactly this, extending the range of tests and experiments that can be performed in the study of neural processes.

References

- Chiel, H. J., & Beer, R. D. (1997). The brain has a body: adaptive behavior emerges from interactions of nervous system, body and environment. Trends in Neurosciences, 20(12), 553-557.

- Vannucci, L.; Ambrosano, A.; Cauli, N.; Albanese, U.; Falotico, E.; Ulbrich, S.; Pfotzer, L.; Hinkel, G.; Denninger, O.; Peppicelli, D.; Guyot, L.; Arnim, A. Von; Deser, S.; Maier, P.; Dillman, R.; Klinker, G.; Levi, P.; Knoll, A.; Gewaltig, M. O.; Laschi, C. (1 November 2015). "A visual tracking model implemented on the iCub robot as a use case for a novel neurorobotic toolkit integrating brain and physics simulation". 2015 IEEE-RAS 15th International Conference on Humanoid Robots (Humanoids): 1179–1184. doi:10.1109/HUMANOIDS.2015.7363512. ISBN 978-1-4799-6885-5.

- "Brain - Supported Learning Algorithms for Robots" (PDF). Retrieved 9 April 2017.

- "A Basic Neurorobotics Platform Using the Neurosky Mindwave". Ern Arrowsmith. 2 October 2012. Retrieved 9 April 2017.

- Ijspeert, A. J., Crespi, A., Ryczko, D., and Cabelguen, J. M. (2007). From swimming to walking with a salamander robot driven by a spinal cord model. Science 315, 1416-1420.

- Giszter, S. F., Moxon, K. A., Rybak, I. A., & Chapin, J. K. (2001). Neurobiological and neurorobotic approaches to control architectures for a humanoid motor system. Robotics and Autonomous Systems, 37(2-3), 219-235.

- Eskiizmirliler, S., Forestier, N., Tondu, B., and Darlot, C. (2002). A model of the cerebellar pathways applied to the control of a single-joint robot arm actuated by McKibben artificial muscles. Biol Cybern 86, 379-394.

- O'Keefe, J., and Nadel, L. (1978). The hippocampus as a cognitive map (Oxford: Clarendon Press).

- Mataric, M. J. (1998). Behavior-based robotics as a tool for synthesis of artificial behavior and analysis of natural behavior. Trends in Cognitive Sciences, 2(3), 82-87.

- Rucci, M., Bullock, D., & Santini, F. (2007). Integrating robotics and neuroscience: brains for robots, bodies for brains. Advanced Robotics, 21(10), 1115-1129.

- Cox, B. R., & Krichmar, J. L. (2009). Neuromodulation as a robot controller: a brain-inspired strategy for controlling autonomous robots. IEEE Robotics & Automation Magazine, 16(3), 72-80. doi:10.1109/mra.2009.933628

- Rucci, M., Edelman, G. M., and Wray, J. (1999). Adaptation of orienting behavior: from the barn owl to a robotic system. IEEE Transactions on Robotics and Automation, 15(1), 96-110.

- Hasselmo, M. E., Hay, J., Ilyn, M., and Gorchetchnikov, A. (2002). Neuromodulation, theta rhythm and rat spatial navigation. Neural Netw 15, 689-707.

- Krichmar, J. L., and Edelman, G. M. (2002). Machine Psychology: Autonomous Behavior, Perceptual Categorization, and Conditioning in a Brain-Based Device. Cerebral Cortex 12, 818-830.

- Doya, K., and Uchibe, E. (2005). The Cyber Rodent Project: Exploration of Adaptive Mechanisms for Self-Preservation and Self-Reproduction. Adaptive Behavior 13, 149-160.

- Santini, F., and Rucci, M. (2007). Active estimation of distance in a robotic system that replicates human eye movements. Robotics and Autonomous Systems, 55, 107-121.

- Kuang, K., Gibson, M., Shi, B. E., Rucci, M. (2012). Active vision during coordinated head/eye movements in a humanoid robot, IEEE Transactions on Robotics, 99, 1-8.

- DeMarse, T. B., Wagenaar, D. A., Blau, A. W. and Potter, S. M. (2001). "The Neurally Controlled Animat: Biological Brains Acting with Simulated Bodies." Autonomous Robots 11: 305-310.

- Warwick, K. (2010). Implications and consequences of robots with biological brains. Ethics and Information Technology, 12(3), 223-234. doi:10.1007/s10676-010-9218-6

- "IOS Press Ebooks - Brains on Wheels: Theoretical and Ethical Issues in Bio-Robotics". ebooks.iospress.nl. Retrieved 2018-11-14.

- Bach-y-Rita, P. (1999). Theoretical aspects of sensory substitution and of neurotransmission-related reorganization in spinal cord injury. Spinal Cord, 37(7), 465-474.

External links

- Neurorobotics on Scholarpedia (Jeff Krichmar (2008), Scholarpedia, 3(3):1365)

- A lab that focuses on neurorobotics at Northwestern University.

- Frontiers in Neurorobotics.

- Neurorobotics: an experimental science of embodiment by Frederic Kaplan

- Neurorobotics Lab, Control Systems Lab, National Technical University of Athens (Prof. Kostas J. Kyriakopoulos)

- Neurorobotics in the Human Brain Project