Hyperplane separation theorem

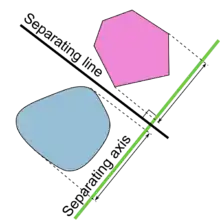

In geometry, the hyperplane separation theorem is a theorem about disjoint convex sets in n-dimensional Euclidean space. There are several rather similar versions. In one version of the theorem, if both these sets are closed and at least one of them is compact, then there is a hyperplane in between them and even two parallel hyperplanes in between them separated by a gap. In another version, if both disjoint convex sets are open, then there is a hyperplane in between them, but not necessarily any gap. An axis which is orthogonal to a separating hyperplane is a separating axis, because the orthogonal projections of the convex bodies onto the axis are disjoint.

The hyperplane separation theorem is due to Hermann Minkowski. The Hahn–Banach separation theorem generalizes the result to topological vector spaces.

A related result is the supporting hyperplane theorem.

In the context of support-vector machines, the optimally separating hyperplane or maximum-margin hyperplane is a hyperplane which separates two convex hulls of points and is equidistant from the two.[1][2][3]

Statements and proof

Hyperplane separation theorem[4] — Let A and B be two disjoint nonempty convex subsets of Rn. Then there exist a nonzero vector v and a real number c such that

for all x in A and y in B; i.e., the hyperplane , v the normal vector, separates A and B.

The proof is based on the following lemma:

Lemma — Let be a nonempty closed convex subset of Rn. Then there exists a unique vector in of minimum norm (length).

Proof of lemma: Let Let be a sequence in such that . Note that is in since is convex and so . Since

as , is a Cauchy sequence and so has limit x in . It is unique since if y is in and has norm δ, then and x = y.

Proof of theorem: Given disjoint nonempty convex sets A, B, let

Since is convex and the sum of convex sets is convex, is convex. By the lemma, the closure of , which is convex, contains a vector of minimum norm. Since is convex, for any in , the line segment

lies in and so

- .

For , we thus have:

and letting gives: . Hence, for any x in A and y in B, we have: . Thus, if v is nonzero, the proof is complete since

More generally (covering the case v = 0), let us first take the case when the interior of is nonempty. The interior can be exhausted by a nested sequence of nonempty compact convex subsets . Since 0 is not in , each contains a nonzero vector of minimum length and by the argument in the early part, we have: for any . We can normalize the 's to have length one. Then the sequence contains a convergent subsequence (because the n-sphere is compact) with limit v, which is nonzero. We have for any x in the interior of and by continuity the same holds for all x in . We now finish the proof as before. Finally, if has empty interior, the affine set that it spans has dimension less than that of the whole space. Consequently is contained in some hyperplane ; thus, for all x in and we finish the proof as before.

The number of dimensions must be finite. In infinite-dimensional spaces there are examples of two closed, convex, disjoint sets which cannot be separated by a closed hyperplane (a hyperplane where a continuous linear functional equals some constant) even in the weak sense where the inequalities are not strict.[5]

The above proof also proves the first version of the theorem mentioned in the lede (to see it, note that in the proof is closed under the hypothesis of the theorem below.)

Separation theorem I — Let A and B be two disjoint nonempty closed convex sets, one of which is compact. Then there exist a nonzero vector v and real numbers such that

for all x in A and y in B.

Here, the compactness in the hypothesis cannot be relaxed; see an example in the next section. This version of the separation theorem does generalize to infinite-dimension; the generalization is more commonly known as the Hahn–Banach separation theorem.

We also have:

Separation theorem II — Let A and B be two disjoint nonempty convex sets. If A is open, then there exist a nonzero vector v and real number such that

for all x in A and y in B. If both sets are open, then there exist a nonzero vector v and real number such that

for all x in A and y in B.

This follows from the standard version since the separating hyperplane cannot intersect the interiors of the convex sets.

Converse of theorem

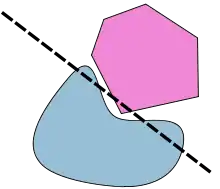

Note that the existence of a hyperplane that only "separates" two convex sets in the weak sense of both inequalities being non-strict obviously does not imply that the two sets are disjoint. Both sets could have points located on the hyperplane.

Counterexamples and uniqueness

If one of A or B is not convex, then there are many possible counterexamples. For example, A and B could be concentric circles. A more subtle counterexample is one in which A and B are both closed but neither one is compact. For example, if A is a closed half plane and B is bounded by one arm of a hyperbola, then there is no strictly separating hyperplane:

(Although, by an instance of the second theorem, there is a hyperplane that separates their interiors.) Another type of counterexample has A compact and B open. For example, A can be a closed square and B can be an open square that touches A.

In the first version of the theorem, evidently the separating hyperplane is never unique. In the second version, it may or may not be unique. Technically a separating axis is never unique because it can be translated; in the second version of the theorem, a separating axis can be unique up to translation.

Use in collision detection

The separating axis theorem (SAT) says that:

Two convex objects do not overlap if there exists a line (called axis) onto which the two objects' projections do not overlap.

SAT suggests an algorithm for testing whether two convex solids intersect or not.

Regardless of dimensionality, the separating axis is always a line. For example, in 3D, the space is separated by planes, but the separating axis is perpendicular to the separating plane.

The separating axis theorem can be applied for fast collision detection between polygon meshes. Each face's normal or other feature direction is used as a separating axis, as well as the cross products. Note that this yields possible separating axes, not separating lines/planes.

If the cross products were not used, certain edge-on-edge non-colliding cases would be treated as colliding. For increased efficiency, parallel axes may be calculated as a single axis.

Notes

- Hastie, Trevor; Tibshirani, Robert; Friedman, Jerome (2008). The Elements of Statistical Learning : Data Mining, Inference, and Prediction (PDF) (Second ed.). New York: Springer. pp. 129–135.

- Witten, Ian H.; Frank, Eibe; Hall, Mark A.; Pal, Christopher J. (2016). Data Mining: Practical Machine Learning Tools and Techniques (Fourth ed.). Morgan Kaufmann. pp. 253–254.

- Deisenroth, Marc Peter; Faisal, A. Aldo; Ong, Cheng Soon (2020). Mathematics for Machine Learning. Cambridge University Press. pp. 337–338. ISBN 978-1-108-45514-5.

- Boyd & Vandenberghe 2004, Exercise 2.22.

- Haïm Brezis, Analyse fonctionnelle : théorie et applications, 1983, remarque 4, p. 7.

References

- Boyd, Stephen P.; Vandenberghe, Lieven (2004). Convex Optimization (pdf). Cambridge University Press. ISBN 978-0-521-83378-3.

- Golshtein, E. G.; Tretyakov, N.V. (1996). Modified Lagrangians and monotone maps in optimization. New York: Wiley. p. 6. ISBN 0-471-54821-9.

- Shimizu, Kiyotaka; Ishizuka, Yo; Bard, Jonathan F. (1997). Nondifferentiable and two-level mathematical programming. Boston: Kluwer Academic Publishers. p. 19. ISBN 0-7923-9821-1.