LMS color space

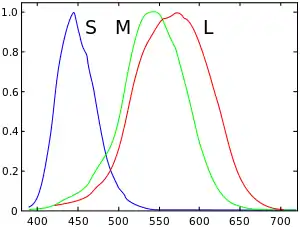

LMS (long, medium, short) is a color space that represents the response of the three types of cones of the human eye, named for their responsivity (sensitivity) peaks at long, medium, and short wavelengths.

The numerical range is generally not specified, except that the lower end is bounded by zero. The LMS color space is commonly used when performing chromatic adaptation (estimating the appearance of a sample under a different illuminant). It is also useful in the study of color blindness, where one or more cone types are defective.

XYZ to LMS

Typically, colors to be adapted chromatically will be specified in a color space other than LMS (e.g. sRGB). The chromatic adaptation matrix in the diagonal von Kries transform method, however, operates on tristimulus values in the LMS color space. Since colors in most color spaces can be transformed to the XYZ color space, only one additional transformation matrix is required for any color space to be adapted chromatically: one that transforms colors from XYZ to LMS. However, many color adaptation methods, or color appearance models (CAMs), use matrices to convert to spaces other than LMS (and sometimes refer to them as LMS, RGB or ργβ), and apply a von Kries-like diagonal matrix in that space.[2]
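In matrix form, the diagonal von Kries procedure described above can be written as follows. The notation is introduced here for illustration: $M_{\mathrm{CAT}}$ is whichever XYZ-to-LMS matrix is chosen, $(L_w, M_w, S_w)$ are the LMS coordinates of the source white, and primed symbols refer to the destination white.

$$\begin{bmatrix} X' \\ Y' \\ Z' \end{bmatrix} = M_{\mathrm{CAT}}^{-1} \begin{bmatrix} L'_w / L_w & 0 & 0 \\ 0 & M'_w / M_w & 0 \\ 0 & 0 & S'_w / S_w \end{bmatrix} M_{\mathrm{CAT}} \begin{bmatrix} X \\ Y \\ Z \end{bmatrix}$$

Because the middle matrix is diagonal, each cone signal is scaled independently, which is what makes the transform "von Kries"; changing $M_{\mathrm{CAT}}$ is all that distinguishes the variants below.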

The chromatic adaptation transform (CAT) matrices for some CAMs are presented here in terms of CIE XYZ coordinates. Together with the XYZ data defined for the standard observer, the matrices implicitly define a "cone" response for each cell type.

Notes:

- All tristimulus values are normally calculated using the CIE 1931 2° standard colorimetric observer.[2]

- Unless specified otherwise, the CAT matrices are normalized (the elements in a row add up to 1) so the tristimulus values for an equal-energy illuminant (X=Y=Z), like CIE Illuminant E, produce equal LMS values.[2]
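The normalization in the second note follows from one line of matrix arithmetic. Writing $m_{ij}$ for the entries of a CAT matrix and $X = Y = Z = k$ for an equal-energy stimulus (notation introduced here), rows that each sum to 1 give

$$\begin{bmatrix} L \\ M \\ S \end{bmatrix} = \begin{bmatrix} m_{11} & m_{12} & m_{13} \\ m_{21} & m_{22} & m_{23} \\ m_{31} & m_{32} & m_{33} \end{bmatrix} \begin{bmatrix} k \\ k \\ k \end{bmatrix} = k \begin{bmatrix} m_{11} + m_{12} + m_{13} \\ m_{21} + m_{22} + m_{23} \\ m_{31} + m_{32} + m_{33} \end{bmatrix} = \begin{bmatrix} k \\ k \\ k \end{bmatrix}$$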

Hunt, RLAB

The Hunt and RLAB color appearance models use the Hunt-Pointer-Estevez transformation matrix ($M_{\mathrm{HPE}}$) for conversion from CIE XYZ to LMS.[3][4][5] This is the transformation matrix originally used in conjunction with the von Kries transform method, and it is therefore also called the von Kries transformation matrix ($M_{\mathrm{vonKries}}$).

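Equal-energy illuminants:

$$M_{\mathrm{HPE}} = \begin{bmatrix} 0.38971 & 0.68898 & -0.07868 \\ -0.22981 & 1.18340 & 0.04641 \\ 0 & 0 & 1 \end{bmatrix}$$

Normalized[6] to D65:

$$M_{\mathrm{HPE,D65}} = \begin{bmatrix} 0.4002 & 0.7076 & -0.0808 \\ -0.2263 & 1.1653 & 0.0457 \\ 0 & 0 & 0.9182 \end{bmatrix}$$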
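A minimal sketch of the XYZ-to-LMS step with the Hunt-Pointer-Estevez matrices, checking the normalization described in the notes. The coefficients are the commonly published HPE values and the D65 white point (0.95047, 1, 1.08883) is an assumption not given in this text; the function name is hypothetical.

```python
# Hunt-Pointer-Estevez matrices as commonly published; verify against the
# cited sources before relying on them.
M_HPE_E = [  # normalized so that X = Y = Z gives L = M = S
    [0.38971, 0.68898, -0.07868],
    [-0.22981, 1.18340, 0.04641],
    [0.0, 0.0, 1.0],
]
M_HPE_D65 = [  # normalized so that the D65 white gives L = M = S
    [0.4002, 0.7076, -0.0808],
    [-0.2263, 1.1653, 0.0457],
    [0.0, 0.0, 0.9182],
]


def xyz_to_lms(xyz, m):
    """Multiply a 3x3 matrix by an XYZ column vector, returning (L, M, S)."""
    return tuple(sum(m[i][j] * xyz[j] for j in range(3)) for i in range(3))


# Assumed D65 white point with Y normalized to 1.
D65_XYZ = (0.95047, 1.0, 1.08883)

lms_e = xyz_to_lms((1.0, 1.0, 1.0), M_HPE_E)  # close to (1, 1, 1)
lms_d65 = xyz_to_lms(D65_XYZ, M_HPE_D65)      # close to (1, 1, 1)
```

The small residuals away from exactly 1 come from the four- and five-digit rounding of the published coefficients.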

CIECAM97s, LLAB

The original CIECAM97s color appearance model uses the Bradford transformation matrix ($M_{\mathrm{BFD}}$), as does the LLAB color appearance model.[2] This is a “spectrally sharpened” transformation matrix (i.e. the L and M cone response curves are narrower and more distinct from each other). The Bradford transformation matrix was intended to work in conjunction with a modified von Kries transform method that introduces a small non-linearity in the S (blue) channel. Outside of CIECAM97s and LLAB, however, this non-linearity is often neglected and the Bradford matrix is used with the linear von Kries transform method, explicitly so in ICC profiles.[7]
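A minimal sketch of the linear von Kries transform with the Bradford matrix, as in ICC workflows, adapting colors from D65 to D50. The $M_{\mathrm{BFD}}$ coefficients and the two white points are the widely published values rather than figures taken from this text, and all helper names are hypothetical.

```python
# Widely published Bradford coefficients (XYZ -> sharpened "LMS").
M_BFD = [
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
]


def mat_vec(m, v):
    """3x3 matrix times 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def mat_mul(a, b):
    """3x3 matrix product a @ b."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [
        [(e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det],
        [(f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det],
        [(d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det],
    ]


def von_kries_matrix(m_cat, src_white_xyz, dst_white_xyz):
    """Linear von Kries CAT: inv(M) @ diag(dst_lms / src_lms) @ M."""
    src = mat_vec(m_cat, src_white_xyz)
    dst = mat_vec(m_cat, dst_white_xyz)
    gain = [[dst[r] / src[r] if r == c else 0.0 for c in range(3)]
            for r in range(3)]
    return mat_mul(inv3(m_cat), mat_mul(gain, m_cat))


# Assumed white points with Y normalized to 1.
D65 = [0.95047, 1.0, 1.08883]
D50 = [0.96422, 1.0, 0.82521]

A = von_kries_matrix(M_BFD, D65, D50)
adapted_white = mat_vec(A, D65)  # lands on D50 up to floating-point error
```

The same helper accepts any of the matrices in this section; only `m_cat` changes, which is the point made in the XYZ to LMS discussion.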

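A revised version of CIECAM97s switches back to a linear transform method and introduces a corresponding transformation matrix ($M_{\mathrm{CAT97s}}$):[8]

$$M_{\mathrm{CAT97s}} = \begin{bmatrix} 0.8562 & 0.3372 & -0.1934 \\ -0.8360 & 1.8327 & 0.0033 \\ 0.0357 & -0.0469 & 1.0112 \end{bmatrix}$$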

Direct from spectra

From a physiological point of view, the LMS color space describes a more fundamental level of human visual response, so it makes more sense to define XYZ in terms of LMS rather than the other way around.

Stockman & Sharpe (2000)

A set of physiologically based LMS functions was measured and proposed by Stockman & Sharpe in 2000. The functions were published by the CIE in a technical report in 2006.[10]

Applications

Color blindness

The LMS color space can be used to emulate the way color-blind people see color. The technique was pioneered by Brettel et al. and is rated favorably by color-blind observers.[11]
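A minimal sketch of the idea for protanopia, in which the L response is missing: the lost L value is replaced by a linear prediction from M and S, chosen so that two reference stimuli look unchanged. This is a simplified single-plane variant, not the two-half-plane construction of Brettel et al.; the reference stimuli, the anchor values and the function names are illustrative assumptions.

```python
# Simplified dichromat emulation in LMS using one projection plane.
# The reference stimuli are caller-supplied assumptions (for example
# equal-energy white plus one anchor hue expressed in LMS).


def protanope_coefficients(white_lms, anchor_lms):
    """Find (a, b) so that L = a*M + b*S holds for both reference stimuli."""
    lw, mw, sw = white_lms
    la, ma, sa = anchor_lms
    det = mw * sa - sw * ma
    if abs(det) < 1e-12:
        raise ValueError("reference stimuli must be independent in the M-S plane")
    # Cramer's rule for [[mw, sw], [ma, sa]] @ [a, b] = [lw, la].
    a = (lw * sa - sw * la) / det
    b = (mw * la - lw * ma) / det
    return a, b


def simulate_protanopia(lms, a, b):
    """Replace the missing L response with the value predicted from M and S."""
    _, m, s = lms
    return (a * m + b * s, m, s)


# Illustrative values only: equal-energy white and a hypothetical anchor.
WHITE = (1.0, 1.0, 1.0)
ANCHOR = (0.9, 0.8, 0.05)
a, b = protanope_coefficients(WHITE, ANCHOR)
```

Two colors that differ only in their L response map to the same output, which is the confusion a protanope experiences; deuteranopia and tritanopia follow the same pattern with M or S replaced instead.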

A related application is designing color filters that help color-blind people notice differences in color more easily, a process known as daltonization.[12]

Image processing

JPEG XL uses an XYB color space derived from LMS, where X = (L - M)/2, Y = (L + M)/2, and B = S. This can be interpreted as a hybrid color theory in which L and M are opponents but S is handled in a trichromatic way, justified by the lower spatial density of S cones. In practical terms, this allows blue signals to be stored with less data without much loss of perceived quality.[13]
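A minimal sketch of the opponent step alone, following the convention of the libjxl reference implementation as we understand it: X is half the difference and Y half the sum of L and M, while B passes S through. The real codec first applies a bias and a cube-root nonlinearity to L, M and S; that step is omitted here, and the function names are hypothetical.

```python
def lms_to_xyb(l, m, s):
    """Opponent step only: X = (L - M)/2, Y = (L + M)/2, B = S."""
    x = (l - m) / 2  # red-green opponent signal
    y = (l + m) / 2  # luminance-like sum of L and M
    b = s            # S kept as its own channel
    return x, y, b


def xyb_to_lms(x, y, b):
    """Exact inverse of lms_to_xyb."""
    return y + x, y - x, b


x, y, b = lms_to_xyb(0.75, 0.25, 0.5)
```

Keeping B separate rather than folding S into an opponent pair is what lets an encoder quantize that channel more coarsely.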

References

- http://www.cvrl.org/database/text/cones/smj2.htm

- Fairchild, Mark D. (2005). Color Appearance Models (2E ed.). Wiley Interscience. pp. 182–183, 227–230. ISBN 978-0-470-01216-1.

- Schanda, János, ed. (2007-07-27). Colorimetry. p. 305. doi:10.1002/9780470175637. ISBN 9780470175637.

- Moroney, Nathan; Fairchild, Mark D.; Hunt, Robert W.G.; Li, Changjun; Luo, M. Ronnier; Newman, Todd (November 12, 2002). "The CIECAM02 Color Appearance Model". IS&T/SID Tenth Color Imaging Conference. Scottsdale, Arizona: The Society for Imaging Science and Technology. ISBN 0-89208-241-0.

- Ebner, Fritz (1998-07-01). "Derivation and modelling hue uniformity and development of the IPT color space". Theses: 129.

- "Welcome to Bruce Lindbloom's Web Site". brucelindbloom.com. Retrieved 23 March 2020.

- Specification ICC.1:2010 (Profile version 4.3.0.0). Image technology colour management — Architecture, profile format, and data structure, Annex E.3, p. 102.

- Fairchild, Mark D. (2001). "A Revision of CIECAM97s for Practical Applications" (PDF). Color Research & Application. Wiley Interscience. 26 (6): 418–427. doi:10.1002/col.1061.

- Fairchild, Mark. "Errata for COLOR APPEARANCE MODELS" (PDF). The published $M_{\mathrm{CAT02}}$ matrix in Eq. 9.40 is incorrect (it is a version of the Hunt-Pointer-Estevez matrix); the correct $M_{\mathrm{CAT02}}$ matrix is given in Eq. 16.2.

- "CIE functions". cvrl.ucl.ac.uk.

- "Color Vision Deficiency Emulation". colorspace.r-forge.r-project.org.

- Simon-Liedtke, Joschua Thomas; Farup, Ivar (February 2016). "Evaluating color vision deficiency daltonization methods using a behavioral visual-search method". Journal of Visual Communication and Image Representation. 35: 236–247. doi:10.1016/j.jvcir.2015.12.014. hdl:11250/2461824.

- Alakuijala, Jyrki; van Asseldonk, Ruud; Boukortt, Sami; Bruse, Martin; Comsa, Iulia-Maria; Firsching, Moritz; Fischbacher, Thomas; Kliuchnikov, Evgenii; Gomez, Sebastian; Obryk, Robert; Potempa, Krzysztof; Rhatushnyak, Alexander; Sneyers, Jon; Szabadka, Zoltan; Vandervenne, Lode; Versari, Luca; Wassenberg, Jan (6 September 2019). Tescher, Andrew G; Ebrahimi, Touradj (eds.). "JPEG XL next-generation image compression architecture and coding tools". Applications of Digital Image Processing XLII: 20. doi:10.1117/12.2529237. ISBN 9781510629677.