Signaling game

In game theory, a signaling game is a simple type of dynamic Bayesian game.[1]

The essence of a signalling game is that one player takes an action, the signal, to convey information to another player, where sending the signal is more costly if they are conveying false information. A manufacturer, for example, might provide a warranty for its product in order to signal to consumers that its product is unlikely to break down. The classic example is of a worker who acquires a college degree not because it increases their skill, but because it conveys their ability to employers.

A simple signalling game would have two players, the sender and the receiver. The sender has one of two types that we might call "desirable" and "undesirable" with different payoff functions, where the receiver knows the probability of each type but not which one this particular sender has. The receiver has just one possible type.

The sender moves first, choosing an action called the "signal" or "message" (though the term "message" is more often used in non-signalling "cheap talk" games where sending messages is costless). The receiver moves second, after observing the signal.

The two players receive payoffs dependent on the sender's type, the message chosen by the sender and the action chosen by the receiver.[2][3]

The tension in the game is that the sender wants to persuade the receiver that they have the desirable type, and they will try to choose a signal to do that. Whether this succeeds depends on whether the undesirable type would send the same signal, and how the receiver interprets the signal.

Perfect Bayesian equilibrium

The equilibrium concept that is relevant for signaling games is perfect Bayesian equilibrium, a refinement of Bayesian Nash equilibrium.

Nature chooses the sender to have type $t$ with probability $p(t)$. The sender then chooses the probability with which to take signalling action $s$, which we can write as $\Pr(s \mid t)$ for each possible $t$. The receiver observes the signal $s$ but not $t$, and chooses the probability with which to take response action $r$, which we can write as $\Pr(r \mid s)$ for each possible $s$. The sender's payoff is $u(t, s, r)$ and the receiver's is $v(t, s, r)$.

A perfect Bayesian equilibrium is a combination of beliefs and strategies for each player. Both players believe that the other will follow the strategies specified in the equilibrium, as in simple Nash equilibrium, unless they observe something that has probability zero in the equilibrium. The receiver's beliefs also include a probability distribution $\mu(t \mid s)$ representing the probability put on the sender having type $t$ if the receiver observes signal $s$. The receiver's strategy is a choice of $\Pr(r \mid s)$. The sender's strategy is a choice of $\Pr(s \mid t)$. These beliefs and strategies must satisfy certain conditions:

- Sequential rationality: each strategy should maximize a player's expected utility, given his beliefs.

- Consistency: each belief should be updated according to the equilibrium strategies, the observed actions, and Bayes' rule on every path reached in equilibrium with positive probability. On paths of zero probability, known as off-equilibrium paths, the beliefs must be specified but can be arbitrary.

The kinds of perfect Bayesian equilibria that may arise can be divided into three categories: pooling equilibria, separating equilibria and semi-separating equilibria. A given game may or may not have more than one equilibrium.

- In a pooling equilibrium, senders of different types all choose the same signal. This means that the signal does not give any information to the receiver, so the receiver's beliefs are not updated after seeing the signal.

- In a separating equilibrium, senders of different types always choose different signals. This means that the signal always reveals the sender's type, so the receiver's beliefs become deterministic after seeing the signal.

- In a semi-separating equilibrium (also called partial-pooling), some types of senders choose the same message and other types choose different messages.

Note that, if there are more types of senders than there are messages, the equilibrium can never be a separating equilibrium (but may be semi-separating). There are also hybrid equilibria, in which the sender randomizes between pooling and separating.
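The consistency condition and the pooling/separating distinction can be illustrated with a short Python sketch. It is only an illustration under stated assumptions: the function name `posterior_beliefs`, the type labels, the signal labels "a" and "b", and the prior of 0.25 are hypothetical choices, not part of any standard library or of the sources cited here.

```python
def posterior_beliefs(prior, sender_strategy):
    """Apply Bayes' rule to get the receiver's belief after each signal.

    prior: dict mapping type -> prior probability (hypothetical labels).
    sender_strategy: dict mapping type -> {signal: probability of sending it}.
    Returns a dict mapping signal -> {type: posterior probability}, or None
    for a signal sent with probability zero (an off-equilibrium path, where
    Bayes' rule does not pin the belief down).
    """
    signals = {s for dist in sender_strategy.values() for s in dist}
    beliefs = {}
    for s in sorted(signals):
        # Joint probability of (type, signal) under the sender's strategy.
        joint = {t: prior[t] * sender_strategy[t].get(s, 0.0) for t in prior}
        total = sum(joint.values())
        if total > 0:
            beliefs[s] = {t: joint[t] / total for t in prior}
        else:
            beliefs[s] = None  # must be specified separately in a PBE
    return beliefs


prior = {"desirable": 0.25, "undesirable": 0.75}

# Pooling: both types send "a", so observing "a" leaves the prior unchanged.
pooling = {"desirable": {"a": 1.0, "b": 0.0},
           "undesirable": {"a": 1.0, "b": 0.0}}

# Separating: each type sends a different signal, so beliefs become degenerate.
separating = {"desirable": {"a": 1.0, "b": 0.0},
              "undesirable": {"a": 0.0, "b": 1.0}}

print(posterior_beliefs(prior, pooling))
print(posterior_beliefs(prior, separating))
```

In the pooling case the unused signal "b" maps to `None`, which mirrors the statement that off-equilibrium beliefs must be specified but are not determined by Bayes' rule.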

Examples

Reputation game

| Sender / Receiver | Stay | Exit |
|---|---|---|
| Sane, Prey | P1+P1, D2 | P1+M1, 0 |
| Sane, Accommodate | D1+D1, D2 | D1+M1, 0 |
| Crazy, Prey | X1, P2 | X1, 0 |

In this game,[1]:326–329[4] the sender and the receiver are firms. The sender is an incumbent firm and the receiver is an entrant firm.

- The sender can be one of two types: Sane or Crazy. A sane sender can send one of two messages: Prey and Accommodate. A crazy sender can only Prey.

- The receiver can do one of two actions: Stay or Exit.

The payoffs are given by the table above. We assume that:

- M1>D1>P1, i.e., a sane sender prefers to be a monopoly (M1), but if it is not a monopoly, it prefers accommodating (D1) to preying (P1). Note that the value of X1 is irrelevant since a crazy firm has only one possible action.

- D2>0>P2, i.e., the receiver prefers staying in a market with a sane competitor (D2) to exiting the market (0), but prefers exiting to staying in a market with a crazy competitor (P2).

- A priori, the sender has probability p of being sane and 1-p of being crazy.

We now look for perfect Bayesian equilibria. It is convenient to differentiate between separating equilibria and pooling equilibria.

- A separating equilibrium, in our case, is one in which the sane sender always accommodates. This separates it from a crazy sender. In the second period, the receiver has complete information: their beliefs are "If Accommodate then the sender is sane, otherwise the sender is crazy". Their best response is: "If Accommodate then Stay, if Prey then Exit". The payoff of the sender when they accommodate is D1+D1, but if they deviate to Prey their payoff changes to P1+M1; therefore, a necessary condition for a separating equilibrium is D1+D1≥P1+M1 (i.e., the cost of preying outweighs the gain from being a monopoly). It is possible to show that this condition is also sufficient.

- A pooling equilibrium is one in which the sane sender always preys. In the second period, the receiver has no new information: if the sender preys, the receiver's beliefs must equal the a priori beliefs, namely that the sender is sane with probability p and crazy with probability 1-p. Therefore, the receiver's expected payoff from staying is p D2 + (1-p) P2, and the receiver stays if and only if this expression is positive. The sender can gain from preying only if the receiver exits. Therefore, a necessary condition for a pooling equilibrium is p D2 + (1-p) P2 ≤ 0 (intuitively, the receiver is careful and will not stay in the market if there is a risk that the sender is crazy; the sender knows this, and thus hides their true identity by always preying like a crazy type). But this condition is not sufficient: if the receiver also exits after Accommodate, then it is better for the sender to accommodate, since this is cheaper than preying. So it is necessary that the receiver stays after Accommodate, and that D1+D1<P1+M1 (i.e., the gain from being a monopoly outweighs the cost of preying). Finally, we must make sure that staying after Accommodate is a best response for the receiver. For this, we must specify the receiver's beliefs after Accommodate. This path has probability 0, so Bayes' rule does not apply, and we are free to choose the receiver's beliefs as, e.g., "If Accommodate then the sender is sane".

To summarize:

- If preying is costly for a sane sender (D1+D1≥P1+M1), they will accommodate and there will be a unique separating PBE: the receiver will stay after Accommodate and exit after Prey.

- If preying is not too costly for a sane sender (D1+D1<P1+M1), and it is harmful for the receiver (p D2 + (1-p) P2 ≤ 0), the sender will prey and there will be a unique pooling PBE: again the receiver will stay after Accommodate and exit after Prey. Here, the sender is willing to lose some value by preying in the first period, in order to build a reputation as a predatory firm and convince the receiver to exit.

- If preying is neither too costly for the sender nor harmful for the receiver (D1+D1<P1+M1 and p D2 + (1-p) P2 > 0), there will not be a PBE in pure strategies. There will be a unique PBE in mixed strategies, in which both the sender and the receiver randomize between their two actions.
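The three cases in this summary can be written as a small Python sketch that takes the payoff parameters and reports which kind of PBE the conditions above point to. The function name `classify_reputation_pbe` and the numbers in the usage lines are hypothetical and chosen only for illustration; the sketch encodes the stated inequalities and does not compute the mixed-strategy probabilities.

```python
def classify_reputation_pbe(M1, D1, P1, D2, P2, p):
    """Classify the reputation game by the conditions in the summary.

    M1, D1, P1: sane sender's per-period monopoly, duopoly and preying payoffs.
    D2, P2: receiver's payoff from staying against a sane / crazy sender.
    p: prior probability that the sender is sane.
    """
    if not (M1 > D1 > P1):
        raise ValueError("assumption M1 > D1 > P1 violated")
    if not (D2 > 0 > P2):
        raise ValueError("assumption D2 > 0 > P2 violated")
    if not (0 <= p <= 1):
        raise ValueError("p must be a probability")

    # Sane sender compares accommodating twice with preying, then monopoly.
    preying_is_costly = D1 + D1 >= P1 + M1
    # Receiver's expected payoff from staying when Prey reveals nothing.
    stay_payoff_after_prey = p * D2 + (1 - p) * P2

    if preying_is_costly:
        return "separating"
    if stay_payoff_after_prey <= 0:
        return "pooling"
    return "mixed"


# Hypothetical numbers, for illustration only.
print(classify_reputation_pbe(M1=10, D1=6, P1=1, D2=3, P2=-5, p=0.5))
print(classify_reputation_pbe(M1=10, D1=4, P1=1, D2=3, P2=-5, p=0.5))
print(classify_reputation_pbe(M1=10, D1=4, P1=1, D2=3, P2=-5, p=0.9))
```

With these illustrative values, the first call satisfies D1+D1≥P1+M1, the second violates it while making staying unprofitable in expectation, and the third violates it while making staying profitable, so the three calls fall into the three cases in turn.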

Education game

Michael Spence's 1973 paper on education as a signal of ability is the start of the economic analysis of signalling.[5][1]:329–331 In this game, the senders are workers and the receivers are employers. The example below has two types of workers and a continuous signal level.[6]

The players are a worker and two firms. The worker chooses an education level $s$, the signal, after which the firms simultaneously offer him wages $w_1$ and $w_2$, and he accepts one or the other. The worker's type, known only to himself, is either high ability with $a = 10$ or low ability with $a = 0$, each type having probability 1/2. The high-ability worker's payoff is $U_H = w - s$ and the low-ability worker's is $U_L = w - 2s$. A firm that hires the worker at wage $w$ has payoff $a - w$ and the other firm has payoff 0.

In this game, competition between the firms drives the wage to the worker's expected ability, so if no signal is possible, the result would be $w_1 = w_2 = 0.5(10) + 0.5(0) = 5$. This will also be the wage in a pooling equilibrium, one where both types of worker choose the same signal, so the firms are left using their prior belief of 0.5 for the probability that he has high ability. In a separating equilibrium, the wage will be 0 for the signal level the low type chooses and 10 for the high type's signal. There are many equilibria, both pooling and separating, depending on expectations.

In a separating equilibrium, the low type chooses $s = 0$. The wages will be $w(s = 0) = 0$ and $w(s = s^*) = 10$ for some critical level $s^*$ that signals high ability. For the low type to choose $s = 0$ requires that $U_L(s = 0) \geq U_L(s = s^*)$, so $0 \geq 10 - 2s^*$ and we can conclude that $s^* \geq 5$. For the high type to choose $s = s^*$ requires that $U_H(s = s^*) \geq U_H(s = 0)$, so $10 - s^* \geq 0$ and we can conclude that $s^* \leq 10$. Thus, any value of $s^*$ between 5 and 10 can support an equilibrium. Perfect Bayesian equilibrium requires an out-of-equilibrium belief to be specified too, for all the other possible levels of $s$ besides 0 and $s^*$, levels which are "impossible" in equilibrium since neither type plays them. These beliefs must be such that neither type would want to deviate from his equilibrium strategy, 0 or $s^*$, to a different $s$. A convenient belief is that $\Pr(a = \text{High}) = 0$ if $s \neq s^*$; another, more realistic, belief that would support an equilibrium is $\Pr(a = \text{High}) = 0$ if $s < s^*$ and $\Pr(a = \text{High}) = 1$ if $s \geq s^*$. There is a continuum of equilibria, one for each possible level of $s^*$. One equilibrium, for example, is $s \mid \text{Low} = 0$, $s \mid \text{High} = 7$, $w(s = 7) = 10$, $w(s \neq 7) = 0$, $\Pr(a = \text{High} \mid s = 7) = 1$, $\Pr(a = \text{High} \mid s \neq 7) = 0$.

In a pooling equilibrium, both types choose the same $s$. One pooling equilibrium is for both types to choose $s = 0$, no education, with the out-of-equilibrium belief $\Pr(a = \text{High} \mid s > 0) = 0.5$. In that case, the wage will be the expected ability of 5, and neither type of worker will deviate to a higher education level because the firms would not think that told them anything about the worker's type.

The most surprising result is that there are also pooling equilibria with $s = s' > 0$. Suppose we specify the out-of-equilibrium belief to be $\Pr(a = \text{High} \mid s < s') = 0$. Then the wage will be 5 for a worker with $s = s'$, but 0 for a worker with $s = 0$. The low type compares the payoffs $U_L(s = s') = 5 - 2s'$ and $U_L(s = 0) = 0$, and if $s' \leq 2.5$ he is willing to follow his equilibrium strategy of $s = s'$. The high type will choose $s = s'$ a fortiori. Thus, there is another continuum of equilibria, with values of $s'$ in [0, 2.5].
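The incentive checks behind these ranges can be reproduced with a brief Python sketch. It assumes abilities of 10 and 0, a high-type payoff of wage minus education, and a low-type payoff of wage minus twice the education level; these values are consistent with the thresholds 5, 10 and 2.5 quoted in the text. The function names are hypothetical, and in both checks any deviation is assumed to be read as low ability, so the best deviation is to no education at a wage of 0.

```python
HIGH_ABILITY = 10.0
LOW_ABILITY = 0.0
POOLED_WAGE = 0.5 * HIGH_ABILITY + 0.5 * LOW_ABILITY  # expected ability


def u_high(wage, s):
    """High-ability worker's payoff (assumed form: wage minus education)."""
    return wage - s


def u_low(wage, s):
    """Low-ability worker's payoff (assumed form: education costs double)."""
    return wage - 2 * s


def supports_separating(s_star):
    """Low type picks 0 (wage 0); high type picks s_star (wage 10)."""
    low_stays = u_low(LOW_ABILITY, 0) >= u_low(HIGH_ABILITY, s_star)
    high_stays = u_high(HIGH_ABILITY, s_star) >= u_high(LOW_ABILITY, 0)
    return low_stays and high_stays


def supports_pooling(s_prime):
    """Both types pick s_prime (wage 5); deviating to 0 earns wage 0."""
    low_stays = u_low(POOLED_WAGE, s_prime) >= u_low(LOW_ABILITY, 0)
    high_stays = u_high(POOLED_WAGE, s_prime) >= u_high(LOW_ABILITY, 0)
    return low_stays and high_stays


for level in (4, 5, 7, 10, 11):
    print("separating at", level, supports_separating(level))
for level in (0, 2.5, 3):
    print("pooling at", level, supports_pooling(level))
```

The binding constraint in the separating case is the low type's at the bottom of the range and the high type's at the top, while in the pooling case only the low type's constraint binds, which is why the high type follows "a fortiori".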

In the signalling model of education, expectations are crucial. If, as in the separating equilibrium, employers expect that high-ability people will acquire a certain level of education and low-ability ones will not, we get the main insight: that if people cannot communicate their ability directly, they will acquire education even if it does not increase productivity, just to demonstrate ability. Or, in the pooling equilibrium with no education, if employers do not think education signals anything, we can get the outcome that nobody becomes educated. Or, in the pooling equilibrium with a positive level of education, everyone acquires education that is completely useless, not even showing who has high ability, out of fear that if they deviate and do not acquire education, employers will think they have low ability.[7]

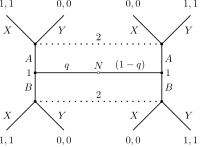

Beer-Quiche game

The Beer-Quiche game of Cho and Kreps[8] draws on the stereotype of quiche eaters being less masculine. In this game, an individual B is considering whether to duel with another individual A. B knows that A is either a wimp or surly, but not which. B would prefer a duel if A is a wimp but not if A is surly. Player A, regardless of type, wants to avoid a duel. Before making the decision, B has the opportunity to see whether A chooses to have beer or quiche for breakfast. Both players know that wimps prefer quiche while surlies prefer beer. The point of the game is to analyze the choice of breakfast by each kind of A. This has become a standard example of a signaling game. For more details, see [9]:14–18.

Applications of signaling games

Signaling games describe situations where one player has information the other player does not have. These situations of asymmetric information are very common in economics and behavioral biology.

Philosophy

The first signaling game was the Lewis signaling game, which appeared in David K. Lewis's Ph.D. dissertation (and later book) Convention.[10] Replying to W.V.O. Quine,[11][12] Lewis attempts to develop a theory of convention and meaning using signaling games. In his most extreme comments, he suggests that understanding the equilibrium properties of the appropriate signaling game captures all there is to know about meaning:

- I have now described the character of a case of signaling without mentioning the meaning of the signals: that two lanterns meant that the redcoats were coming by sea, or whatever. But nothing important seems to have been left unsaid, so what has been said must somehow imply that the signals have their meanings.[13]

The use of signaling games has been continued in the philosophical literature. Others have used evolutionary models of signaling games to describe the emergence of language. Work on the emergence of language in simple signaling games includes models by Huttegger,[14] Grim et al.,[15] Skyrms,[16][17] and Zollman.[18] Harms[19][20] and Huttegger[21] have attempted to extend the study to include the distinction between normative and descriptive language.

Economics

The first application of signaling games to economic problems was Michael Spence's Education game. A second application was the Reputation game.

Biology

Valuable advances have been made by applying signaling games to a number of biological questions. The most notable is Alan Grafen's (1990) handicap model of mate-attraction displays.[22] The antlers of stags, the elaborate plumage of peacocks and birds-of-paradise, and the song of the nightingale are all such signals. Grafen's analysis of biological signaling is formally similar to the classic monograph on economic market signaling by Michael Spence.[23] More recently, a series of papers by Getty[24][25][26][27] shows that Grafen's analysis, like that of Spence, is based on the critical simplifying assumption that signalers trade off costs for benefits in an additive fashion, the way humans invest money to increase income in the same currency. This assumption that costs and benefits trade off in an additive fashion might be valid for some biological signaling systems, but is not valid for multiplicative tradeoffs, such as the survival cost – reproduction benefit tradeoff that is assumed to mediate the evolution of sexually selected signals.

Charles Godfray (1991) modeled the begging behavior of nestling birds as a signaling game.[28] The nestlings' begging not only informs the parents that the nestling is hungry, but also attracts predators to the nest. The parents and nestlings are in conflict: the nestlings benefit if the parents work harder to feed them than the level of investment that is ultimately best for the parents. The parents are trading off investment in the current nestlings against investment in future offspring.

Pursuit deterrent signals have been modeled as signaling games.[29] Thomson's gazelles are known sometimes to perform a 'stot', a jump into the air of several feet with the white tail showing, when they detect a predator. Alcock and others have suggested that this action signals the gazelle's speed to the predator. The action successfully distinguishes types because it would be impossible or too costly for a sick animal to perform; hence the predator is deterred from chasing a stotting gazelle, which is evidently very agile and would prove hard to catch.

The concept of information asymmetry in molecular biology has long been apparent.[30] Although molecules are not rational agents, simulations have shown that through replication, selection, and genetic drift, molecules can behave according to signaling game dynamics. Such models have been proposed to explain, for example, the emergence of the genetic code from an RNA and amino acid world.[31]

Costly versus cost-free signaling

One of the major uses of signaling games both in economics and biology has been to determine under what conditions honest signaling can be an equilibrium of the game. That is, under what conditions can we expect rational people or animals subject to natural selection to reveal information about their types?

If both parties have coinciding interests, that is, they both prefer the same outcomes in all situations, then honesty is an equilibrium. (In most of these cases, however, non-communicative equilibria exist as well.) If the parties' interests do not perfectly overlap, then the maintenance of informative signaling systems raises an important problem.

Consider a circumstance described by John Maynard Smith regarding transfer between related individuals. Suppose a signaler can be either starving or just hungry, and they can signal that fact to another individual who has food. Suppose that they would like more food regardless of their state, but that the individual with food only wants to give them the food if they are starving. While both players have identical interests when the signaler is starving, they have opposing interests when the signaler is only hungry. When they are only hungry, they have an incentive to lie about their need in order to obtain the food. And if the signaler regularly lies, then the receiver should ignore the signal and do whatever they think is best.

Determining how signaling is stable in these situations has concerned both economists and biologists, and both have independently suggested that signal cost might play a role. If sending one signal is costly, it might only be worth the cost for the starving person to signal. The analysis of when costs are necessary to sustain honesty has been a significant area of research in both these fields.
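The idea that a signal cost can make honesty worthwhile only for the needier signaler can be sketched as a toy Python check. This is a deliberately simplified illustration, not a model from the cited literature: it assumes the receiver hands over the food exactly when the signal is sent, and the function name and the parameters `benefit_starving`, `benefit_hungry` and `signal_cost` are hypothetical.

```python
def honest_signaling_is_stable(benefit_starving, benefit_hungry, signal_cost):
    """Toy check of whether only the starving type wants to signal.

    Assumes (hypothetically) that signaling always yields the food and
    that not signaling yields nothing.
    """
    # The starving type signals only if the food is worth at least the cost.
    starving_signals = benefit_starving - signal_cost >= 0
    # The hungry type stays silent only if lying does not pay.
    hungry_stays_silent = benefit_hungry - signal_cost <= 0
    return starving_signals and hungry_stays_silent


# Hypothetical values: food is worth 10 to a starving signaler, 4 to a hungry one.
print(honest_signaling_is_stable(10, 4, signal_cost=0))   # free signal
print(honest_signaling_is_stable(10, 4, signal_cost=6))   # intermediate cost
print(honest_signaling_is_stable(10, 4, signal_cost=12))  # prohibitive cost
```

In this toy setting honesty survives only for costs between the two benefits: a free signal invites the merely hungry signaler to lie, and a prohibitive cost silences the starving one as well.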

See also

- Cheap talk

- Extensive form game

- Incomplete information

- Intuitive criterion and Divine equilibrium – refinements of PBE in signaling games.

- Screening game – a related kind of game where the uninformed player, the receiver, rather than choosing an action based on a signal, moves first and gives the informed player, the sender, proposals based on the type of the sender. The sender selects one of these proposals.

- Signalling (economics)

- Signalling theory

References

- Subsection 8.2.2 in Fudenberg & Tirole 1991, pp. 326–331.

- Gibbons, Robert (1992). A Primer in Game Theory. New York: Harvester Wheatsheaf. ISBN 978-0-7450-1159-2.

- Osborne, M. J. & Rubinstein, A. (1994). A Course in Game Theory. Cambridge: MIT Press. ISBN 978-0-262-65040-3.

- This is a simplified version of a reputation model suggested in 1982 by Kreps, Wilson, Milgrom and Roberts.

- Spence, A. M. (1973). "Job Market Signaling". Quarterly Journal of Economics. 87 (3): 355–374. doi:10.2307/1882010. JSTOR 1882010.

- This is a simplified version of the model in Johannes Horner, "Signalling and Screening," The New Palgrave Dictionary of Economics, 2nd edition, 2008, edited by Steven N. Durlauf and Lawrence E. Blume, http://najecon.com/econ504/signallingb.pdf.

- For a survey of empirical evidence on how important signalling is in education see Andrew Weiss. 1995. "Human Capital vs. Signalling Explanations of Wages." Journal of Economic Perspectives, 9 (4): 133-154. DOI: 10.1257/jep.9.4.133.

- Cho, In-Koo; Kreps, David M. (May 1987). "Signaling Games and Stable Equilibria". The Quarterly Journal of Economics. 102 (2): 179–222. CiteSeerX 10.1.1.407.5013. doi:10.2307/1885060. JSTOR 1885060.

- James Peck. "Perfect Bayesian Equilibrium" (PDF). Ohio State University. Retrieved 2 September 2016.

- Lewis, D. (1969). Convention. A Philosophical Study. Cambridge: Harvard University Press.

- Quine, W. V. O. (1936). "Truth by Convention". Philosophical Essays for Alfred North Whitehead. London: Longmans, Green & Co. pp. 90–124. ISBN 978-0-8462-0970-6. (Reprinting)

- Quine, W. V. O. (1960). "Carnap and Logical Truth". Synthese. 12 (4): 350–374. doi:10.1007/BF00485423.

- Lewis (1969), p. 124.

- Huttegger, S. M. (2007). "Evolution and the Explanation of Meaning". Philosophy of Science. 74 (1): 1–24. doi:10.1086/519477.

- Grim, P.; Kokalis, T.; Alai-Tafti, A.; Kilb, N.; St. Denis, Paul (2001). "Making Meaning Happen". Technical Report #01-02. Stony Brook: Group for Logic and Formal Semantics SUNY, Stony Brook.

- Skyrms, B. (1996). Evolution of the Social Contract. Cambridge: Cambridge University Press. ISBN 978-0-521-55471-8.

- Skyrms, B. (2010). Signals Evolution, Learning & Information. New York: Oxford University Press. ISBN 978-0-19-958082-8.

- Zollman, K. J. S. (2005). "Talking to Neighbors: The Evolution of Regional Meaning". Philosophy of Science. 72 (1): 69–85. doi:10.1086/428390.

- Harms, W. F. (2000). "Adaption and Moral Realism". Biology and Philosophy. 15 (5): 699–712. doi:10.1023/A:1006661726993.

- Harms, W. F. (2004). Information and Meaning in Evolutionary Processes. Cambridge: Cambridge University Press. ISBN 978-0-521-81514-7.

- Huttegger, S. M. (2005). "Evolutionary Explanations of Indicatives and Imperatives". Erkenntnis. 66 (3): 409–436. doi:10.1007/s10670-006-9022-1.

- Grafen, A. (1990). "Biological signals as handicaps". Journal of Theoretical Biology. 144 (4): 517–546. doi:10.1016/S0022-5193(05)80088-8. PMID 2402153.

- Spence, A. M. (1974). Market Signaling: Information Transfer in Hiring and Related Processes. Cambridge: Harvard University Press.

- Getty, T. (1998). "Handicap signalling: when fecundity and viability do not add up". Animal Behaviour. 56 (1): 127–130. doi:10.1006/anbe.1998.0744. PMID 9710469.

- Getty, T. (1998). "Reliable signalling need not be a handicap". Animal Behaviour. 56 (1): 253–255. doi:10.1006/anbe.1998.0748. PMID 9710484.

- Getty, T. (2002). "Signaling health versus parasites". The American Naturalist. 159 (4): 363–371. doi:10.1086/338992. PMID 18707421.

- Getty, T. (2006). "Sexually selected signals are not similar to sports handicaps". Trends in Ecology & Evolution. 21 (2): 83–88. doi:10.1016/j.tree.2005.10.016. PMID 16701479.

- Godfray, H. C. J. (1991). "Signalling of need by offspring to their parents". Nature. 352 (6333): 328–330. doi:10.1038/352328a0.

- Yachi, S. (1995). "How can honest signalling evolve? The role of the handicap principle". Proceedings of the Royal Society of London B. 262 (1365): 283–288. doi:10.1098/rspb.1995.0207.

- Maynard Smith, J. (2000). "The Concept of Information in Biology". Philosophy of Science. 67 (2): 177–194.

- Jee, J.; Sundstrom, A.; Massey, S.E.; Mishra, B. (2013). "What can information-asymmetric games tell us about the context of Crick's 'Frozen Accident'?". Journal of the Royal Society Interface. 10 (88): 20130614. doi:10.1098/rsif.2013.0614. PMC 3785830. PMID 23985735.