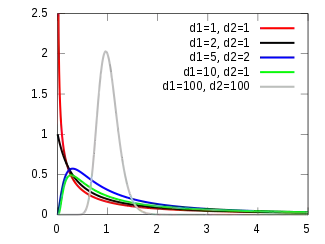

F-distribution

In probability theory and statistics, the F-distribution, also known as Snedecor's F distribution or the Fisher–Snedecor distribution (after Ronald Fisher and George W. Snedecor), is a continuous probability distribution that arises frequently as the null distribution of a test statistic, most notably in the analysis of variance (ANOVA) and other F-tests.[2][3][4][5]

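*Figure: probability density function*

*Figure: cumulative distribution function*

| Property | Value |
|---|---|
| Parameters | $d_1, d_2 > 0$ degrees of freedom |
| Support | $x \in (0, +\infty)$ if $d_1 = 1$, otherwise $x \in [0, +\infty)$ |
| CDF | $I_{\frac{d_1 x}{d_1 x + d_2}}\left(\frac{d_1}{2}, \frac{d_2}{2}\right)$ |
| Mean | $\frac{d_2}{d_2 - 2}$ for $d_2 > 2$ |
| Mode | $\frac{d_1 - 2}{d_1} \cdot \frac{d_2}{d_2 + 2}$ for $d_1 > 2$ |
| Variance | $\frac{2 d_2^2 (d_1 + d_2 - 2)}{d_1 (d_2 - 2)^2 (d_2 - 4)}$ for $d_2 > 4$ |
| Skewness | $\frac{(2 d_1 + d_2 - 2)\sqrt{8 (d_2 - 4)}}{(d_2 - 6)\sqrt{d_1 (d_1 + d_2 - 2)}}$ for $d_2 > 6$ |
| Ex. kurtosis | see text |
| Entropy | $\ln\Gamma\left(\frac{d_1}{2}\right) + \ln\Gamma\left(\frac{d_2}{2}\right) - \ln\Gamma\left(\frac{d_1 + d_2}{2}\right) + \left(1 - \frac{d_1}{2}\right)\psi\left(\frac{d_1}{2}\right) - \left(1 + \frac{d_2}{2}\right)\psi\left(\frac{d_2}{2}\right) + \frac{d_1 + d_2}{2}\,\psi\left(\frac{d_1 + d_2}{2}\right) + \ln\frac{d_2}{d_1}$ [1] |
| MGF | does not exist; raw moments are defined in the text and in [2][3] |
| CF | see text |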

Definition

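If a random variable X has an F-distribution with parameters d1 and d2, we write X ~ F(d1, d2). Then the probability density function (pdf) for X is given by

$$f(x; d_1, d_2) = \frac{\sqrt{\dfrac{(d_1 x)^{d_1}\, d_2^{d_2}}{(d_1 x + d_2)^{d_1 + d_2}}}}{x\, \mathrm{B}\!\left(\frac{d_1}{2}, \frac{d_2}{2}\right)} = \frac{1}{\mathrm{B}\!\left(\frac{d_1}{2}, \frac{d_2}{2}\right)} \left(\frac{d_1}{d_2}\right)^{d_1/2} x^{d_1/2 - 1} \left(1 + \frac{d_1}{d_2}\, x\right)^{-(d_1 + d_2)/2}$$

for real x > 0. Here $\mathrm{B}$ is the beta function. In many applications, the parameters d1 and d2 are positive integers, but the distribution is well-defined for positive real values of these parameters.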
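As a minimal sketch, the second (expanded) form of the density can be evaluated in log space with Python's `math.lgamma`, which avoids overflowing the beta function for large degrees of freedom. The helper name `f_pdf` is hypothetical, and the choice to return 0 outside x > 0 is an assumption of this sketch rather than part of the definition.

```python
import math


def f_pdf(x: float, d1: float, d2: float) -> float:
    """Density of F(d1, d2) at x, using the expanded form of the pdf.

    Computed in log space with math.lgamma so that large degrees of freedom
    do not overflow the beta function.
    """
    if d1 <= 0 or d2 <= 0:
        raise ValueError("degrees of freedom must be positive")
    if x <= 0:
        # The formula is stated for x > 0; this sketch simply returns 0 elsewhere.
        return 0.0
    a, b = d1 / 2.0, d2 / 2.0
    log_beta = math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)  # ln B(a, b)
    log_pdf = (
        a * math.log(d1 / d2)                  # (d1/d2)^(d1/2)
        + (a - 1.0) * math.log(x)              # x^(d1/2 - 1)
        - (a + b) * math.log1p(d1 * x / d2)    # (1 + d1 x / d2)^(-(d1 + d2)/2)
        - log_beta
    )
    return math.exp(log_pdf)


# F(2, 2) reduces to 1 / (1 + x)^2, so this prints approximately 0.25.
print(f_pdf(1.0, 2, 2))
```

Both forms of the pdf agree; the expanded one is used here only because each factor maps onto a single log term.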

The cumulative distribution function is

$$F(x; d_1, d_2) = I_{d_1 x/(d_1 x + d_2)}\!\left(\frac{d_1}{2}, \frac{d_2}{2}\right),$$

where I is the regularized incomplete beta function.
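The Python standard library has no incomplete beta function, so the sketch below assumes one is implemented by hand: it evaluates $I_x(a, b)$ with the classical continued fraction (modified Lentz method), switching to the symmetry $I_x(a, b) = 1 - I_{1-x}(b, a)$ where that converges faster, and then applies the CDF formula above. The names `_beta_cf`, `reg_inc_beta` and `f_cdf` are hypothetical; a numerical library routine would normally be preferred in practice.

```python
import math


def _beta_cf(a: float, b: float, x: float, max_iter: int = 300, eps: float = 1e-15) -> float:
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300

    def clamp(v: float) -> float:
        # Keep denominators away from zero, as the Lentz method requires.
        return v if abs(v) > tiny else tiny

    c = 1.0
    d = 1.0 / clamp(1.0 - (a + b) * x / (a + 1.0))
    h = d
    for m in range(1, max_iter + 1):
        # Each iteration applies one even and one odd term of the fraction.
        for aa in (m * (b - m) * x / ((a + 2 * m - 1.0) * (a + 2 * m)),
                   -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1.0))):
            d = 1.0 / clamp(1.0 + aa * d)
            c = clamp(1.0 + aa / c)
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def reg_inc_beta(a: float, b: float, x: float) -> float:
    """Regularized incomplete beta function I_x(a, b) for a, b > 0."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    # x^a (1 - x)^b / B(a, b), computed in log space.
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(x) + b * math.log1p(-x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, x) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - x) / b


def f_cdf(x: float, d1: float, d2: float) -> float:
    """P(X <= x) for X ~ F(d1, d2), via I_{d1 x / (d1 x + d2)}(d1/2, d2/2)."""
    if x <= 0.0:
        return 0.0
    return reg_inc_beta(d1 / 2.0, d2 / 2.0, d1 * x / (d1 * x + d2))


print(f_cdf(1.0, 2, 2))  # F(2, 2) has CDF x / (1 + x), so about 0.5
```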

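The expectation, variance, and other details about F(d1, d2) are given in the summary table above; for d2 > 8, the excess kurtosis is

$$\gamma_2 = 12\, \frac{d_1 (5 d_2 - 22)(d_1 + d_2 - 2) + (d_2 - 4)(d_2 - 2)^2}{d_1 (d_2 - 6)(d_2 - 8)(d_1 + d_2 - 2)}.$$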
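The closed-form summary statistics from the table, together with the excess kurtosis above, can be collected in one small function. This is only a sketch: the name `f_moments` is hypothetical, and returning NaN when a formula's stated condition fails (for example d2 ≤ 8 for the kurtosis) is a convention chosen here, not part of the definitions.

```python
import math


def f_moments(d1: float, d2: float) -> dict:
    """Closed-form summary statistics of F(d1, d2).

    An entry is NaN whenever the condition attached to its formula fails.
    """
    nan = float("nan")
    out = {
        "mean": d2 / (d2 - 2) if d2 > 2 else nan,
        "mode": (d1 - 2) / d1 * d2 / (d2 + 2) if d1 > 2 else nan,
        "variance": nan,
        "skewness": nan,
        "excess_kurtosis": nan,
    }
    if d2 > 4:
        out["variance"] = 2 * d2**2 * (d1 + d2 - 2) / (d1 * (d2 - 2) ** 2 * (d2 - 4))
    if d2 > 6:
        out["skewness"] = ((2 * d1 + d2 - 2) * math.sqrt(8 * (d2 - 4))
                           / ((d2 - 6) * math.sqrt(d1 * (d1 + d2 - 2))))
    if d2 > 8:
        num = d1 * (5 * d2 - 22) * (d1 + d2 - 2) + (d2 - 4) * (d2 - 2) ** 2
        out["excess_kurtosis"] = 12 * num / (d1 * (d2 - 6) * (d2 - 8) * (d1 + d2 - 2))
    return out


print(f_moments(10, 20))
```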

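The k-th moment of an F(d1, d2) distribution exists and is finite only when 2k < d2, and it is equal to [6]

$$\mu_X(k) = \left(\frac{d_2}{d_1}\right)^{k} \frac{\Gamma\!\left(\frac{d_1}{2} + k\right)}{\Gamma\!\left(\frac{d_1}{2}\right)}\, \frac{\Gamma\!\left(\frac{d_2}{2} - k\right)}{\Gamma\!\left(\frac{d_2}{2}\right)}.$$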
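One way to see that the raw-moment formula and the closed forms in the summary table agree is to build the central moments from $\mu_X(1), \dots, \mu_X(4)$ numerically. The sketch below does that with `math.lgamma`; the helper names are hypothetical, and returning `math.inf` when 2k ≥ d2 reflects the fact that X is non-negative, so the defining integral then diverges.

```python
import math


def f_raw_moment(k: int, d1: float, d2: float) -> float:
    """E[X^k] for X ~ F(d1, d2); +inf when 2k >= d2 (the integral diverges)."""
    if 2 * k >= d2:
        return math.inf
    a, b = d1 / 2.0, d2 / 2.0
    log_mk = (k * math.log(d2 / d1)
              + math.lgamma(a + k) - math.lgamma(a)
              + math.lgamma(b - k) - math.lgamma(b))
    return math.exp(log_mk)


def moments_from_raw(d1: float, d2: float) -> tuple:
    """Mean, variance, skewness and excess kurtosis from raw moments 1 to 4.

    All four raw moments are finite only when d2 > 8.
    """
    m1, m2, m3, m4 = (f_raw_moment(k, d1, d2) for k in range(1, 5))
    var = m2 - m1**2
    mu3 = m3 - 3 * m1 * m2 + 2 * m1**3                    # third central moment
    mu4 = m4 - 4 * m1 * m3 + 6 * m1**2 * m2 - 3 * m1**4   # fourth central moment
    return m1, var, mu3 / var**1.5, mu4 / var**2 - 3.0


print(moments_from_raw(10, 20))
```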

The F-distribution is a particular parametrization of the beta prime distribution, which is also called the beta distribution of the second kind.

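The characteristic function is listed incorrectly in many standard references (e.g., [3]). The correct expression [7] is

$$\varphi^F_{d_1, d_2}(s) = \frac{\Gamma\!\left(\frac{d_1 + d_2}{2}\right)}{\Gamma\!\left(\frac{d_2}{2}\right)}\, U\!\left(\frac{d_1}{2},\, 1 - \frac{d_2}{2},\, -\frac{d_2}{d_1}\, i s\right),$$

where U(a, b, z) is the confluent hypergeometric function of the second kind.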

Characterization

A random variate of the F-distribution with parameters $d_1$ and $d_2$ arises as the ratio of two appropriately scaled chi-squared variates:[8]

$$X = \frac{U_1 / d_1}{U_2 / d_2}$$

where

- $U_1$ and $U_2$ have chi-squared distributions with $d_1$ and $d_2$ degrees of freedom respectively, and

- $U_1$ and $U_2$ are independent.

In instances where the F-distribution is used, for example in the analysis of variance, independence of $U_1$ and $U_2$ can be demonstrated by applying Cochran's theorem.
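The chi-squared characterization translates directly into a sampler. This sketch assumes only Python's `random` module, drawing each chi-squared variate with k degrees of freedom as a Gamma variate with shape k/2 and scale 2 via `random.Random.gammavariate`; the name `sample_f`, the seed and the sample size are arbitrary choices for illustration.

```python
import random


def sample_f(d1: float, d2: float, rng: random.Random) -> float:
    """One F(d1, d2) variate as the ratio of scaled independent chi-squared variates."""
    # A chi-squared variate with k degrees of freedom is Gamma(shape=k/2, scale=2).
    u1 = rng.gammavariate(d1 / 2.0, 2.0)
    u2 = rng.gammavariate(d2 / 2.0, 2.0)
    return (u1 / d1) / (u2 / d2)


rng = random.Random(12345)
d1, d2 = 5, 10
draws = [sample_f(d1, d2, rng) for _ in range(100_000)]
print(sum(draws) / len(draws))  # should land near d2 / (d2 - 2) = 1.25
```

Because the two Gamma draws are made independently, the independence condition on $U_1$ and $U_2$ holds by construction here.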

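Equivalently, the random variable of the F-distribution may also be written

$$X = \frac{s_1^2}{\sigma_1^2} \div \frac{s_2^2}{\sigma_2^2},$$

where $s_1^2 = \frac{S_1^2}{d_1}$ and $s_2^2 = \frac{S_2^2}{d_2}$, $S_1^2$ is the sum of squares of $d_1$ random variables from the normal distribution $N(0, \sigma_1^2)$, and $S_2^2$ is the sum of squares of $d_2$ random variables from the normal distribution $N(0, \sigma_2^2)$.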
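A quick simulation illustrates why each mean square is divided by its own variance: with deliberately unequal $\sigma_1$ and $\sigma_2$, the rescaled ratio still behaves like an F(d1, d2) variate. This is a sketch using `random.Random.gauss`; the function name, seed, variances and sample size are arbitrary, and d1, d2 must be integers here because they count draws.

```python
import random


def scaled_ratio(d1: int, d2: int, sigma1: float, sigma2: float, rng: random.Random) -> float:
    """(s1^2 / sigma1^2) / (s2^2 / sigma2^2), where s_i^2 = S_i^2 / d_i and S_i^2 is
    the sum of squares of d_i independent N(0, sigma_i^2) draws."""
    S1_sq = sum(rng.gauss(0.0, sigma1) ** 2 for _ in range(d1))
    S2_sq = sum(rng.gauss(0.0, sigma2) ** 2 for _ in range(d2))
    s1_sq, s2_sq = S1_sq / d1, S2_sq / d2
    return (s1_sq / sigma1**2) / (s2_sq / sigma2**2)


rng = random.Random(99)
# Unequal variances on purpose: dividing by sigma_i^2 removes them from the ratio.
vals = [scaled_ratio(4, 12, 3.0, 0.5, rng) for _ in range(40_000)]
print(sum(vals) / len(vals))  # should land near 12 / (12 - 2) = 1.2, the F(4, 12) mean
```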

In a frequentist context, a scaled F-distribution therefore gives the probability $p(s_1^2/s_2^2 \mid \sigma_1^2, \sigma_2^2)$, with the F-distribution itself, without any scaling, applying when $\sigma_1^2$ is taken equal to $\sigma_2^2$. This is the context in which the F-distribution most generally appears in F-tests: the null hypothesis is that two independent normal variances are equal, and the observed sums of appropriately selected squares are examined to see whether their ratio is significantly incompatible with this null hypothesis.
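A minimal sketch of the two-sample variance-ratio F-test follows. It assumes two independent samples from normal populations with unknown means; because each mean is estimated, each sample contributes n − 1 degrees of freedom rather than n. The two-sided p-value uses one common convention (twice the smaller tail probability). The incomplete-beta helper is repeated so the block runs on its own, all function names are hypothetical, and the sample values are illustrative numbers, not real data.

```python
import math
import statistics


def _beta_cf(a, b, x, max_iter=300, eps=1e-15):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300

    def clamp(v):
        return v if abs(v) > tiny else tiny

    c, d = 1.0, 1.0 / clamp(1.0 - (a + b) * x / (a + 1.0))
    h = d
    for m in range(1, max_iter + 1):
        for aa in (m * (b - m) * x / ((a + 2 * m - 1.0) * (a + 2 * m)),
                   -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1.0))):
            d = 1.0 / clamp(1.0 + aa * d)
            c = clamp(1.0 + aa / c)
            h *= d * c
        if abs(d * c - 1.0) < eps:
            break
    return h


def f_cdf(x, d1, d2):
    """P(X <= x) for X ~ F(d1, d2) via the regularized incomplete beta function."""
    if x <= 0.0:
        return 0.0
    a, b, z = d1 / 2.0, d2 / 2.0, d1 * x / (d1 * x + d2)
    front = math.exp(math.lgamma(a + b) - math.lgamma(a) - math.lgamma(b)
                     + a * math.log(z) + b * math.log1p(-z))
    if z < (a + 1.0) / (a + b + 2.0):
        return front * _beta_cf(a, b, z) / a
    return 1.0 - front * _beta_cf(b, a, 1.0 - z) / b


def variance_ratio_test(sample1, sample2):
    """Two-sided F-test of H0: equal variances for two independent normal samples."""
    d1, d2 = len(sample1) - 1, len(sample2) - 1   # one d.o.f. lost to each estimated mean
    f_stat = statistics.variance(sample1) / statistics.variance(sample2)
    lower = f_cdf(f_stat, d1, d2)
    p_value = min(1.0, 2.0 * min(lower, 1.0 - lower))  # twice the smaller tail
    return f_stat, d1, d2, p_value


# Illustrative numbers only, not real measurements.
print(variance_ratio_test([1.0, 2.0, 3.0], [0.0, 2.0, 4.0, 6.0, 8.0]))
```

This test relies heavily on the normality assumption, so it is best read as an illustration of the null distribution rather than a robust procedure.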

The quantity X has the same distribution in Bayesian statistics if an uninformative, rescaling-invariant Jeffreys prior is taken for the prior probabilities of $\sigma_1^2$ and $\sigma_2^2$.[9] In this context, a scaled F-distribution thus gives the posterior probability $p(\sigma_2^2/\sigma_1^2 \mid s_1^2, s_2^2)$, where the observed sums $s_1^2$ and $s_2^2$ are now taken as known.
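The Bayesian reading can be checked by sampling from the posterior. This sketch assumes zero-mean normal data with $d_i$ observations in each group and independent Jeffreys priors $p(\sigma_i^2) \propto 1/\sigma_i^2$, under which $\sigma_i^2$ given $S_i^2$ is distributed as $S_i^2 / \chi^2_{d_i}$. Multiplying a posterior draw of $\sigma_2^2/\sigma_1^2$ by $s_1^2/s_2^2$ should then give an F(d1, d2) variate. The observed sums and counts are hypothetical.

```python
import random


def posterior_ratio_draw(S1_sq, d1, S2_sq, d2, rng):
    """One posterior draw of sigma2^2 / sigma1^2, assuming zero-mean normal data and
    independent Jeffreys priors p(sigma_i^2) ∝ 1 / sigma_i^2.

    Under that prior, sigma_i^2 given S_i^2 is S_i^2 divided by a chi-squared(d_i)
    variate, drawn here as Gamma(shape=d_i/2, scale=2).
    """
    sigma1_sq = S1_sq / rng.gammavariate(d1 / 2.0, 2.0)
    sigma2_sq = S2_sq / rng.gammavariate(d2 / 2.0, 2.0)
    return sigma2_sq / sigma1_sq


rng = random.Random(7)
S1_sq, d1 = 30.0, 6     # hypothetical observed sum of squares and sample count
S2_sq, d2 = 12.0, 14
s1_sq, s2_sq = S1_sq / d1, S2_sq / d2
# Rescaling by s1^2 / s2^2 should leave an F(d1, d2) variate.
scaled = [posterior_ratio_draw(S1_sq, d1, S2_sq, d2, rng) * s1_sq / s2_sq
          for _ in range(100_000)]
print(sum(scaled) / len(scaled))  # should land near d2 / (d2 - 2), about 1.167
```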

Properties and related distributions

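- If $X \sim \chi^2_{d_1}$ and $Y \sim \chi^2_{d_2}$ are independent, then $\frac{X / d_1}{Y / d_2} \sim \mathrm{F}(d_1, d_2)$.

- If $X_k \sim \Gamma(\alpha_k, \beta_k)$ (shape $\alpha_k$, rate $\beta_k$) are independent, then $\frac{\alpha_2 \beta_1 X_1}{\alpha_1 \beta_2 X_2} \sim \mathrm{F}(2\alpha_1, 2\alpha_2)$.

- If $X \sim \mathrm{Beta}(d_1/2, d_2/2)$ (beta distribution), then $\frac{d_2 X}{d_1 (1 - X)} \sim \mathrm{F}(d_1, d_2)$.

- Equivalently, if $X \sim \mathrm{F}(d_1, d_2)$, then $\frac{d_1 X / d_2}{1 + d_1 X / d_2} \sim \mathrm{Beta}(d_1/2, d_2/2)$.

- If $X \sim \mathrm{F}(d_1, d_2)$, then $\frac{d_1}{d_2} X$ has a beta prime distribution: $\frac{d_1}{d_2} X \sim \beta'\!\left(\frac{d_1}{2}, \frac{d_2}{2}\right)$.

- If $X \sim \mathrm{F}(d_1, d_2)$, then $Y = \lim_{d_2 \to \infty} d_1 X$ has the chi-squared distribution $\chi^2_{d_1}$.

- $\mathrm{F}(d_1, d_2)$ is equivalent to the scaled Hotelling's T-squared distribution $\frac{d_2}{d_1 (d_1 + d_2 - 1)}\, \mathrm{T}^2(d_1, d_1 + d_2 - 1)$.

- If $X \sim \mathrm{F}(d_1, d_2)$, then $X^{-1} \sim \mathrm{F}(d_2, d_1)$.

- If $X \sim t_{(n)}$ (Student's t-distribution), then $X^2 \sim \mathrm{F}(1, n)$ and $X^{-2} \sim \mathrm{F}(n, 1)$.

- The F-distribution is a special case of the type 6 Pearson distribution.

- If $X$ and $Y$ are independent, with $X, Y \sim \mathrm{Laplace}(\mu, b)$, then $\frac{|X - \mu|}{|Y - \mu|} \sim \mathrm{F}(2, 2)$.

- If $X \sim \mathrm{F}(n, m)$, then $\frac{\log X}{2} \sim \mathrm{FisherZ}(n, m)$ (Fisher's z-distribution).

- The noncentral F-distribution simplifies to the F-distribution if $\lambda = 0$.

- The doubly noncentral F-distribution simplifies to the F-distribution if $\lambda_1 = \lambda_2 = 0$.

- If $\mathrm{Q}_X(p)$ is the quantile $p$ for $X \sim \mathrm{F}(d_1, d_2)$ and $\mathrm{Q}_Y(1 - p)$ is the quantile $1 - p$ for $Y \sim \mathrm{F}(d_2, d_1)$, then $\mathrm{Q}_X(p) = \frac{1}{\mathrm{Q}_Y(1 - p)}$.

- The F-distribution is an instance of ratio distributions.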
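Several of the relations listed above can be spot-checked by Monte Carlo using only Python's `random` module. The sketch compares the beta-based construction and the squared Student t against known F means, the Laplace ratio against the F(2, 2) CDF x / (1 + x), and the reciprocal relation against direct F(10, 5) draws. It assumes a Laplace(μ, b) variate can be drawn as μ + b(E₁ − E₂) with independent Exp(1) variates E₁, E₂; the seed, sample size and helper names are arbitrary.

```python
import math
import random

rng = random.Random(2024)
N = 50_000


def chi2(k):
    # Chi-squared(k) drawn as Gamma(shape=k/2, scale=2).
    return rng.gammavariate(k / 2.0, 2.0)


def f_direct(d1, d2):
    return (chi2(d1) / d1) / (chi2(d2) / d2)


def f_from_beta(d1, d2):
    x = rng.betavariate(d1 / 2.0, d2 / 2.0)
    return d2 * x / (d1 * (1.0 - x))


def t_squared(n):
    t = rng.gauss(0.0, 1.0) / math.sqrt(chi2(n) / n)
    return t * t


def laplace_ratio(mu, b):
    # Laplace(mu, b) drawn as mu + b * (E1 - E2) with E1, E2 ~ Exp(1).
    x = mu + b * (rng.expovariate(1.0) - rng.expovariate(1.0))
    y = mu + b * (rng.expovariate(1.0) - rng.expovariate(1.0))
    return abs(x - mu) / abs(y - mu)


def frac_below(samples, c):
    return sum(s <= c for s in samples) / len(samples)


beta_based = [f_from_beta(5, 10) for _ in range(N)]   # should be F(5, 10)
tsq = [t_squared(8) for _ in range(N)]                # should be F(1, 8)
lap = [laplace_ratio(1.5, 2.0) for _ in range(N)]     # should be F(2, 2)
inv = [1.0 / f_direct(5, 10) for _ in range(N)]       # should be F(10, 5)
ref = [f_direct(10, 5) for _ in range(N)]

print(sum(beta_based) / N)                          # near 10 / 8 = 1.25
print(sum(tsq) / N)                                 # near 8 / 6, about 1.333
print(frac_below(lap, 3.0))                         # near 3 / (1 + 3) = 0.75
print(frac_below(inv, 1.0), frac_below(ref, 1.0))   # both near P(F(10, 5) <= 1)
```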


References

- Lazo, A. V.; Rathie, P. (1978). "On the entropy of continuous probability distributions". IEEE Transactions on Information Theory. 24 (1): 120–122. doi:10.1109/tit.1978.1055832.

- Johnson, Norman Lloyd; Kotz, Samuel; Balakrishnan, N. (1995). Continuous Univariate Distributions, Volume 2 (2nd ed., Section 27). Wiley. ISBN 0-471-58494-0.

- Abramowitz, Milton; Stegun, Irene Ann, eds. (1983) [June 1964]. "Chapter 26". Handbook of Mathematical Functions with Formulas, Graphs, and Mathematical Tables. Applied Mathematics Series 55 (Ninth reprint with additional corrections of tenth original printing with corrections (December 1972); first ed.). Washington, D.C.; New York: United States Department of Commerce, National Bureau of Standards; Dover Publications. p. 946. ISBN 978-0-486-61272-0. LCCN 64-60036. MR 0167642. LCCN 65-12253.

- NIST (2006). Engineering Statistics Handbook – F Distribution.

- Mood, Alexander; Graybill, Franklin A.; Boes, Duane C. (1974). Introduction to the Theory of Statistics (3rd ed.). McGraw-Hill. pp. 246–249. ISBN 0-07-042864-6.

- Taboga, Marco. "The F distribution".

- Phillips, P. C. B. (1982). "The true characteristic function of the F distribution". Biometrika. 69: 261–264. JSTOR 2335882.

- DeGroot, M. H. (1986). Probability and Statistics (2nd ed.). Addison-Wesley. p. 500. ISBN 0-201-11366-X.

- Box, G. E. P.; Tiao, G. C. (1973). Bayesian Inference in Statistical Analysis. Addison-Wesley. p. 110.