Electronic music

Electronic music is music that employs electronic musical instruments, digital instruments or circuitry-based music technology. A distinction can be made between sound produced using electromechanical means (electroacoustic music) and that produced using electronics only.[1] Electromechanical instruments have mechanical elements, such as strings and hammers, and electric elements, such as magnetic pickups, power amplifiers and loudspeakers. Examples of electromechanical sound-producing devices include the telharmonium, Hammond organ, electric piano and electric guitar, which are typically made loud enough for performers and audiences to hear with an instrument amplifier and speaker cabinet. Purely electronic instruments do not have vibrating strings, hammers or other sound-producing mechanisms. Devices such as the theremin, synthesizer and computer can produce electronic sounds.[2]


The first electronic devices for performing music were developed at the end of the 19th century, and shortly afterward Italian futurists explored sounds that had not been considered musical. During the 1920s and 1930s, electronic instruments were introduced and the first compositions for electronic instruments were made. By the 1940s, magnetic audio tape allowed musicians to tape sounds and then modify them by changing the tape speed or direction, leading to the development of electroacoustic tape music in Egypt and France during that decade. Musique concrète, created in Paris in 1948, was based on editing together recorded fragments of natural and industrial sounds. Music made solely from electronic generators was first produced in Germany in 1953. Electronic music was also created in Japan and the United States beginning in the 1950s. An important new development was the use of computers to compose music. Algorithmic composition with computers was first demonstrated in the 1950s (although algorithmic composition per se, without a computer, had occurred much earlier, for example in Mozart's Musikalisches Würfelspiel).

In the 1960s, live electronics were pioneered in America and Europe, Japanese electronic musical instruments began influencing the music industry and Jamaican dub music emerged as a form of popular electronic music. In the early 1970s, the monophonic Minimoog synthesizer and Japanese drum machines helped popularize synthesized electronic music.

In the 1970s, electronic music began to have a significant influence on popular music, with the adoption of polyphonic synthesizers, electronic drums, drum machines and turntables, through the emergence of genres such as disco, krautrock, new wave, synth-pop, hip hop and EDM. In the 1980s, electronic music became more dominant in popular music, with a greater reliance on synthesizers and the adoption of programmable drum machines such as the Roland TR-808 and bass synthesizers such as the TB-303. In the early 1980s, digital synthesizers such as the Yamaha DX7 became popular, and a group of musicians and music merchants developed the Musical Instrument Digital Interface (MIDI).

Electronically produced music became popular by the 1990s, because of the advent of affordable music technology.[3] Contemporary electronic music includes many varieties and ranges from experimental art music to popular forms such as electronic dance music. Pop electronic music is most recognizable in its 4/4 form and more connected with the mainstream than preceding forms which were popular in niche markets.[4]

Origins: late 19th century to early 20th century


At the turn of the 20th century, experimentation with emerging electronics led to the first electronic musical instruments.[5] These initial inventions were not sold, but were instead used in demonstrations and public performances. The audiences were presented with reproductions of existing music instead of new compositions for the instruments.[6] While some were considered novelties and produced simple tones, the Telharmonium synthesized the sound of several orchestral instruments with reasonable precision. It achieved viable public interest and made commercial progress into streaming music through telephone networks.[7]

Critics of musical conventions at the time saw promise in these developments. Ferruccio Busoni encouraged the composition of microtonal music allowed for by electronic instruments. He predicted the use of machines in future music, writing the influential Sketch of a New Esthetic of Music (1907).[8] Futurists such as Francesco Balilla Pratella and Luigi Russolo began composing music with acoustic noise to evoke the sound of machinery. They predicted expansions in timbre allowed for by electronics in the influential manifesto The Art of Noises (1913).[9][10]

Early compositions

Developments of the vacuum tube led to electronic instruments that were smaller, amplified, and more practical for performance.[11] In particular, the theremin, ondes Martenot and trautonium were commercially produced by the early 1930s.[12][13]

From the late 1920s, the increased practicality of electronic instruments influenced composers such as Joseph Schillinger to adopt them. They were typically used within orchestras, and most composers wrote parts for the theremin that could otherwise be performed with string instruments.[12]

Avant-garde composers criticized the predominant use of electronic instruments for conventional purposes.[12] The instruments offered expansions in pitch resources[14] that were exploited by advocates of microtonal music such as Charles Ives, Dimitrios Levidis, Olivier Messiaen and Edgard Varèse.[15][16][17] Further, Percy Grainger used the theremin to abandon fixed tonation entirely,[18] while Russian composers such as Gavriil Popov treated it as a source of noise in otherwise-acoustic noise music.[19]

Recording experiments

Developments in early recording technology paralleled those in electronic instruments. The first means of recording and reproducing audio was invented in the late 19th century with the mechanical phonograph.[20] Record players became a common household item, and by the 1920s composers were using them to play short recordings in performances.[21]

The introduction of electrical recording in 1925 was followed by increased experimentation with record players. Paul Hindemith and Ernst Toch composed several pieces in 1930 by layering recordings of instruments and vocals at adjusted speeds. Influenced by these techniques, John Cage composed Imaginary Landscape No. 1 in 1939 by adjusting the speeds of recorded tones.[22]

Concurrently, composers began to experiment with newly developed sound-on-film technology. Recordings could be spliced together to create sound collages, such as those by Tristan Tzara, Kurt Schwitters, Filippo Tommaso Marinetti, Walter Ruttmann and Dziga Vertov. Further, the technology allowed sound to be graphically created and modified. These techniques were used to compose soundtracks for several films in Germany and Russia, in addition to the popular Dr. Jekyll and Mr. Hyde in the United States. Experiments with graphical sound were continued by Norman McLaren from the late 1930s.[23]

Development: 1940s to 1950s

Electroacoustic tape music

The first practical audio tape recorder was unveiled in 1935.[24] Improvements to the technology were made using the AC biasing technique, which significantly improved recording fidelity.[25][26] As early as 1942, test recordings were being made in stereo.[27] Although these developments were initially confined to Germany, recorders and tapes were brought to the United States following the end of World War II.[28] These were the basis for the first commercially produced tape recorder in 1948.[29]

In 1944, prior to the use of magnetic tape for compositional purposes, Egyptian composer Halim El-Dabh, while still a student in Cairo, used a cumbersome wire recorder to record sounds of an ancient zaar ceremony. Using facilities at the Middle East Radio studios El-Dabh processed the recorded material using reverberation, echo, voltage controls, and re-recording. What resulted is believed to be the earliest tape music composition.[30] The resulting work was entitled The Expression of Zaar and it was presented in 1944 at an art gallery event in Cairo. While his initial experiments in tape-based composition were not widely known outside of Egypt at the time, El-Dabh is also known for his later work in electronic music at the Columbia-Princeton Electronic Music Center in the late 1950s.[31]

Musique concrète

Following his work with Studio d'Essai at Radiodiffusion Française (RDF) during the early 1940s, Pierre Schaeffer is credited with originating the theory and practice of musique concrète. In the late 1940s, Schaeffer conducted the first experiments in sound-based composition using shellac record players. In 1950, the techniques of musique concrète were expanded when magnetic tape machines were used to explore sound manipulation practices such as speed variation (pitch shift) and tape splicing (Palombini 1993, 14).[32]

On 5 October 1948, RDF broadcast Schaeffer's Étude aux chemins de fer. This was the first "movement" of Cinq études de bruits, and marked the beginning of studio realizations[33] and musique concrète (or acousmatic art). Schaeffer employed a disk-cutting lathe, four turntables, a four-channel mixer, filters, an echo chamber, and a mobile recording unit. Not long after this, Pierre Henry began collaborating with Schaeffer, a partnership that would have profound and lasting effects on the direction of electronic music. Another associate of Schaeffer, Edgard Varèse, began work on Déserts, a work for chamber orchestra and tape. The tape parts were created at Pierre Schaeffer's studio, and were later revised at Columbia University.

In 1950, Schaeffer gave the first public (non-broadcast) concert of musique concrète at the École Normale de Musique de Paris. "Schaeffer used a PA system, several turntables, and mixers. The performance did not go well, as creating live montages with turntables had never been done before."[34] Later that same year, Pierre Henry collaborated with Schaeffer on Symphonie pour un homme seul (1950), the first major work of musique concrète. In Paris in 1951, in what was to become an important worldwide trend, RTF established the first studio for the production of electronic music. Also in 1951, Schaeffer and Henry produced an opera, Orpheus, for concrete sounds and voices.

By 1951 the work of Schaeffer, composer-percussionist Pierre Henry, and sound engineer Jacques Poullin had received official recognition, and the Groupe de Recherches de Musique Concrète, Club d'Essai de la Radiodiffusion-Télévision Française was established at RTF in Paris, the ancestor of the ORTF.[35]

Elektronische Musik

Karlheinz Stockhausen worked briefly in Schaeffer's studio in 1952, and afterward for many years at the WDR Cologne's Studio for Electronic Music.

1954 saw the advent of what would now be considered authentic electric plus acoustic compositions: acoustic instrumentation augmented or accompanied by recordings of manipulated or electronically generated sound. Three major works were premiered that year: Varèse's Déserts, for chamber ensemble and tape sounds, and two works by Otto Luening and Vladimir Ussachevsky: Rhapsodic Variations for the Louisville Symphony and A Poem in Cycles and Bells, both for orchestra and tape. Because he had been working at Schaeffer's studio, the tape part for Varèse's work contains many more concrete sounds than electronic ones. "A group made up of wind instruments, percussion and piano alternates with the mutated sounds of factory noises and ship sirens and motors, coming from two loudspeakers."[36]

At the German premiere of Déserts in Hamburg, which was conducted by Bruno Maderna, the tape controls were operated by Karlheinz Stockhausen.[36] The title Déserts suggested to Varèse not only "all physical deserts (of sand, sea, snow, of outer space, of empty streets), but also the deserts in the mind of man; not only those stripped aspects of nature that suggest bareness, aloofness, timelessness, but also that remote inner space no telescope can reach, where man is alone, a world of mystery and essential loneliness."[37]

In Cologne, what would become the most famous electronic music studio in the world was officially opened at the radio studios of the NWDR in 1953, though it had been in the planning stages as early as 1950 and early compositions were made and broadcast in 1951.[38] The brainchild of Werner Meyer-Eppler, Robert Beyer, and Herbert Eimert (who became its first director), the studio was soon joined by Karlheinz Stockhausen and Gottfried Michael Koenig. In his 1949 thesis Elektronische Klangerzeugung: Elektronische Musik und Synthetische Sprache, Meyer-Eppler conceived the idea of synthesizing music entirely from electronically produced signals; in this way, elektronische Musik was sharply differentiated from French musique concrète, which used sounds recorded from acoustical sources.[39]

In 1954, Stockhausen composed his Elektronische Studie II—the first electronic piece to be published as a score. In 1955, more experimental and electronic studios began to appear. Notable were the creation of the Studio di fonologia musicale di Radio Milano, a studio at the NHK in Tokyo founded by Toshiro Mayuzumi, and the Philips studio at Eindhoven, the Netherlands, which moved to the University of Utrecht as the Institute of Sonology in 1960.

"With Stockhausen and Mauricio Kagel in residence, it became a year-round hive of charismatic avante-gardism [sic]".[40] Stockhausen on two occasions combined electronically generated sounds with relatively conventional orchestras: in Mixtur (1964) and Hymnen, dritte Region mit Orchester (1967).[41] Stockhausen stated that his listeners had told him his electronic music gave them an experience of "outer space", sensations of flying, or being in a "fantastic dream world".[42] In his later years, Stockhausen turned to producing electronic music in his own studio in Kürten, his last work in the medium being Cosmic Pulses (2007).

Japanese electronic music


Among the earliest electronic musical instruments in Japan, the Yamaha Magna Organ was built in 1935.[43] After World War II, however, Japanese composers such as Minao Shibata knew of the development of electronic musical instruments. By the late 1940s, Japanese composers began experimenting with electronic music, and institutional sponsorship enabled them to experiment with advanced equipment. Their infusion of Asian music into the emerging genre would eventually support Japan's popularity in the development of music technology several decades later.[44]

Following the foundation of electronics company Sony in 1946, composers Toru Takemitsu and Minao Shibata independently explored possible uses for electronic technology to produce music.[45] Takemitsu had ideas similar to musique concrète, which he was unaware of, while Shibata foresaw the development of synthesizers and predicted a drastic change in music.[46] Sony began producing popular magnetic tape recorders for government and public use.[44][47]

The avant-garde collective Jikken Kōbō (Experimental Workshop), founded in 1950, was offered access to emerging audio technology by Sony. The company hired Toru Takemitsu to demonstrate their tape recorders with compositions and performances of electronic tape music.[48] The first electronic tape pieces by the group were "Toraware no Onna" ("Imprisoned Woman") and "Piece B", composed in 1951 by Kuniharu Akiyama.[49] Many of the electroacoustic tape pieces they produced were used as incidental music for radio, film, and theatre. They also held concerts employing a slide show synchronized with a recorded soundtrack.[50] Composers outside of the Jikken Kōbō, such as Yasushi Akutagawa, Saburo Tominaga and Shirō Fukai, were also experimenting with radiophonic tape music between 1952 and 1953.[47]

Musique concrète was introduced to Japan by Toshiro Mayuzumi, who was influenced by a Pierre Schaeffer concert. From 1952, he composed tape music pieces for a comedy film, a radio broadcast, and a radio drama.[49][51] However, Schaeffer's concept of sound object was not influential among Japanese composers, who were mainly interested in overcoming the restrictions of human performance.[52] This led to several Japanese electroacoustic musicians making use of serialism and twelve-tone techniques,[52] evident in Yoshirō Irino's 1951 dodecaphonic piece "Concerto da Camera",[51] in the organization of electronic sounds in Mayuzumi's "X, Y, Z for Musique Concrète", and later in Shibata's electronic music by 1956.[53]

Modelling it on the NWDR studio in Cologne, NHK established an electronic music studio in Tokyo in 1955, which became one of the world's leading electronic music facilities. The NHK Studio was equipped with technologies such as tone-generating and audio processing equipment, recording and radiophonic equipment, ondes Martenot, Monochord and Melochord, sine-wave oscillators, tape recorders, ring modulators, band-pass filters, and four- and eight-channel mixers. Musicians associated with the studio included Toshiro Mayuzumi, Minao Shibata, Joji Yuasa, Toshi Ichiyanagi, and Toru Takemitsu. The studio's first electronic compositions were completed in 1955, including Mayuzumi's five-minute pieces "Studie I: Music for Sine Wave by Proportion of Prime Number", "Music for Modulated Wave by Proportion of Prime Number" and "Invention for Square Wave and Sawtooth Wave", produced using the studio's various tone-generating capabilities, and Shibata's 20-minute stereo piece "Musique Concrète for Stereophonic Broadcast".[54][55]

American electronic music

In the United States, electronic music was being created as early as 1939, when John Cage published Imaginary Landscape, No. 1, using two variable-speed turntables, frequency recordings, muted piano, and cymbal, but no electronic means of production. Cage composed five more "Imaginary Landscapes" between 1942 and 1952 (one withdrawn), mostly for percussion ensemble, though No. 4 is for twelve radios and No. 5, written in 1952, uses 42 recordings and is to be realized as a magnetic tape. According to Otto Luening, Cage also performed a William [sic] Mix at Donaueschingen in 1954, using eight loudspeakers, three years after his alleged collaboration. Williams Mix was a success at the Donaueschingen Festival, where it made a "strong impression".[56]

The Music for Magnetic Tape Project was formed by members of the New York School (John Cage, Earle Brown, Christian Wolff, David Tudor, and Morton Feldman),[57] and lasted three years until 1954. Cage wrote of this collaboration: "In this social darkness, therefore, the work of Earle Brown, Morton Feldman, and Christian Wolff continues to present a brilliant light, for the reason that at the several points of notation, performance, and audition, action is provocative."[58]

Cage completed Williams Mix in 1953 while working with the Music for Magnetic Tape Project.[59] The group had no permanent facility, and had to rely on borrowed time in commercial sound studios, including the studio of Louis and Bebe Barron.

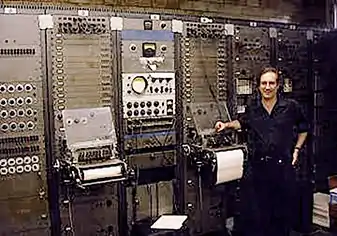

Columbia-Princeton Center

In the same year Columbia University purchased its first tape recorder—a professional Ampex machine—for the purpose of recording concerts. Vladimir Ussachevsky, who was on the music faculty of Columbia University, was placed in charge of the device, and almost immediately began experimenting with it.

Herbert Russcol writes: "Soon he was intrigued with the new sonorities he could achieve by recording musical instruments and then superimposing them on one another."[60] Ussachevsky said later: "I suddenly realized that the tape recorder could be treated as an instrument of sound transformation."[60] On Thursday, May 8, 1952, Ussachevsky presented several demonstrations of tape music/effects that he created at his Composers Forum, in the McMillin Theatre at Columbia University. These included Transposition, Reverberation, Experiment, Composition, and Underwater Valse. In an interview, he stated: "I presented a few examples of my discovery in a public concert in New York together with other compositions I had written for conventional instruments."[60] Otto Luening, who had attended this concert, remarked: "The equipment at his disposal consisted of an Ampex tape recorder . . . and a simple box-like device designed by the brilliant young engineer, Peter Mauzey, to create feedback, a form of mechanical reverberation. Other equipment was borrowed or purchased with personal funds."[61]

Just three months later, in August 1952, Ussachevsky traveled to Bennington, Vermont at Luening's invitation to present his experiments. There, the two collaborated on various pieces. Luening described the event: "Equipped with earphones and a flute, I began developing my first tape-recorder composition. Both of us were fluent improvisors and the medium fired our imaginations."[61] They played some early pieces informally at a party, where "a number of composers almost solemnly congratulated us saying, 'This is it' ('it' meaning the music of the future)."[61]

Word quickly reached New York City. Oliver Daniel telephoned and invited the pair to "produce a group of short compositions for the October concert sponsored by the American Composers Alliance and Broadcast Music, Inc., under the direction of Leopold Stokowski at the Museum of Modern Art in New York. After some hesitation, we agreed. . . . Henry Cowell placed his home and studio in Woodstock, New York, at our disposal. With the borrowed equipment in the back of Ussachevsky's car, we left Bennington for Woodstock and stayed two weeks. . . . In late September, 1952, the travelling laboratory reached Ussachevsky's living room in New York, where we eventually completed the compositions."[61]

Two months later, on October 28, Vladimir Ussachevsky and Otto Luening presented the first Tape Music concert in the United States. The concert included Luening's Fantasy in Space (1952)—"an impressionistic virtuoso piece"[61] using manipulated recordings of flute—and Low Speed (1952), an "exotic composition that took the flute far below its natural range."[61] Both pieces were created at the home of Henry Cowell in Woodstock, New York. After several concerts caused a sensation in New York City, Ussachevsky and Luening were invited onto a live broadcast of NBC's Today Show to do an interview demonstration—the first televised electroacoustic performance. Luening described the event: "I improvised some [flute] sequences for the tape recorder. Ussachevsky then and there put them through electronic transformations."[62]

The score for Forbidden Planet, by Louis and Bebe Barron,[63] was entirely composed using custom built electronic circuits and tape recorders in 1956 (but no synthesizers in the modern sense of the word).

Australia

The world's first computer to play music was CSIRAC, which was designed and built by Trevor Pearcey and Maston Beard. Mathematician Geoff Hill programmed the CSIRAC to play popular musical melodies from the very early 1950s. In 1951 it publicly played the Colonel Bogey March; no recordings of CSIRAC are known to exist, but the music it played has been accurately reconstructed.[64] However, CSIRAC played standard repertoire and was not used to extend musical thinking or composition practice. The oldest known recordings of computer-generated music were played by the Ferranti Mark 1 computer, a commercial version of the Baby Machine from the University of Manchester, in the autumn of 1951.[65] The music program was written by Christopher Strachey.

Mid-to-late 1950s

The impact of computers continued in 1956. Lejaren Hiller and Leonard Isaacson composed Illiac Suite for string quartet, the first complete work of computer-assisted composition using algorithmic composition. "... Hiller postulated that a computer could be taught the rules of a particular style and then called on to compose accordingly."[66] Later developments included the work of Max Mathews at Bell Laboratories, who developed the influential MUSIC I program in 1957, one of the first computer programs to play electronic music. Vocoder technology was also a major development in this early era. In 1956, Stockhausen composed Gesang der Jünglinge, the first major work of the Cologne studio, based on a text from the Book of Daniel. An important technological development of that year was the invention of the Clavivox synthesizer by Raymond Scott with subassembly by Robert Moog.

In 1957, Kid Baltan (Dick Raaymakers) and Tom Dissevelt released their debut album, Song Of The Second Moon, recorded at the Philips studio in the Netherlands.[67] The public remained interested in the new sounds being created around the world, as can be deduced from the inclusion of Varèse's Poème électronique, which was played over four hundred loudspeakers at the Philips Pavilion of the 1958 Brussels World Fair. That same year, Mauricio Kagel, an Argentine composer, composed Transición II. The work was realized at the WDR studio in Cologne. Two musicians performed on a piano, one in the traditional manner, the other playing on the strings, frame, and case. Two other performers used tape to unite the live sounds with prerecorded material to be heard later in the piece (its future) and with recordings made earlier in the performance (its past).

In 1958, Columbia-Princeton developed the RCA Mark II Sound Synthesizer, the first programmable synthesizer.[68] Prominent composers such as Vladimir Ussachevsky, Otto Luening, Milton Babbitt, Charles Wuorinen, Halim El-Dabh, Bülent Arel and Mario Davidovsky used the RCA Synthesizer extensively in various compositions.[69] One of the most influential composers associated with the early years of the studio was Egypt's Halim El-Dabh who,[70] after having developed the earliest known electronic tape music in 1944,[30] became more famous for Leiyla and the Poet, a 1959 series of electronic compositions that stood out for its immersion and seamless fusion of electronic and folk music, in contrast to the more mathematical approach used by serial composers of the time such as Babbitt. El-Dabh's Leiyla and the Poet, released as part of the album Columbia-Princeton Electronic Music Center in 1961, would be cited as a strong influence by a number of musicians, ranging from Neil Rolnick, Charles Amirkhanian and Alice Shields to rock musicians Frank Zappa and The West Coast Pop Art Experimental Band.[71]

Following the emergence of differences within the GRMC (Groupe de Recherche de Musique Concrète), Pierre Henry, Philippe Arthuys, and several of their colleagues resigned in April 1958. Schaeffer created a new collective, called Groupe de Recherches Musicales (GRM), and set about recruiting new members including Luc Ferrari, Beatriz Ferreyra, François-Bernard Mâche, Iannis Xenakis, Bernard Parmegiani, and Mireille Chamass-Kyrou. Later arrivals included Ivo Malec, Philippe Carson, Romuald Vandelle, Edgardo Canton and François Bayle.[72]

Expansion: 1960s

These were fertile years for electronic music—not just for academia, but for independent artists as synthesizer technology became more accessible. By this time, a strong community of composers and musicians working with new sounds and instruments was established and growing. 1960 witnessed the composition of Luening's Gargoyles for violin and tape as well as the premiere of Stockhausen's Kontakte for electronic sounds, piano, and percussion. This piece existed in two versions—one for 4-channel tape, and the other for tape with human performers. "In Kontakte, Stockhausen abandoned traditional musical form based on linear development and dramatic climax. This new approach, which he termed 'moment form', resembles the 'cinematic splice' techniques in early twentieth century film."[73]

The theremin had been in use since the 1920s but it attained a degree of popular recognition through its use in science-fiction film soundtrack music in the 1950s (e.g., Bernard Herrmann's classic score for The Day the Earth Stood Still).[74]

In the UK in this period, the BBC Radiophonic Workshop (established in 1958) came to prominence, thanks in large measure to their work on the BBC science-fiction series Doctor Who. One of the most influential British electronic artists in this period[75] was Workshop staffer Delia Derbyshire, who is now famous for her 1963 electronic realisation of the iconic Doctor Who theme, composed by Ron Grainer.

In 1961 Josef Tal established the Centre for Electronic Music in Israel at The Hebrew University, and in 1962 Hugh Le Caine arrived in Jerusalem to install his Creative Tape Recorder in the centre.[76] In the 1990s Tal conducted, together with Dr Shlomo Markel, in cooperation with the Technion – Israel Institute of Technology, and VolkswagenStiftung a research project (Talmark) aimed at the development of a novel musical notation system for electronic music.[77]

Milton Babbitt composed his first electronic work using the synthesizer—his Composition for Synthesizer (1961)—which he created using the RCA synthesizer at the Columbia-Princeton Electronic Music Center.

For Babbitt, the RCA synthesizer was a dream come true for three reasons. First, it offered the ability to pinpoint and control every musical element precisely. Second, the time needed to realize his elaborate serial structures was brought within practical reach. Third, the question was no longer "What are the limits of the human performer?" but rather "What are the limits of human hearing?"[78]

Collaborations also occurred across oceans and continents. In 1961, Ussachevsky invited Varèse to the Columbia-Princeton Studio (CPEMC). Upon arrival, Varèse embarked upon a revision of Déserts. He was assisted by Mario Davidovsky and Bülent Arel.[79]

The intense activity occurring at CPEMC and elsewhere inspired the establishment of the San Francisco Tape Music Center in 1963 by Morton Subotnick, with additional members Pauline Oliveros, Ramon Sender, Anthony Martin, and Terry Riley.[80]

Later, the Center moved to Mills College, directed by Pauline Oliveros, where it is today known as the Center for Contemporary Music.[81]

Simultaneously in San Francisco, composer Stan Shaff and equipment designer Doug McEachern presented the first "Audium" concert at San Francisco State College (1962), followed by a work at the San Francisco Museum of Modern Art (1963), conceived as the controlled movement of sound in space over time. Twelve speakers surrounded the audience, and four speakers were mounted on a rotating, mobile-like construction above.[82] Of an SFMOMA performance the following year (1964), San Francisco Chronicle music critic Alfred Frankenstein commented, "the possibilities of the space-sound continuum have seldom been so extensively explored".[82] In 1967, the first Audium, a "sound-space continuum", opened, holding weekly performances through 1970. In 1975, enabled by seed money from the National Endowment for the Arts, a new Audium opened, designed floor to ceiling for spatial sound composition and performance.[83] "In contrast, there are composers who manipulated sound space by locating multiple speakers at various locations in a performance space and then switching or panning the sound between the sources. In this approach, the composition of spatial manipulation is dependent on the location of the speakers and usually exploits the acoustical properties of the enclosure. Examples include Varese's Poeme Electronique (tape music performed in the Philips Pavilion of the 1958 World Fair, Brussels) and Stanley Schaff's [sic] Audium installation, currently active in San Francisco".[84] Through weekly programs (over 4,500 in 40 years), Shaff "sculpts" sound, performing now-digitized spatial works live through 176 speakers.[85]

A well-known example of the use of Moog's full-sized Moog modular synthesizer is the Switched-On Bach album by Wendy Carlos, which triggered a craze for synthesizer music.

In 1969 David Tudor brought a Moog modular synthesizer and Ampex tape machines to the National Institute of Design in Ahmedabad with the support of the Sarabhai family, forming the foundation of India's first electronic music studio. Here a group of composers, Jinraj Joshipura, Gita Sarabhai, SC Sharma, IS Mathur and Atul Desai, developed experimental sound compositions between 1969 and 1973.[86]

Along with the Moog modular synthesizer, other makes of this period included ARP and Buchla.

Pietro Grossi was an Italian pioneer of computer composition and tape music, who first experimented with electronic techniques in the early sixties. Grossi was a cellist and composer, born in Venice in 1917. He founded the S 2F M (Studio di Fonologia Musicale di Firenze) in 1963 to experiment with electronic sound and composition.

Computer music

Musical melodies were first generated by the computer CSIRAC in Australia in 1950. Newspaper reports from America and England, both from the period and more recent, suggested that computers may have played music earlier, but thorough research has debunked these stories, as there is no evidence to support them (some of the reports were obviously speculative). Research has shown that people speculated about computers playing music, possibly because computers would make noises,[87] but there is no evidence that they actually did so.[88][89]

The world's first computer to play music was CSIRAC, which was designed and built by Trevor Pearcey and Maston Beard in the 1950s. Mathematician Geoff Hill programmed the CSIRAC to play popular musical melodies from the very early 1950s. In 1951 it publicly played the "Colonel Bogey March",[90] of which no known recordings exist. However, CSIRAC played standard repertoire and was not used to extend musical thinking or composition practice, which is what current computer-music practice does.

The first computer music to be performed in England was a rendition of the British National Anthem, programmed by Christopher Strachey on the Ferranti Mark I late in 1951. Later that year, short extracts of three pieces were recorded there by a BBC outside broadcasting unit: the National Anthem, "Ba, Ba Black Sheep", and "In the Mood". This is recognised as the earliest recording of a computer playing music. The recording is available from Manchester University. Researchers at the University of Canterbury, Christchurch declicked and restored this recording in 2016, and the results may be heard on SoundCloud.[91][92][65]

The late 1950s, 1960s and 1970s also saw the development of large mainframe computer synthesis. Starting in 1957, Max Mathews of Bell Labs developed the MUSIC programs, culminating in MUSIC V, a direct digital synthesis language.[93]

Laurie Spiegel developed the algorithmic musical composition software "Music Mouse" (1986) for Macintosh, Amiga, and Atari computers.

Live electronics

In Europe in 1964, Karlheinz Stockhausen composed Mikrophonie I for tam-tam, hand-held microphones, filters, and potentiometers, and Mixtur for orchestra, four sine-wave generators, and four ring modulators. In 1965 he composed Mikrophonie II for choir, Hammond organ, and ring modulators.[94]

In 1966–67, Reed Ghazala discovered and began to teach "circuit bending"—the application of the creative short circuit, a process of chance short-circuiting, creating experimental electronic instruments, exploring sonic elements mainly of timbre and with less regard to pitch or rhythm, and influenced by John Cage's aleatoric music [sic] concept.[95]

Japanese instruments

In the 1950s,[96][97] Japanese electronic musical instruments began influencing the international music industry.[98][99] Ikutaro Kakehashi, who founded Ace Tone in 1960, developed his own version of electronic percussion that had been already popular on the overseas electronic organ.[100] At NAMM 1964, he revealed it as the R-1 Rhythm Ace, a hand-operated percussion device that played electronic drum sounds manually as the user pushed buttons, in a similar fashion to modern electronic drum pads.[100][101][102]


In 1963, Korg released the Donca-Matic DA-20, an electro-mechanical drum machine.[103] In 1965, Nippon Columbia patented a fully electronic drum machine.[104] Korg released the Donca-Matic DC-11 electronic drum machine in 1966, which they followed with the Korg Mini Pops, which was developed as an option for the Yamaha Electone electric organ.[103] Korg's Stageman and Mini Pops series were notable for "natural metallic percussion" sounds and incorporating controls for drum "breaks and fill-ins."[99]

In 1967, Ace Tone founder Ikutaro Kakehashi patented a preset rhythm-pattern generator using a diode matrix circuit,[105] similar to Seeburg's earlier U.S. Patent 3,358,068 filed in 1964, which he released as the FR-1 Rhythm Ace drum machine the same year.[100] It offered 16 preset patterns, and four buttons to manually play each instrument sound (cymbal, claves, cowbell and bass drum). The rhythm patterns could also be cascaded together by pushing multiple rhythm buttons simultaneously, giving more than a hundred possible combinations of rhythm patterns.[100] Ace Tone's Rhythm Ace drum machines found their way into popular music from the late 1960s, followed by Korg drum machines in the 1970s.[99] Kakehashi later left Ace Tone and founded Roland Corporation in 1972, with Roland synthesizers and drum machines becoming highly influential for the next several decades.[100] The company would go on to have a big impact on popular music, and do more to shape popular electronic music than any other company.[102]
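The idea of layering preset patterns can be illustrated with a short Python sketch. It is only a rough model: the two 8-step patterns are hypothetical, and combining them with a step-wise OR is an assumption, not a description of the FR-1's actual diode matrix circuit. The last line simply counts how many distinct pairs can be chosen from 16 presets, which already exceeds a hundred.

```python
from math import comb

# Hypothetical 8-step patterns (1 = hit, 0 = rest); not the FR-1's real presets.
PATTERN_A = [1, 0, 0, 1, 0, 0, 1, 0]
PATTERN_B = [1, 0, 1, 0, 1, 0, 1, 0]

def combine(a, b):
    """Layer two patterns: a step sounds if either pattern has a hit there.

    Assumed behaviour for illustration only.
    """
    return [x | y for x, y in zip(a, b)]

layered = combine(PATTERN_A, PATTERN_B)

# Number of distinct two-button combinations among 16 preset patterns.
pairs = comb(16, 2)
```

Under these assumptions, two-button combinations alone give 120 layered rhythms in addition to the 16 presets.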

Turntablism has origins in the invention of direct-drive turntables. Early belt-drive turntables were unsuitable for turntablism, since they had a slow start-up time, and they were prone to wear-and-tear and breakage, as the belt would break from backspin or scratching.[106] The first direct-drive turntable was invented by Shuichi Obata, an engineer at Matsushita (now Panasonic),[107] based in Osaka, Japan. It eliminated belts, and instead employed a motor to directly drive a platter on which a vinyl record rests.[108] In 1969, Matsushita released it as the SP-10,[108] the first direct-drive turntable on the market,[109] and the first in their influential Technics series of turntables.[108] It was succeeded by the Technics SL-1100 and SL-1200 in the early 1970s, and they were widely adopted by hip hop musicians,[108] with the SL-1200 remaining the most widely used turntable in DJ culture for several decades.[110]

Jamaican dub music

In Jamaica, a form of popular electronic music emerged in the 1960s, dub music, rooted in sound system culture. Dub music was pioneered by studio engineers, such as Sylvan Morris, King Tubby, Errol Thompson, Lee "Scratch" Perry, and Scientist, producing reggae-influenced experimental music with electronic sound technology, in recording studios and at sound system parties.[111] Their experiments included forms of tape-based composition comparable to aspects of musique concrète, an emphasis on repetitive rhythmic structures (often stripped of their harmonic elements) comparable to minimalism, the electronic manipulation of spatiality, the sonic electronic manipulation of pre-recorded musical materials from mass media, deejays toasting over pre-recorded music comparable to live electronic music,[111] remixing music,[112] turntablism,[113] and the mixing and scratching of vinyl.[114]

Despite the limited electronic equipment available to dub pioneers such as King Tubby and Lee "Scratch" Perry, their experiments in remix culture were musically cutting-edge.[112] King Tubby, for example, was a sound system proprietor and electronics technician, whose small front-room studio in the Waterhouse ghetto of western Kingston was a key site of dub music creation.[115]

Late 1960s to early 1980s

Rise of popular electronic music

In the late 1960s, pop and rock musicians, including the Beach Boys and the Beatles, began to use electronic instruments, like the theremin and Mellotron, to supplement and define their sound. In his book Electronic and Experimental Music, Thom Holmes recognises the Beatles' 1966 recording "Tomorrow Never Knows" as the song that "ushered in a new era in the use of electronic music in rock and pop music" due to the band's incorporation of tape loops and reversed and speed-manipulated tape sounds.[116] Also in the late 1960s, the music duo Silver Apples and experimental rock bands like White Noise and the United States of America, are regarded as pioneers to the electronic rock and electronica genres for their work in melding psychedelic rock with oscillators and synthesizers.[117][118][119]

Gershon Kingsley's "Popcorn", composed in 1969, was the first international electronic dance hit, popularised by Hot Butter in 1972 (which led to a wave of bubblegum pop in the following years).

By the end of the 1960s, the Moog synthesizer took a leading place in the sound of emerging progressive rock with bands including Pink Floyd, Yes, Emerson, Lake & Palmer, and Genesis making them part of their sound. Instrumental prog rock was particularly significant in continental Europe, allowing bands like Kraftwerk, Tangerine Dream, Can, Neu!, and Faust to circumvent the language barrier.[120] Their synthesiser-heavy "krautrock", along with the work of Brian Eno (for a time the keyboard player with Roxy Music), would be a major influence on subsequent electronic rock.[121]

Ambient dub was pioneered by King Tubby and other Jamaican sound artists, using DJ-inspired ambient electronics, complete with drop-outs, echo, equalization and psychedelic electronic effects. It featured layering techniques and incorporated elements of world music, deep basslines and harmonic sounds.[122] Techniques such as a long echo delay were also used.[123] Other notable artists within the genre include Dreadzone, Higher Intelligence Agency, The Orb, Ott, Loop Guru, Woob and Transglobal Underground.[124]

Dub music influenced electronic musical techniques later adopted by hip hop music, when Jamaican immigrant DJ Kool Herc in the early 1970s introduced Jamaica's sound system culture and dub music techniques to America. One such technique that became popular in hip hop culture was playing two copies of the same record on two turntables in alternation, extending the b-dancers' favorite section.[125] The turntable eventually went on to become the most visible electronic musical instrument, and occasionally the most virtuosic, in the 1980s and 1990s.[113]

Electronic rock was also produced by several Japanese musicians, including Isao Tomita's Electric Samurai: Switched on Rock (1972), which featured Moog synthesizer renditions of contemporary pop and rock songs,[126] and Osamu Kitajima's progressive rock album Benzaiten (1974).[127] The mid-1970s saw the rise of electronic art musicians such as Jean Michel Jarre, Vangelis, Tomita and Klaus Schulze, who were a significant influence on the development of new-age music.[122] For some years, the hi-tech appeal of these works created a trend of listing the electronic musical equipment used on album sleeves as a distinctive feature. Electronic music began to appear regularly in radio programming and on best-seller charts, as with the French band Space and their 1977 single "Magic Fly".[128]

In this era, rock musicians such as Mike Oldfield and The Alan Parsons Project (credited with the first rock song to feature a digital vocoder, "The Raven", in 1975) also arranged and blended their sound with electronic effects and music, an approach that became much more prominent in the mid-1980s. Jeff Wayne achieved long-lasting success[129] with his 1978 electronic rock musical version of The War of the Worlds.

Film soundtracks also benefited from the electronic sound. In 1977, Gene Page recorded a disco version of John Williams's hit theme from Steven Spielberg's film Close Encounters of the Third Kind. Page's version peaked at #30 on the R&B chart in 1978. The score of the 1978 film Midnight Express, composed by Italian synth pioneer Giorgio Moroder, won the Academy Award for Best Original Score in 1979, as did the score by Vangelis for the 1981 film Chariots of Fire.

After the arrival of punk rock, a form of basic electronic rock emerged, increasingly using new digital technology to replace other instruments. The American duo Suicide, who arose from the punk scene in New York, utilized drum machines and synthesizers in a hybrid between electronics and punk on their eponymous 1977 album.[130]

Pioneering synth-pop bands that enjoyed years of success included Ultravox with their 1977 track "Hiroshima Mon Amour" on Ha!-Ha!-Ha!,[131] Yellow Magic Orchestra with their self-titled album (1978), The Buggles with their prominent 1979 debut single "Video Killed the Radio Star",[132] Gary Numan with his solo debut album The Pleasure Principle and single "Cars" in 1979,[133] Orchestral Manoeuvres in the Dark with their 1979 single "Electricity", featured on their eponymous debut album,[134] Depeche Mode with their first single "Dreaming of Me", recorded in 1980 and released in 1981 on the album Speak & Spell,[135] A Flock of Seagulls with their 1981 single "Talking",[136] New Order with "Ceremony"[137] in 1981, and The Human League with their 1981 hit "Don't You Want Me" from the album Dare.[138]


The definition of MIDI and the development of digital audio made the creation of purely electronic sounds much easier,[139] with audio engineers, producers and composers frequently exploring the possibilities of virtually every new model of electronic sound equipment launched by manufacturers. Synth-pop sometimes used synthesizers to replace all other instruments, but it was more common for bands to have one or more keyboardists in their line-ups along with guitarists, bassists, and/or drummers. These developments led to the growth of synth-pop, which, after it was adopted by the New Romantic movement, allowed synthesizers to dominate the pop and rock music of the early 1980s, until the style began to fall from popularity in the middle to late part of the decade.[138] Along with the aforementioned successful pioneers, key acts included Yazoo, Duran Duran, Spandau Ballet, Culture Club, Talk Talk, Japan, and Eurythmics.

Synth-pop was taken up across the world, with international hits for acts including Men Without Hats, Trans-X and Lime from Canada, Telex from Belgium, Peter Schilling, Sandra, Modern Talking, Propaganda and Alphaville from Germany, Yello from Switzerland and Azul y Negro from Spain. Also, the synth sound is a key feature of Italo-disco.

Some synth-pop bands, such as the Americans Devo and the Spaniards Aviador Dro, adopted futuristic visual styles to reinforce the idea that electronic sounds were linked primarily with technology.

Keyboard synthesizers became so common that even heavy metal bands, whose genre is often regarded by fans of both sides as the opposite of electronic pop in aesthetics, sound and lifestyle, achieved worldwide success with songs such as Van Halen's "Jump" (1983)[140] and Europe's "The Final Countdown" (1986),[141] both of which feature synths prominently.

Proliferation of electronic music research institutions

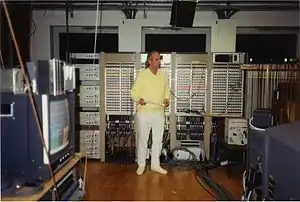

Elektronmusikstudion (EMS), formerly known as Electroacoustic Music in Sweden, is the Swedish national centre for electronic music and sound art. The research organisation started in 1964 and is based in Stockholm.


STEIM is a center for research and development of new musical instruments in the electronic performing arts, located in Amsterdam, Netherlands. STEIM has existed since 1969. It was founded by Misha Mengelberg, Louis Andriessen, Peter Schat, Dick Raaymakers, Jan van Vlijmen, Reinbert de Leeuw, and Konrad Boehmer. This group of Dutch composers had fought for the reformation of Amsterdam's feudal music structures; they insisted on Bruno Maderna's appointment as musical director of the Concertgebouw Orchestra and secured the first public funding for experimental and improvised electronic music in the Netherlands.

IRCAM in Paris became a major center for computer music research and for the realization and development of the Sogitec 4X computer system,[142] which featured then-revolutionary real-time digital signal processing. Pierre Boulez's Répons (1981) for 24 musicians and 6 soloists used the 4X to transform the soloists' sound and route it to a loudspeaker system.

Barry Vercoe describes one of his experiences with early computer sounds:

At IRCAM in Paris in 1982, flutist Larry Beauregard had connected his flute to DiGiugno's 4X audio processor, enabling real-time pitch-following. On a Guggenheim at the time, I extended this concept to real-time score-following with automatic synchronized accompaniment, and over the next two years Larry and I gave numerous demonstrations of the computer as a chamber musician, playing Handel flute sonatas, Boulez's Sonatine for flute and piano and by 1984 my own Synapse II for flute and computer—the first piece ever composed expressly for such a setup. A major challenge was finding the right software constructs to support highly sensitive and responsive accompaniment. All of this was pre-MIDI, but the results were impressive even though heavy doses of tempo rubato would continually surprise my Synthetic Performer. In 1985 we solved the tempo rubato problem by incorporating learning from rehearsals (each time you played this way the machine would get better). We were also now tracking violin, since our brilliant, young flautist had contracted a fatal cancer. Moreover, this version used a new standard called MIDI, and here I was ably assisted by former student Miller Puckette, whose initial concepts for this task he later expanded into a program called MAX.[144]

Keyboard synthesizers

Released in 1970 by Moog Music, the Mini-Moog was among the first widely available, portable and relatively affordable synthesizers. It became the most widely used synthesizer of its time in both popular and electronic art music.[145] Patrick Gleeson, playing live with Herbie Hancock at the beginning of the 1970s, pioneered the use of synthesizers in a touring context, where they were subject to stresses the early machines were not designed for.[146][147]

In 1974, the WDR studio in Cologne acquired an EMS Synthi 100 synthesizer, which a number of composers used to produce notable electronic works—including Rolf Gehlhaar's Fünf deutsche Tänze (1975), Karlheinz Stockhausen's Sirius (1975–76), and John McGuire's Pulse Music III (1978).[148]

Thanks to the miniaturization of electronics in the 1970s, by the start of the 1980s keyboard synthesizers had become lighter and more affordable, integrating all the necessary audio synthesis electronics and the piano-style keyboard in a single slim unit, in sharp contrast with the bulky machinery and "cable spaghetti" used throughout the 1960s and 1970s. The trend began with analog synthesizers and continued with digital synthesizers and samplers (see below).

Digital synthesis

In 1975, the Japanese company Yamaha licensed the algorithms for frequency modulation synthesis (FM synthesis) from John Chowning, who had experimented with it at Stanford University since 1971.[149][150] Yamaha's engineers began adapting Chowning's algorithm for use in a digital synthesizer, adding improvements such as the "key scaling" method to avoid the introduction of distortion that normally occurred in analog systems during frequency modulation.[151]
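As a rough illustration of the technique, the basic two-operator form commonly used to describe FM synthesis is y(t) = sin(2π·fc·t + I·sin(2π·fm·t)), where a modulator sine wave varies the phase of a carrier sine wave. The Python sketch below computes a short tone this way. The function name, parameter names and default values are assumptions chosen for illustration; it is not Chowning's or Yamaha's implementation and omits envelopes and key scaling.

```python
import math

def fm_tone(carrier_hz, modulator_hz, index, num_samples=441, sample_rate=44100):
    """Return samples of sin(2*pi*fc*t + index * sin(2*pi*fm*t)).

    `index` (the modulation index) controls how strong the sidebands are;
    index = 0 gives a plain sine wave at the carrier frequency.
    """
    samples = []
    for n in range(num_samples):
        t = n / sample_rate  # time of this sample in seconds
        modulator = math.sin(2 * math.pi * modulator_hz * t)
        samples.append(math.sin(2 * math.pi * carrier_hz * t + index * modulator))
    return samples

# A 440 Hz carrier modulated at 220 Hz; 441 samples is 10 ms at 44.1 kHz.
tone = fm_tone(carrier_hz=440.0, modulator_hz=220.0, index=2.0)
```

Raising `index` spreads more energy into sidebands around the carrier, which is why a single pair of oscillators can produce a wide range of timbres.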

In 1980, Yamaha eventually released the first FM digital synthesizer, the Yamaha GS-1, but at a high price.[152] In 1983, Yamaha introduced the first stand-alone digital synthesizer, the DX7, which also used FM synthesis and would become one of the best-selling synthesizers of all time.[149] The DX7 was known for its recognizably bright tonalities, which were partly due to its unusually high sampling rate of 57 kHz.[153]

The Korg Poly-800 is a synthesizer released by Korg in 1983. Its initial list price of $795 made it the first fully programmable synthesizer that sold for less than $1000. It had 8-voice polyphony with one digitally controlled oscillator (DCO) per voice.

The Casio CZ-101 was the first and best-selling phase distortion synthesizer in the Casio CZ line. Released in November 1984, it was one of the first (if not the first) fully programmable polyphonic synthesizers that was available for under $500.

The Roland D-50 is a digital synthesizer produced by Roland and released in April 1987. Its features include subtractive synthesis, on-board effects, a joystick for data manipulation, and an analogue synthesis-styled layout design. The external Roland PG-1000 (1987–1990) programmer could also be attached to the D-50 for more complex manipulation of its sounds.

Samplers

A sampler is an electronic or digital musical instrument which uses sound recordings (or "samples") of real instrument sounds (e.g., a piano, violin or trumpet), excerpts from recorded songs (e.g., a five-second bass guitar riff from a funk song) or found sounds (e.g., sirens and ocean waves). The samples are loaded or recorded by the user or by a manufacturer. These sounds are then played back by means of the sampler program itself, a MIDI keyboard, sequencer or another triggering device (e.g., electronic drums) to perform or compose music. Because these samples are usually stored in digital memory, the information can be quickly accessed. A single sample may often be pitch-shifted to different pitches to produce musical scales and chords.
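One simple way to pitch-shift a stored sample is to read through it faster or slower than it was recorded. The Python sketch below assumes equal temperament, where each semitone corresponds to a frequency ratio of 2^(1/12), and uses linear interpolation between neighbouring values. The function name is hypothetical, and real samplers typically use more refined interpolation and looping; this is only a minimal illustration.

```python
def pitch_shift(samples, semitones):
    """Resample a recording so it plays `semitones` higher (lower if negative).

    Like changing tape speed, this also changes the duration: shifting up
    an octave halves the number of output samples.
    """
    ratio = 2 ** (semitones / 12)  # equal-temperament frequency ratio
    out = []
    pos = 0.0  # fractional read position in the source recording
    while pos < len(samples) - 1:
        i = int(pos)
        frac = pos - i
        # Linear interpolation between the two nearest source samples.
        out.append(samples[i] * (1 - frac) + samples[i + 1] * frac)
        pos += ratio
    return out

recording = [0, 1, 2, 3, 4, 5, 6, 7, 8]
octave_up = pitch_shift(recording, 12)  # reads every second sample
```

Mapping each key of a keyboard to a different `semitones` value is what lets a single recording be played as scales and chords.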

Prior to computer memory-based samplers, musicians used tape replay keyboards, which store recordings on analog tape. When a key is pressed the tape head contacts the moving tape and plays a sound. The Mellotron was the most notable model, used by a number of groups in the late 1960s and the 1970s, but such systems were expensive and heavy due to the multiple tape mechanisms involved, and the range of the instrument was limited to three octaves at the most. To change sounds a new set of tapes had to be installed in the instrument. The emergence of the digital sampler made sampling far more practical.

The earliest digital sampling was done on the EMS Musys system, developed by Peter Grogono (software), David Cockerell (hardware and interfacing) and Peter Zinovieff (system design and operation) at their London (Putney) Studio c. 1969.

The first commercially available sampling synthesizer was the Computer Music Melodian by Harry Mendell (1976).

First released in 1977–78,[154] the Synclavier I, which used FM synthesis re-licensed from Yamaha[155] and was sold mostly to universities, proved to be highly influential among both electronic music composers and music producers, including Mike Thorne, an early adopter from the commercial world, due to its versatility, cutting-edge technology, and distinctive sounds.

The first polyphonic digital sampling synthesizer was the Australian-produced Fairlight CMI, first available in 1979. These early sampling synthesizers used wavetable sample-based synthesis.[156]

Birth of MIDI

In 1980, a group of musicians and music merchants met to standardize an interface that new instruments could use to communicate control instructions with other instruments and computers. This standard was dubbed Musical Instrument Digital Interface (MIDI) and resulted from a collaboration between leading manufacturers, initially Sequential Circuits, Oberheim, Roland—and later, other participants that included Yamaha, Korg, and Kawai.[157] A paper was authored by Dave Smith of Sequential Circuits and proposed to the Audio Engineering Society in 1981. Then, in August 1983, the MIDI Specification 1.0 was finalized.

MIDI technology allows a single keystroke, control wheel motion, pedal movement, or command from a microcomputer to activate every device in the studio remotely and in synchrony, with each device responding according to conditions predetermined by the composer.
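To make the idea of a control instruction concrete, the Python sketch below builds the raw bytes of a MIDI 1.0 Note On and Note Off message: a status byte carrying the message type and channel, followed by two data bytes for the note number and velocity. The helper names are hypothetical, and actually sending the bytes to an instrument would need a MIDI interface or library that is not shown here.

```python
def note_on(channel, note, velocity):
    """Build a 3-byte MIDI 1.0 Note On message.

    channel is 0-15 (shown to users as 1-16); note and velocity are 0-127.
    """
    if not 0 <= channel <= 15:
        raise ValueError("channel must be 0-15")
    if not (0 <= note <= 127 and 0 <= velocity <= 127):
        raise ValueError("note and velocity must be 0-127")
    # Status byte: high nibble 0x9 means Note On, low nibble is the channel.
    return bytes([0x90 | channel, note, velocity])

def note_off(channel, note):
    """Build a 3-byte Note Off message (status high nibble 0x8)."""
    if not 0 <= channel <= 15 or not 0 <= note <= 127:
        raise ValueError("channel must be 0-15 and note 0-127")
    return bytes([0x80 | channel, note, 0])

# Middle C (note 60) on the first channel at moderate velocity.
message = note_on(0, 60, 100)
```

Because the messages describe gestures rather than audio, every device that receives them can respond with its own sound, which is what allows one keystroke to drive a whole studio.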

MIDI instruments and software made powerful control of sophisticated instruments easily affordable by many studios and individuals. Acoustic sounds became reintegrated into studios via sampling and sampled-ROM-based instruments.

Miller Puckette developed graphic signal-processing software for the 4X called Max (after Max Mathews) and later ported it to Macintosh (with Dave Zicarelli extending it for Opcode)[158] for real-time MIDI control, making algorithmic composition available to most composers with a modest computer programming background.

Sequencers and drum machines

The early 1980s saw the rise of bass synthesizers, the most influential being the Roland TB-303, a bass synthesizer and sequencer released in late 1981 that later became a fixture in electronic dance music,[159] particularly acid house.[160] One of the first to use it was Charanjit Singh in 1982, though it was not popularized until Phuture's "Acid Tracks" in 1987.[160] Music sequencers began to be used around the mid 20th century, with Tomita's albums of the mid-1970s being later examples.[126] In 1978, Yellow Magic Orchestra were using computer-based technology in conjunction with a synthesiser to produce popular music,[161] making early use of the microprocessor-based Roland MC-8 Microcomposer sequencer.[162][163]

Drum machines, also known as rhythm machines, came into use around the late 1950s, with a later example being Osamu Kitajima's progressive rock album Benzaiten (1974), which used a rhythm machine along with electronic drums and a synthesizer.[127] In 1977, Ultravox's "Hiroshima Mon Amour" was one of the first singles to use the metronome-like percussion of a Roland TR-77 drum machine.[131] In 1980, Roland Corporation released the TR-808, one of the first and most popular programmable drum machines. The first band to use it was Yellow Magic Orchestra in 1980, and it would later gain widespread popularity with the release of Marvin Gaye's "Sexual Healing" and Afrika Bambaataa's "Planet Rock" in 1982.[164] The TR-808 was a fundamental tool in the Detroit techno scene of the late 1980s, and was the drum machine of choice for Derrick May and Juan Atkins.[165]

Chiptunes

The characteristic lo-fi sound of chip music was initially the result of the technical limitations of early computers' sound chips and sound cards; however, the sound has since become sought after in its own right.

Common, inexpensive sound chips in the first home computers of the 1980s include the SID of the Commodore 64 and the General Instrument AY series and its clones (such as the Yamaha YM2149), used in the ZX Spectrum, Amstrad CPC, MSX compatibles and Atari ST models, among others.

Late 1980s to 1990s

Rise of dance music

Synth-pop continued into the late 1980s, with a format that moved closer to dance music, including the work of acts such as British duos Pet Shop Boys, Erasure and The Communards, who achieved success through much of the 1990s.

The trend has continued to the present day, with modern nightclubs worldwide regularly playing electronic dance music (EDM). Today, electronic dance music has radio stations,[166] websites,[167] and publications like Mixmag dedicated solely to the genre. Moreover, the genre has found commercial and cultural significance in the United States and the rest of North America, thanks to the wildly popular big room house/EDM sound that has been incorporated into U.S. pop music[168] and the rise of large-scale commercial raves such as Electric Daisy Carnival, Tomorrowland and Ultra Music Festival.

Advancements

Other recent developments included the Tod Machover (MIT and IRCAM) composition Begin Again Again for "hypercello", an interactive system of sensors measuring physical movements of the cellist. Max Mathews developed the "Conductor" program for real-time tempo, dynamic and timbre control of a pre-input electronic score. Morton Subotnick released a multimedia CD-ROM All My Hummingbirds Have Alibis.

2000s and 2010s

As computer technology has become more accessible and music software has advanced, interacting with music production technology is now possible using means that bear no relationship to traditional musical performance practices:[169] for instance, laptop performance (laptronica),[170] live coding[171] and Algorave. In general, the term Live PA refers to any live performance of electronic music, whether with laptops, synthesizers, or other devices.

Beginning around the year 2000, a number of software-based virtual studio environments emerged, with products such as Propellerhead's Reason and Ableton Live finding popular appeal.[172] Such tools provide viable and cost-effective alternatives to typical hardware-based production studios, and thanks to advances in microprocessor technology, it is now possible to create high-quality music using little more than a single laptop computer. Such advances have democratized music creation,[173] leading to a massive increase in the amount of home-produced electronic music available to the general public via the internet. Software-based instruments and effect units (so-called "plugins") can be incorporated in a computer-based studio using the VST platform. Some of these instruments are more or less exact replicas of existing hardware (such as the Roland D-50, ARP Odyssey, Yamaha DX7 or Korg M1). In many cases, these software-based instruments are sonically indistinguishable from their physical counterparts.

Circuit bending

Circuit bending is the modification of battery-powered toys and synthesizers to create new, unintended sound effects. It was pioneered by Reed Ghazala in the 1960s, and Ghazala coined the name "circuit bending" in 1992.[174]

Modular synth revival

Following the circuit bending culture, musicians also began to build their own modular synthesizers, causing renewed interest in the designs of the early 1960s. Eurorack became a popular system.

See also

- Clavioline

- Electronic sackbut

- List of electronic music genres

- New Interfaces for Musical Expression

- Ondioline

- Sound sculpture

- Spectral music

- Tracker music

- Timeline of electronic music genres

- Live electronic music

Footnotes

- "The stuff of electronic music is electrically produced or modified sounds. ... two basic definitions will help put some of the historical discussion in its place: purely electronic music versus electroacoustic music" (Holmes 2002, p. 6).

- Electroacoustic music may also use electronic effect units to change sounds from the natural world, such as the sound of waves on a beach or bird calls. All types of sounds can be used as source material for this music. Electroacoustic performers and composers use microphones, tape recorders and digital samplers to make live or recorded music. During live performances, natural sounds are modified in real time using electronic effects and audio consoles. The source of the sound can be anything from ambient noise (traffic, people talking) and nature sounds to live musicians playing conventional acoustic or electro-acoustic instruments (Holmes 2002, p. 8).

- "Electronically produced music is part of the mainstream of popular culture. Musical concepts that were once considered radical—the use of environmental sounds, ambient music, turntable music, digital sampling, computer music, the electronic modification of acoustic sounds, and music made from fragments of speech-have now been subsumed by many kinds of popular music. Record store genres including new age, rap, hip-hop, electronica, techno, jazz, and popular song all rely heavily on production values and techniques that originated with classic electronic music" (Holmes 2002, p. 1). "By the 1990s, electronic music had penetrated every corner of musical life. It extended from ethereal sound-waves played by esoteric experimenters to the thumping syncopation that accompanies every pop record" (Lebrecht 1996, p. 106).

- Neill, Ben (2002). "Pleasure Beats: Rhythm and the Aesthetics of Current Electronic Music". Leonardo Music Journal. 12: 3–6. doi:10.1162/096112102762295052. S2CID 57562349.

- Holmes 2002, p. 41

- Swezey, Kenneth M. (1995). The Encyclopedia Americana – International Edition Vol. 13. Danbury, Connecticut: Grolier Incorporated. p. 211; Weidenaar 1995, p. 82

- Holmes 2002, p. 47

- Busoni 1962, p. 95; Russcol 1972, pp. 35–36.

- "To present the musical soul of the masses, of the great factories, of the railways, of the transatlantic liners, of the battleships, of the automobiles and airplanes. To add to the great central themes of the musical poem the domain of the machine and the victorious kingdom of Electricity." Quoted in Russcol 1972, p. 40.

- Russcol 1972, p. 68.

- Holmes 2012, p. 18

- Holmes 2012, p. 21

- Holmes 2012, p. 33; Lee De Forest (1950), Father of radio: the autobiography of Lee de Forest, Wilcox & Follett, pp. 306–307

- Roads 2015, p. 204

- Holmes 2012, p. 24

- Holmes 2012, p. 26

- Holmes 2012, p. 28

- Toop 2016, "Free lines"

- Smirnov 2014, "Russian Electroacoustic Music from the 1930s–2000s"

- Holmes 2012, p. 34

- Holmes 2012, p. 45

- Holmes 2012, p. 46

- Jones, Barrie (2014-06-03). The Hutchinson Concise Dictionary of Music. Routledge. ISBN 978-1-135-95018-7.

- Anonymous 2006.

- Engel 2006, pp. 4 and 7

- Krause 2002 abstract.

- Engel & Hammar 2006, p. 6.

- Snell 2006, scu.edu

- Angus 1984.

- Young 2007, p. 24

- Holmes 2008, pp. 156–57.

- "Musique Concrete was created in Paris in 1948 from edited collages of everyday noise" (Lebrecht 1996, p. 107).

- NB: To the pioneers, an electronic work did not exist until it was "realized" in a real-time performance (Holmes 2008, p. 122).

- Snyder 1998

- Lange 2009, p. 173

- Kurtz 1992, pp. 75–76.

- Anonymous 1972.

- Eimert 1972, p. 349.

- Eimert 1958, p. 2; Ungeheuer 1992, p. 117.

- (Lebrecht 1996, p. 75): "... at Northwest German Radio in Cologne (1953), where the term 'electronic music' was coined to distinguish their pure experiments from musique concrete..."

- Stockhausen 1978, pp. 73–76, 78–79

- "In 1967, just following the world premiere of Hymnen, Stockhausen said about the electronic music experience: '... Many listeners have projected that strange new music which they experienced—especially in the realm of electronic music—into extraterrestrial space. Even though they are not familiar with it through human experience, they identify it with the fantastic dream world. Several have commented that my electronic music sounds "like on a different star", or "like in outer space." Many have said that when hearing this music, they have sensations as if flying at an infinitely high speed, and then again, as if immobile in an immense space. Thus, extreme words are employed to describe such experience, which are not "objectively" communicable in the sense of an object description, but rather which exist in the subjective fantasy and which are projected into the extraterrestrial space'" (Holmes 2002, p. 145).

- Before the Second World War in Japan, several "electrical" instruments seem already to have been developed (see ja:電子音楽#黎明期), and in 1935 a kind of "electronic" musical instrument, the Yamaha Magna Organ, was developed. It seems to be a multi-timbral keyboard instrument based on electrically blown free reeds with pickups, possibly similar to the electrostatic reed organs developed by Frederick Albert Hoschke in 1934 then manufactured by Everett and Wurlitzer until 1961.

- 一時代を画する新楽器完成 浜松の青年技師山下氏 [An epoch-defining new musical instrument was developed by a young engineer Mr.Yamashita in Hamamatsu]. Hochi Shimbun (in Japanese). 1935-06-08.

- 新電氣樂器 マグナオルガンの御紹介 [New Electric Musical Instrument – Introduction of the Magna Organ] (in Japanese). Hamamatsu: 日本樂器製造株式會社 (Yamaha). October 1935.

特許第一〇八六六四号, 同 第一一〇〇六八号, 同 第一一一二一六号 [Patent No. 108664, No. 110068, No. 111216]

- Holmes 2008, p. 106.

- Holmes 2008, pp. 106 & 115.

- Fujii 2004, pp. 64–66.

- Fujii 2004, p. 66.

- Holmes 2008, pp. 106–7.

- Holmes 2008, p. 107.

- Fujii 2004, pp. 66–67.

- Fujii 2004, p. 64.

- Fujii 2004, p. 65.

- Holmes 2008, p. 108.

- Holmes 2008, pp. 108 & 114–5.

- Loubet 1997, p. 11

- Luening 1968, p. 136

- Johnson 2002, p. 2.

- Johnson 2002, p. 4.

- "Carolyn Brown [Earle Brown's wife] was to dance in Cunningham's company, while Brown himself was to participate in Cage's 'Project for Music for Magnetic Tape.'... funded by Paul Williams (dedicatee of the 1953 Williams Mix), who—like Robert Rauschenberg—was a former student of Black Mountain College, which Cage and Cunningham had first visited in the summer of 1948" (Johnson 2002, p. 20).

- Russcol 1972, p. 92.

- Luening 1968, p. 48.

- Luening 1968, p. 49.

- "From at least Louis and Bebe Barron's soundtrack for The Forbidden Planet onwards, electronic music—in particular synthetic timbre—has impersonated alien worlds in film" (Norman 2004, p. 32).

- Doornbusch 2005, p. 25.

- Fildes 2008

- Schwartz 1975, p. 347.

- Harris 2018

- Holmes 2008, pp. 145–46.

- Rhea 1980, p. 64.

- Holmes 2008, p. 153.

- Holmes 2008, pp. 153–54 & 157

- Gayou 2007a, p. 207

- Kurtz 1992, p. 1.

- Glinsky 2000, p. 286.

- "Delia Derbyshire Audiological Chronology".

- Gluck 2005, pp. 164–65.

- Tal & Markel 2002, pp. 55–62.

- Schwartz 1975, p. 124.

- Bayly 1982–83, p. 150.

- "Electronic music". didierdanse.net. Retrieved 2019-06-10.

- "A central figure in post-war electronic art music, Pauline Oliveros [b. 1932] is one of the original members of the San Francisco Tape Music Center (along with Morton Subotnick, Ramon Sender, Terry Riley, and Anthony Martin), which was the resource on the U.S. west coast for electronic music during the 1960s. The Center later moved to Mills College, where she was its first director, and is now called the Center for Contemporary Music." from CD liner notes, "Accordion & Voice", Pauline Oliveros, Record Label: Important, Catalog number IMPREC140: 793447514024.

- Frankenstein 1964.

- Loy 1985, pp. 41–48.

- Begault 1994, p. 208, online reprint.

- Hertelendy 2008.

- "Electronic India 1969–73 revisited – The Wire". The Wire Magazine – Adventures In Modern Music.

- "Algorhythmic Listening 1949–1962 Auditory Practices of Early Mainframe Computing". AISB/IACAP World Congress 2012. Archived from the original on 7 November 2017. Retrieved 18 October 2017.

- Doornbusch, Paul (9 July 2017). "MuSA 2017 – Early Computer Music Experiments in Australia, England and the USA". MuSA Conference. Retrieved 18 October 2017.

- Doornbusch, Paul (2017). "Early Computer Music Experiments in Australia and England". Organised Sound. Cambridge University Press. 22 (2): 297–307 [11]. doi:10.1017/S1355771817000206.

- Doornbusch, Paul. "The Music of CSIRAC". Melbourne School of Engineering, Department of Computer Science and Software Engineering. Archived from the original on 18 January 2012.

- "First recording of computer-generated music – created by Alan Turing – restored". The Guardian. 26 September 2016. Retrieved 28 August 2017.

- "Restoring the first recording of computer music – Sound and vision blog". British Library. 13 September 2016. Retrieved 28 August 2017.

- Mattis 2001.

- Stockhausen 1971, pp. 51, 57, 66.

- "This element of embracing errors is at the centre of Circuit Bending, it is about creating sounds that are not supposed to happen and not supposed to be heard (Gard 2004). In terms of musicality, as with electronic art music, it is primarily concerned with timbre and takes little regard of pitch and rhythm in a classical sense. ... . In a similar vein to Cage's aleatoric music, the art of Bending is dependent on chance, when a person prepares to bend they have no idea of the final outcome" (Yabsley 2007).

- "クロダオルガン修理" [Croda Organ Repair]. CrodaOrganService.com (in Japanese). May 2017.

クロダオルガン株式会社(昭和30年 [1955年]創業、2007年に解散)は約50年の歴史のあいだに自社製造のクロダトーン...の販売、設置をおこなってきましたが、[2007年]クロダオルガン株式会社廃業... [In English: Kuroda Organ Co., Ltd. (founded in 1955, dissolved in 2007) sold and installed its own Kurodatone ... over about 50 years of history, but [in 2007] Kuroda Organ Co., Ltd. closed its business ...]

- "Victor Company of Japan, Ltd.". Diamond's Japan Business Directory (in Japanese). Diamond Lead Company. 1993. p. 752. ISBN 978-4-924360-01-3.

[JVC] Developed Japan's first electronic organ, 1958.

Note: the first model by JVC was "EO-4420" in 1958. See also the Japanese Wikipedia article: "w:ja:ビクトロン#機種".

- Palmieri, Robert (2004). The Piano: An Encyclopedia. Encyclopedia of keyboard instruments (2nd ed.). Routledge. p. 406. ISBN 978-1-135-94963-1.

the development [and release] in 1959 of an all-transistor Electone electronic organ, first in a successful series of Yamaha electronic instruments. It was a milestone for Japan's music industry.

Note: the first model by Yamaha was "D-1" in 1959. See also the Japanese Wikipedia article "w:ja:エレクトーン#D-1".

- Russell Hartenberger (2016), The Cambridge Companion to Percussion, page 84, Cambridge University Press

- Reid, Gordon (2004), "The History Of Roland Part 1: 1930–1978", Sound on Sound (November), retrieved 19 June 2011

- Matt Dean (2011), The Drum: A History, page 390, Scarecrow Press

- "The 14 drum machines that shaped modern music". 22 September 2016.

- "Donca-Matic (1963)". Korg Museum. Korg.

- "Automatic rhythm instrument".

- US patent 3651241, Ikutaro Kakehashi (Ace Electronics Industries, Inc.), "Automatic Rhythm Performance Device", issued 1972-03-21

- The World of DJs and the Turntable Culture, page 43, Hal Leonard Corporation, 2003

- Billboard, May 21, 1977, page 140

- Trevor Pinch, Karin Bijsterveld, The Oxford Handbook of Sound Studies, page 515, Oxford University Press

- "History of the Record Player Part II: The Rise and Fall". Reverb.com. Retrieved 5 June 2016.

- Six Machines That Changed The Music World, Wired, May 2002

- Veal, Michael (2013). "Electronic Music in Jamaica". Dub: Soundscapes and Shattered Songs in Jamaican Reggae. Wesleyan University Press. pp. 26–44. ISBN 9780819574428.

- Nicholas Collins, Margaret Schedel, Scott Wilson (2013), Electronic Music: Cambridge Introductions to Music, page 20, Cambridge University Press

- Nicholas Collins, Julio d'Escrivan Rincón (2007), The Cambridge Companion to Electronic Music, page 49, Cambridge University Press

- Andrew Brown (2012), Computers in Music Education: Amplifying Musicality, page 127, Routledge

- Dubbing Is A Must: A Beginner's Guide To Jamaica's Most Influential Genre, Fact.

- Holmes 2012, p. 468.

- Tom Doyle (October 2010). "Silver Apples: Early Electronica". Sound on Sound. SOS Publications Group. Retrieved 5 October 2020.

- Theodore Stone (May 2, 2018). "The United States of America and the Start of an Electronic Revolution". PopMatters. Retrieved 5 October 2020.

- Alexis Petridis (September 9, 2020). "Silver Apples' Simeon Coxe: visionary who saw music's electronic future". The Guardian. Retrieved 5 October 2020.

- Bussy 2004, pp. 15–17.

- Unterberger 2002, pp. 1330–1.

- Holmes 2008, p. 403.

- Toop, David (1995). Ocean of Sound. Serpent's Tail. p. 115. ISBN 9781852423827.

- Mattingly, Rick (2002). The Techno Primer: The Essential Reference for Loop-based Music Styles. Hal Leonard Corporation. p. 38. ISBN 0634017888. Retrieved 1 April 2013.

- Nicholas Collins, Margaret Schedel, Scott Wilson (2013), Electronic Music: Cambridge Introductions to Music, page 105, Cambridge University Press

- Jenkins 2007, pp. 133–34

- Osamu Kitajima – Benzaiten at Discogs

- Roberts, David, ed. (2005). Guinness World Records – British Hit Singles & Albums (18 ed.). Guinness World Records Ltd. p. 472. ISBN 1-904994-00-8.

- "The Top 40 Best-Selling Studio Albums of All Time". BBC. 2018. Retrieved 29 May 2019.

- D. Nobakht (2004), Suicide: No Compromise, p. 136, ISBN 978-0-946719-71-6

- Maginnis 2011

- "'The Buggles' by Geoffrey Downes" (liner notes). The Age of Plastic 1999 reissue.

- "Item Display – RPM – Library and Archives Canada". Collectionscanada.gc.ca. Archived from the original on 13 January 2012. Retrieved 8 August 2011.

- "Electricity by OMD". Songfacts. Retrieved 23 July 2013.

- Paige, Betty (31 January 1981). "This Year's Mode(L)". Sounds. Archived from the original on 24 July 2011.

- "BBC – Radio 1 – Keeping It Peel – 06/05/1980 A Flock of Seagulls". BBC Radio 1. Retrieved 12 June 2018.

- "Movement 'Definitive Edition'". New Order. 19 December 2018. Retrieved 26 January 2020.

- Anonymous 2010.

- Russ 2009, p. 66.

- Beato, Rick (2019-04-28), What Makes This Song Great? Ep.61 VAN HALEN (#2), retrieved 2019-06-24

- Tengner, Anders; Michael Johansson (1987). Europe – den stora rockdrömmen (in Swedish). Wiken. ISBN 91-7024-408-1.

- Schutterhoef 2007 "Archived copy". Archived from the original on 2013-11-04. Retrieved 2010-01-13.

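- Nicolas Schöffer (December 1983). "V A R I A T I 0 N S sur 600 STRUCTURES SONORES – Une nouvelle méthode de composition musicale sur l' ORDINATEUR 4X" [Variations on 600 Sound Structures – A new method of musical composition on the 4X computer]. Leonardo On-Line (in French). Leonardo/International Society for the Arts, Sciences and Technology (ISAST). Archived from the original on 2012-04-17.

"L'ordinateur nous permet de franchir une nouvelle étape quant à la définition et au codage des sons, et permet de créer des partitions qui dépassent en complexité et en précision les possibilités d'antan. LA METHODE DE COMPOSITION que je propose comporte plusieurs phases et nécessite l'emploi d'une terminologie simple que nous définirons au fur et à mesure : TRAMES, PAVES, BRIQUES et MODULES." [In English: The computer allows us to take a new step in the definition and coding of sounds, and makes it possible to create scores that exceed the possibilities of the past in complexity and precision. The composition method that I propose comprises several phases and requires the use of a simple terminology that we will define as we go: TRAMES, PAVES, BRIQUES and MODULES.]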

- Vercoe 2000, pp. xxviii–xxix.

- "In 1969, a portable version of the studio Moog, called the Minimoog Model D, became the most widely used synthesizer in both popular music and electronic art music" (Montanaro 2004, p. 8).

- Zussman 1982, pp. 1, 5